In this tutorial, we provide an easy-to-understand explanation of the stochastic gradient descent algorithm. In addition, we explain how to implement this algorithm from scratch in Python. A YouTube tutorial accompanying this tutorial is given below.

Before we explain the stochastic gradient method, it is very important to understand the concept of gradient and the concept of batch gradient descent.

What is a Gradient?

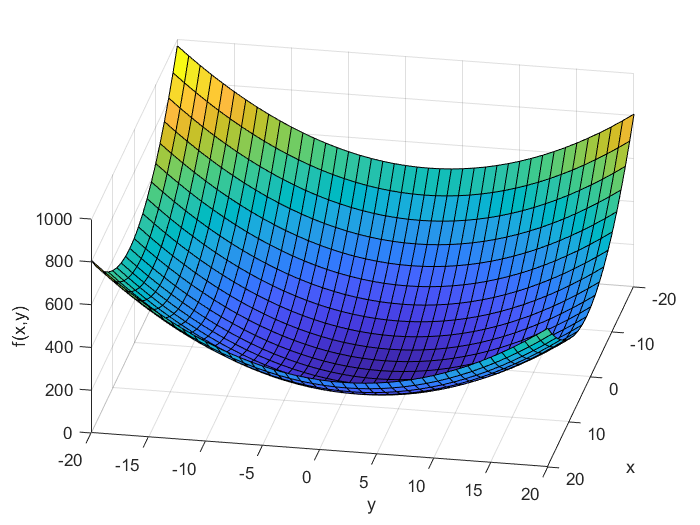

Let us first explain the mathematical concept of a gradient. Let us consider the following function

(1) ![]()

where $x$ and $y$ are independent variables, and the function value $f(x, y)$ is a real scalar. This function is illustrated in the figure below.

Now, the question is:

What is the gradient of this function at a certain point?

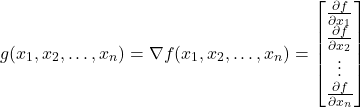

From the mathematical point of view, the gradient is a vector defined by the following equation

(2) $\nabla f = \begin{bmatrix} \dfrac{\partial f}{\partial x} \\ \dfrac{\partial f}{\partial y} \end{bmatrix}$

where $\nabla$ (nabla) is a symbol transforming $f$ into the gradient, $\nabla f$ is the gradient vector, and $\dfrac{\partial f}{\partial x}$ and $\dfrac{\partial f}{\partial y}$ are the partial derivatives of $f$ with respect to $x$ and $y$.
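As a rough numerical illustration of the gradient definition, the partial derivatives can be approximated by central finite differences. The sketch below rests on assumptions: the function `example_function`, the evaluation point, and the helper name `numerical_gradient` are hypothetical and are chosen only for illustration.

```python
def numerical_gradient(f, x, y, h=1e-6):
    """Approximate the gradient [df/dx, df/dy] of f at (x, y) by central differences."""
    df_dx = (f(x + h, y) - f(x - h, y)) / (2.0 * h)
    df_dy = (f(x, y + h) - f(x, y - h)) / (2.0 * h)
    return [df_dx, df_dy]


def example_function(x, y):
    # hypothetical test function, assumed only for this sketch
    return x**2 + y**2


# evaluate the approximate gradient at a hypothetical point (1, 2)
gradient_at_point = numerical_gradient(example_function, 1.0, 2.0)
print(gradient_at_point)
```

For this particular test function, the analytical partial derivatives are $2x$ and $2y$, so the printed values should be close to 2 and 4.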

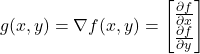

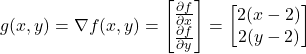

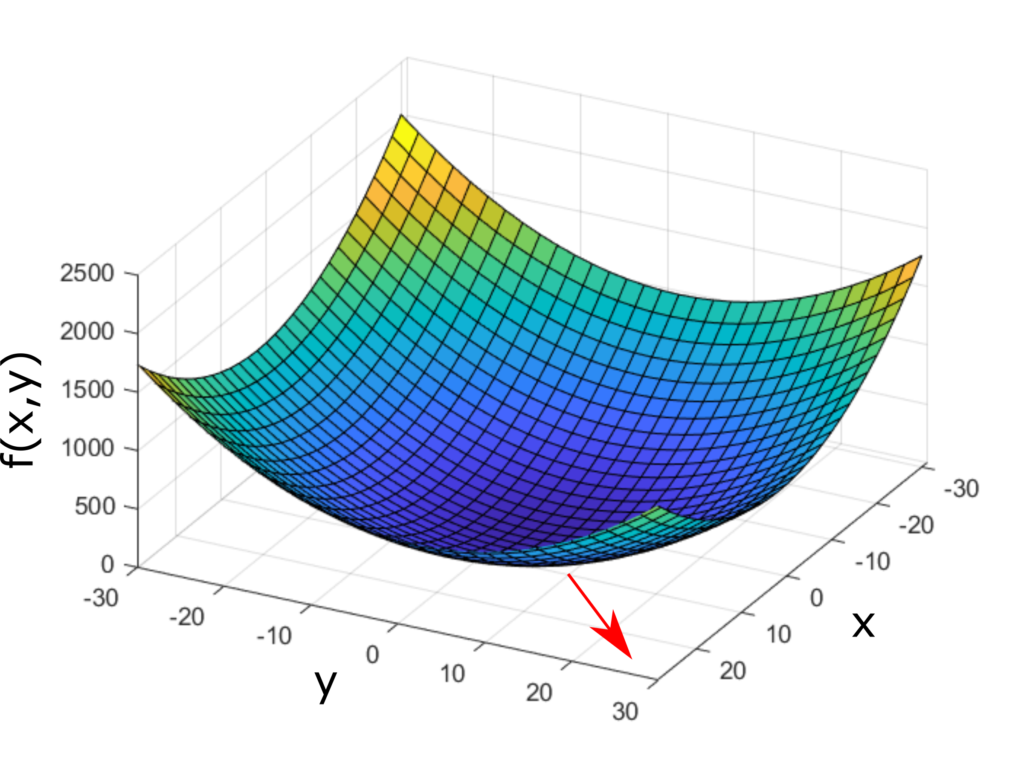

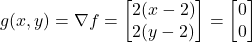

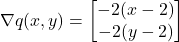

Let us compute the gradient of the function (1). The gradient is given by the following equation

(3)

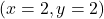

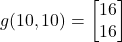

The gradient at the point ![]() is equal to

(4) ![]()

This gradient is shown in the figure below (red arrow).

When the function $f$ depends on $n$ variables $x_1$, $x_2$, …, $x_n$, then the gradient is defined by

(5) $\nabla f = \begin{bmatrix} \dfrac{\partial f}{\partial x_1} & \dfrac{\partial f}{\partial x_2} & \cdots & \dfrac{\partial f}{\partial x_n} \end{bmatrix}^{T}$

For presentation clarity, let us return to the example of the function of two variables. Everything explained in this tutorial can easily be generalized to the case of functions depending on three or more variables. Several important facts about this gradient should be observed:

- The gradient is equal to zero at the point at which the function achieves its minimum. Setting the gradient equal to zero, we have

(6)

This expression is zero for a single pair of values of $x$ and $y$, and from the form of the function (1), we conclude that the minimum is achieved at that point.

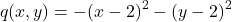

On the other hand, if our function was, for example,

(7)

then the gradient is

(8)

This function achieves its maximum value at the point for which its gradient is equal to zero. These simple examples confirm that the candidate points for (local) minima and maxima of functions are the points at which their gradients are equal to zero. Keep in mind that a zero gradient is only a necessary condition for a local extremum. The sufficient conditions involve the Hessians of functions. This topic is outside the scope of this tutorial.

- The gradient is a vector whose value at a certain point gives the direction and rate of the (locally) fastest increase of the function $f$. For example, the gradient of the function $f$ defined by the equation (1), evaluated at a selected point, is

(9)

That is, the direction of the fastest increase is a vector

(10)

Let us numerically illustrate this fact. That is, let us show that, starting at the selected point, the direction of the fastest increase of the function is positively collinear with the vector (10). Suppose that, at this point, we move in the direction of a vector that is positively collinear with the gradient vector. We assume that we take a small step in this direction. We can select an infinite number of vectors that are collinear with the gradient vector. The most natural choice is the following unit vector:

(11)

That is, the new point at which we want to evaluate the value of the function $f$ is

(12)

The value of the function $f$ at this point is

(13)

Now, let us assume that, at the same starting point, we move in the direction of another unit vector, which makes an angle of 60 degrees with respect to the x-axis. Again, we assume that we take a small step in this direction. The vector is

(14) $\begin{bmatrix} \cos 60^\circ \\ \sin 60^\circ \end{bmatrix} = \begin{bmatrix} 1/2 \\ \sqrt{3}/2 \end{bmatrix}$

Let us evaluate the value of the function $f$ at a new point in the direction of this vector. The new point is defined by

(15)

The value of the function $f$ at this point is

(16)

This value is smaller than the value (13) obtained by stepping in the direction of the gradient vector. Similarly, it can be numerically verified that if we take a small step from the same starting point along any unit vector other than the one that is positively collinear with the gradient, we always obtain a smaller function value than the value obtained by stepping along the unit vector positively collinear with the gradient vector.
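The comparison of directions described above can be checked with a few lines of Python. This is a minimal sketch under stated assumptions: the function `f`, its gradient `gradient_f`, the starting point, and the step length are hypothetical choices made for illustration and are not taken from the equations of the tutorial.

```python
import math


def f(x, y):
    # hypothetical example function (an assumption made for this sketch)
    return x**2 + y**2


def gradient_f(x, y):
    # analytical gradient of the example function above
    return (2.0 * x, 2.0 * y)


x0, y0 = 1.0, 2.0  # hypothetical starting point
step = 0.01        # small step length

# unit vector positively collinear with the gradient at the starting point
gx, gy = gradient_f(x0, y0)
norm = math.hypot(gx, gy)
ux, uy = gx / norm, gy / norm
value_along_gradient = f(x0 + step * ux, y0 + step * uy)

# unit vector that makes an angle of 60 degrees with the x-axis
angle = math.radians(60.0)
value_along_60_degrees = f(x0 + step * math.cos(angle), y0 + step * math.sin(angle))

# function values after the same small step along unit vectors at 0, 1, ..., 359 degrees
values_for_all_angles = [
    f(x0 + step * math.cos(math.radians(a)), y0 + step * math.sin(math.radians(a)))
    for a in range(360)
]

print(value_along_gradient, value_along_60_degrees, max(values_for_all_angles))
```

For this example, the value obtained along the gradient direction should be the largest of all printed values, which is consistent with the statement that the gradient points in the direction of the fastest increase.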

To properly understand gradients, it is also important to introduce the concept of level curves for the case when the function $f$ depends on two variables and the concept of level surfaces for the case when the function $f$ depends on three or more variables. Consider the function $f(x, y)$. The level curve of this function is defined by

(17) $f(x, y) = c$

where $c$ is a constant. The equation (17) defines a curve that is the intersection of the graph of the function $f$ with the horizontal plane that is parallel to the xy plane and located at the distance of $c$ from the xy plane.

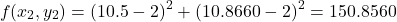

In the case of the function defined by (1), the level curves are circles centered at ![]() with the radius of ![]():

(18) ![]()

The figure below illustrates the level curve.

In the same manner, we can define the level surfaces for functions with three or more variables. For example, for the function

(19) ![]()

the level surface is given by the following equation

(20) ![]()

This is a sphere with the radius of ![]() centered at zero.

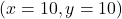

Why are the level curves and level surfaces relevant to the concept of gradients?

The reason is the following:

- The gradient at a point is perpendicular to the level curve (level surface) that passes through that point. More precisely, the gradient at a given point is perpendicular to the tangent line (the tangent plane in the case of level surfaces) of the level curve (level surface) at that point. This is illustrated in the figure below for the function (1) and one of its level curves.
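The perpendicularity of the gradient and the level curve can also be verified numerically. The sketch below assumes a hypothetical function $f(x, y) = x^2 + y^2$, whose level curves are circles, and a hypothetical level value `c`. It evaluates the dot product of the gradient and the tangent vector of the level curve at several points of the curve.

```python
import math


def gradient_f(x, y):
    # gradient of the hypothetical function f(x, y) = x**2 + y**2
    return (2.0 * x, 2.0 * y)


c = 4.0                # hypothetical level value, f(x, y) = c
radius = math.sqrt(c)  # for this f, the level curve is a circle with this radius

dot_products = []
for t_degrees in range(0, 360, 30):
    t = math.radians(t_degrees)
    # point on the level curve, parametrized by the angle t
    x, y = radius * math.cos(t), radius * math.sin(t)
    # tangent vector of the level curve at this point (derivative with respect to t)
    tangent = (-radius * math.sin(t), radius * math.cos(t))
    gx, gy = gradient_f(x, y)
    dot_products.append(gx * tangent[0] + gy * tangent[1])

# the largest absolute dot product should be zero up to rounding errors
print(max(abs(d) for d in dot_products))
```

A dot product equal to zero (up to rounding errors) at every tested point indicates that the gradient is perpendicular to the tangent of the level curve at that point.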

Batch Gradient Descent Method

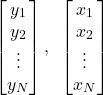

Now that we understand the concept of the gradient, let us explain how this concept can be used to solve optimization problems in batch form. The method is called the batch gradient descent method, and it is also known as the steepest descent method or simply the gradient descent method. The word “batch” refers to the data batch; that is, optimization problems in batch form depend on a data batch:

(21)

where

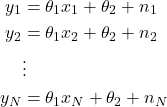

Here, for presentation clarity, we assume that these data points are related through a linear model that we want to estimate. However, everything explained in this tutorial can easily be generalized to nonlinear models. The linear model is

(22) $y = \theta_1 x + \theta_2$

where $\theta_1$ and $\theta_2$ are the unknown parameters of the linear model that we need to estimate. The previous equation is an ideal representation of a physical system or a process. For example, the model (22) can represent a calibration curve of a sensor: $x$ might be a measured voltage, and $y$ can be a physical variable that we are interested in observing, such as distance, pressure, or temperature. In practice, the data described by the model (22) are always corrupted by measurement noise.

The goal is to estimate the parameters $\theta_1$ and $\theta_2$ from the data batch (21).

By using the data points and the model (22), we have

(23) $y_i = \theta_1 x_i + \theta_2 + n_i, \quad i = 1, 2, \ldots, N$

where $n_i$ represents the measurement noise for the data point $(x_i, y_i)$, and $N$ is the number of data points.

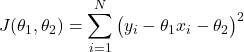

To estimate the parameters, we form the batch cost function:

(24)

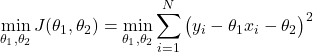

and we form the batch optimization problem

(25)

This optimization problem is a linear least-squares problem for which a closed-form solution exists. This is explained in our previous post. However, in this tutorial, we will not use the closed-form solution, since it is only applicable to linear least-squares problems. Instead, we will use the batch gradient descent method (also known as the gradient descent method or the steepest descent method), which is applicable to a much more general class of optimization problems, including nonlinear optimization problems and nonlinear least-squares problems.
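For comparison with the iterative method, the closed-form least-squares solution for a straight-line model can be sketched in a few lines. This is an illustrative sketch and not the code from the previous post: the function name `fit_line_closed_form` and the noise-free data set are hypothetical, and the formulas are the standard normal-equation solution for a line with slope `theta1` and intercept `theta2`.

```python
def fit_line_closed_form(x, y):
    """Least-squares estimates of the slope theta1 and the intercept theta2."""
    n = len(x)
    sum_x = sum(x)
    sum_y = sum(y)
    sum_xx = sum(xi * xi for xi in x)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    # solution of the 2x2 normal equations of the linear least-squares problem
    theta1 = (n * sum_xy - sum_x * sum_y) / (n * sum_xx - sum_x**2)
    theta2 = (sum_y - theta1 * sum_x) / n
    return theta1, theta2


# hypothetical noise-free data generated with theta1 = 2 and theta2 = -2
x_data = [-2.0, -1.0, 0.0, 1.0, 2.0]
y_data = [2.0 * xi - 2.0 for xi in x_data]

theta1_hat, theta2_hat = fit_line_closed_form(x_data, y_data)
print(theta1_hat, theta2_hat)
```

Such a closed-form estimate can serve as a reference value when checking whether an iterative method has converged.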

The idea of the batch gradient descent is to approximate the solution of the optimization problem (25) iteratively. Let the optimization variables be grouped inside the vector $\boldsymbol{\theta}$, defined by

(26) $\boldsymbol{\theta} = \begin{bmatrix} \theta_1 \\ \theta_2 \end{bmatrix}$

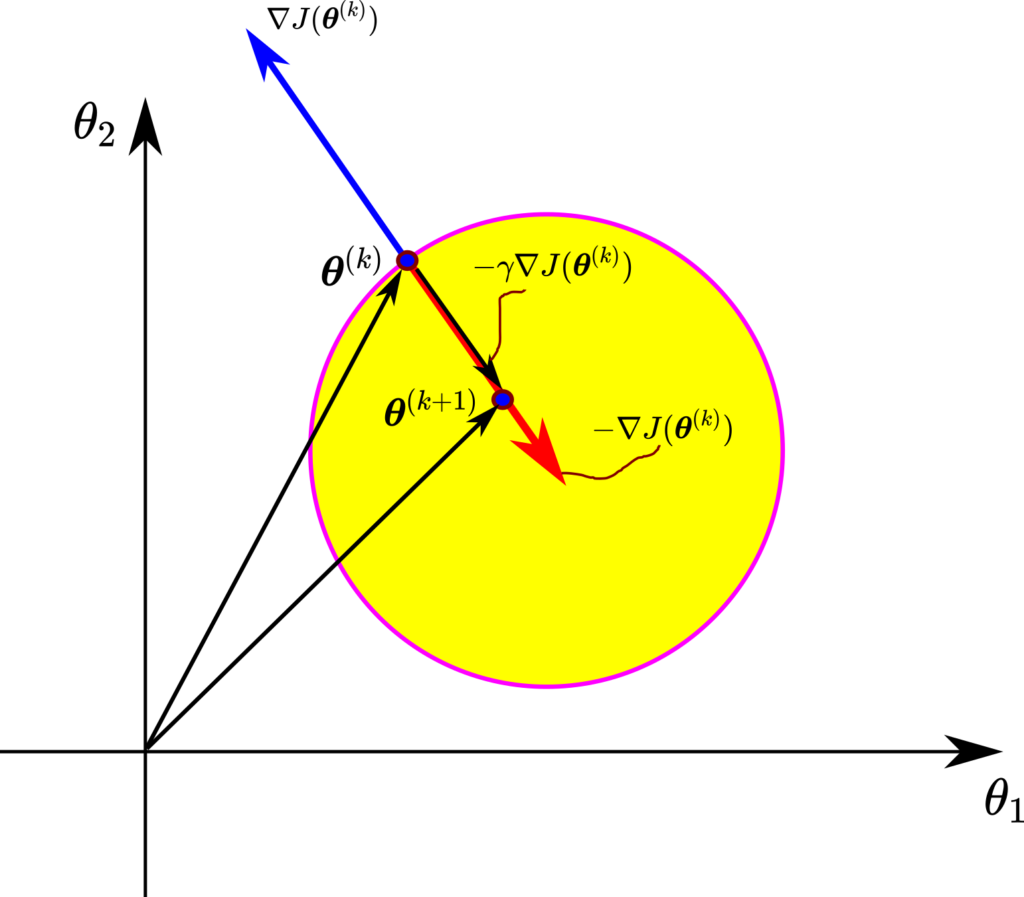

The batch gradient descent method approximates the solution by propagating the iteration

(27) $\boldsymbol{\theta}^{(k+1)} = \boldsymbol{\theta}^{(k)} - \alpha \nabla J\left(\boldsymbol{\theta}^{(k)}\right)$

where

- The vector of optimization variables at the iteration $k$ is

(28) $\boldsymbol{\theta}^{(k)} = \begin{bmatrix} \theta_1^{(k)} \\ \theta_2^{(k)} \end{bmatrix}$

- $k$ is the index of the current iteration.
- $\alpha$ is the step size (learning rate). This is a relatively small positive real number that is either selected by the user or computed by specialized methods, such as line search methods. Here, we assume that this number is constant and independent of the index $k$ of the current iteration. However, this number can also depend on the current iteration.
- The gradient of the cost function $J$ evaluated at $\boldsymbol{\theta}^{(k)}$ is denoted by

(29) $\nabla J\left(\boldsymbol{\theta}^{(k)}\right)$

We propagate the batch gradient descent iteration (27) for $k = 0, 1, 2, \ldots$ until convergence of the cost function or of the vector of optimization variables.
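One possible way to implement "until convergence of the cost function" is to stop when the cost changes by less than a tolerance between two consecutive iterations. The sketch below is an assumption-based illustration for a straight-line model: the data set, the step size, the tolerance, and all names are hypothetical.

```python
def cost(theta1, theta2, x, y):
    # sum of squared errors of the straight-line model
    return sum((yi - theta1 * xi - theta2) ** 2 for xi, yi in zip(x, y))


def gradient(theta1, theta2, x, y):
    # partial derivatives of the cost with respect to theta1 and theta2
    g1 = sum(-2.0 * (yi - theta1 * xi - theta2) * xi for xi, yi in zip(x, y))
    g2 = sum(-2.0 * (yi - theta1 * xi - theta2) for xi, yi in zip(x, y))
    return g1, g2


# hypothetical noise-free data generated with theta1 = 2 and theta2 = -2
x_data = [-2.0, -1.0, 0.0, 1.0, 2.0]
y_data = [2.0 * xi - 2.0 for xi in x_data]

step_size = 0.02        # hypothetical constant step size
tolerance = 1e-12       # stop when the cost changes by less than this value
max_iterations = 10000  # safeguard against an endless loop

theta1, theta2 = 0.0, 0.0
previous_cost = cost(theta1, theta2, x_data, y_data)
iterations_used = max_iterations

for k in range(max_iterations):
    g1, g2 = gradient(theta1, theta2, x_data, y_data)
    theta1 -= step_size * g1
    theta2 -= step_size * g2
    current_cost = cost(theta1, theta2, x_data, y_data)
    if abs(previous_cost - current_cost) < tolerance:
        iterations_used = k + 1
        break
    previous_cost = current_cost

print(iterations_used, theta1, theta2)
```

A tolerance on the change of the parameter vector could be used instead of, or in addition to, the tolerance on the cost function.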

So, what is the physical motivation behind the batch gradient descent method (27)?

Consider the illustration shown after this paragraph. We learned that the gradient vector points in the direction of the fastest increase of a function, and that its magnitude is equal to the rate of this increase. If we add a minus sign in front of the gradient, we obtain the opposite direction, along which the function locally decreases the fastest. Consequently, we should expect the cost function to decrease if we move the current vector of optimization variables $\boldsymbol{\theta}^{(k)}$ in this direction. However, we need to scale our step, so we multiply the negative gradient by a step size that is usually a small number. If we add this negative and scaled gradient to the vector $\boldsymbol{\theta}^{(k)}$, we obtain the new vector of optimization variables $\boldsymbol{\theta}^{(k+1)}$. For a sufficiently small step size, the cost function value at $\boldsymbol{\theta}^{(k+1)}$ is smaller than the cost function value at $\boldsymbol{\theta}^{(k)}$. We repeat this procedure iteratively for $k = 0, 1, 2, \ldots$.
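The role of the step size can be illustrated with a single update of the parameters. In the sketch below, which uses a hypothetical data set and hypothetical step sizes, one step along the negative gradient with a small step size decreases the cost, whereas the same step with a step size that is too large increases it.

```python
def cost(theta1, theta2, x, y):
    # sum of squared errors of the straight-line model
    return sum((yi - theta1 * xi - theta2) ** 2 for xi, yi in zip(x, y))


def gradient(theta1, theta2, x, y):
    # partial derivatives of the cost with respect to theta1 and theta2
    g1 = sum(-2.0 * (yi - theta1 * xi - theta2) * xi for xi, yi in zip(x, y))
    g2 = sum(-2.0 * (yi - theta1 * xi - theta2) for xi, yi in zip(x, y))
    return g1, g2


def one_step(theta1, theta2, step_size, x, y):
    # a single update along the negative, scaled gradient
    g1, g2 = gradient(theta1, theta2, x, y)
    return theta1 - step_size * g1, theta2 - step_size * g2


# hypothetical noise-free data generated with theta1 = 2 and theta2 = -2
x_data = [-2.0, -1.0, 0.0, 1.0, 2.0]
y_data = [2.0 * xi - 2.0 for xi in x_data]

theta_start = (0.0, 0.0)
initial_cost = cost(theta_start[0], theta_start[1], x_data, y_data)

# one update with a small step size and one update with an overly large step size
theta_small = one_step(theta_start[0], theta_start[1], 0.02, x_data, y_data)
theta_large = one_step(theta_start[0], theta_start[1], 0.2, x_data, y_data)

cost_small_step = cost(theta_small[0], theta_small[1], x_data, y_data)
cost_large_step = cost(theta_large[0], theta_large[1], x_data, y_data)

print(initial_cost, cost_small_step, cost_large_step)
```

This is why the step size is usually chosen as a relatively small number or computed by a line search.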

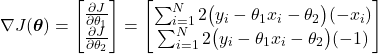

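Let us compute the gradient of the cost function (24), denoted by $J$, so that we can implement the batch gradient descent method in Python. The gradient vector is given by

(30) $\nabla J = \begin{bmatrix} \dfrac{\partial J}{\partial \theta_1} \\ \dfrac{\partial J}{\partial \theta_2} \end{bmatrix} = \begin{bmatrix} -2 \sum_{i=1}^{N} \left( y_i - \theta_1 x_i - \theta_2 \right) x_i \\ -2 \sum_{i=1}^{N} \left( y_i - \theta_1 x_i - \theta_2 \right) \end{bmatrix}$

By introducing the error variable

(31) $e_i = y_i - \theta_1 x_i - \theta_2$

and by substituting this definition in (30), we have

(32) $\nabla J = \begin{bmatrix} -2 \sum_{i=1}^{N} e_i x_i \\ -2 \sum_{i=1}^{N} e_i \end{bmatrix}$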
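Before using an analytical gradient inside an optimization loop, it is often useful to compare it with a finite-difference approximation. The sketch below does this for the sum-of-squared-errors cost of a straight-line model. The data values, the test point, and all function names are hypothetical and serve only as an illustration.

```python
def cost(theta1, theta2, x, y):
    # sum of squared errors of the straight-line model
    return sum((yi - theta1 * xi - theta2) ** 2 for xi, yi in zip(x, y))


def analytical_gradient(theta1, theta2, x, y):
    # gradient expressed through the errors e_i = y_i - theta1*x_i - theta2
    errors = [yi - theta1 * xi - theta2 for xi, yi in zip(x, y)]
    g1 = -2.0 * sum(e * xi for e, xi in zip(errors, x))
    g2 = -2.0 * sum(errors)
    return g1, g2


def finite_difference_gradient(theta1, theta2, x, y, h=1e-6):
    # central-difference approximation of the two partial derivatives
    g1 = (cost(theta1 + h, theta2, x, y) - cost(theta1 - h, theta2, x, y)) / (2.0 * h)
    g2 = (cost(theta1, theta2 + h, x, y) - cost(theta1, theta2 - h, x, y)) / (2.0 * h)
    return g1, g2


# hypothetical noisy data scattered around the line y = 2x - 2
x_data = [-2.0, -1.0, 0.0, 1.0, 2.0]
y_data = [-5.9, -4.1, -2.0, 0.1, 1.9]

analytic = analytical_gradient(0.5, 1.0, x_data, y_data)
numeric = finite_difference_gradient(0.5, 1.0, x_data, y_data)
print(analytic, numeric)
```

If the two results differ noticeably, the analytical expression or its implementation most likely contains an error.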

Python Implementation of Batch Gradient Method

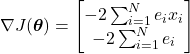

First, we import the necessary libraries and generate the data batch.

    # -*- coding: utf-8 -*-
    """
    Python implementation of the batch gradient descent and stochastic gradient descent
    """
    import numpy as np
    import matplotlib.pyplot as plt

    # generate the data
    # true values of the parameters that we want to estimate
    theta1true=2
    theta2true=-2

    xValues=np.linspace(-5,5,25)
    yValues=np.zeros(xValues.shape)

    for i in range(len(xValues)):
        # here, change the constant multiplying np.random.normal() to increase/decrease the measurement noise
        yValues[i]=theta1true*xValues[i]+theta2true+0.01*np.random.normal()

    # plot the data set
    plt.plot(xValues,yValues,'bo')
    plt.title('Training data')
    plt.xlabel('x values')
    plt.ylabel('y values')
    plt.savefig('dataBatch.png',dpi=600)
    plt.show()

We select the true values of the parameters $\theta_1 = 2$ and $\theta_2 = -2$. By using the code line

    yValues[i]=theta1true*xValues[i]+theta2true+0.01*np.random.normal()

we generate the vector “yValues”. Also, keep in mind that by adding the term “0.01*np.random.normal()” we introduce the measurement noise. Initially, we assume a small noise level in order to test the convergence of the method. The data that will be used for estimation is shown below.

Next, we define two functions. The first function returns the gradient of the cost function for the data-batch vectors “xValues” and “yValues” and for the current value of the optimization vector $\boldsymbol{\theta}$. The second function returns the value of the cost function for the current value of the optimization vector and for the data-batch vectors. These functions are given below.

    # this function returns the gradient of the cost function
    # formed for the data batch vectors x and y, and for the thetaParameterVector
    # define the batch gradient function
    def gradientBatch(thetaParameterVector, x, y):
        gradientBatchValue=np.zeros(shape=(2,1))
        gradientTheta1=0
        gradientTheta2=0
        for j in range(len(x)):
            # error between the measured output and the model output
            error=(y[j]-thetaParameterVector[0]*x[j]-thetaParameterVector[1])
            gradientTheta1=gradientTheta1-2*error*x[j]
            gradientTheta2=gradientTheta2-2*error
        gradientBatchValue[0]=gradientTheta1
        gradientBatchValue[1]=gradientTheta2
        return gradientBatchValue

    # define the cost function for the current value of the optimization vector
    # and for the data batch vectors x and y
    def costFunction(thetaParameterVector, x, y):
        # the local variable has a different name than the function to avoid shadowing
        costValue=0
        for j in range(len(x)):
            costValue=costValue+(y[j]-thetaParameterVector[0]*x[j]-thetaParameterVector[1])**2
        return costValue

Next, we select the number of iterations of the batch gradient descent method, the step size, and the initial guess of the optimization vector. Then, we define a for loop that implements the batch gradient descent method given by the equation (27). The code lines shown below perform these tasks.

    # batch gradient descent parameters
    # number of iterations
    numberIterationsGradient=1000
    # step size
    stepSizeBatchGradient=0.0001
    # initial value of the optimization variables
    thetaInitial=np.random.normal(size=(2,1))

    # these lists store the optimization parameters during iterations
    theta1List=[]
    theta2List=[]
    # this list stores values of the cost function
    costFunctionList=[]

    # initialize the vector of optimization variables
    thetaCurrent=thetaInitial

    # here we iteratively update the vector of optimization variables
    for i in range(numberIterationsGradient):
        theta1List.append(thetaCurrent[0][0])
        theta2List.append(thetaCurrent[1][0])
        costFunctionList.append(costFunction(thetaCurrent,xValues,yValues))
        thetaNext=thetaCurrent-stepSizeBatchGradient*gradientBatch(thetaCurrent,xValues,yValues)
        thetaCurrent=thetaNext
        print(costFunctionList[i])

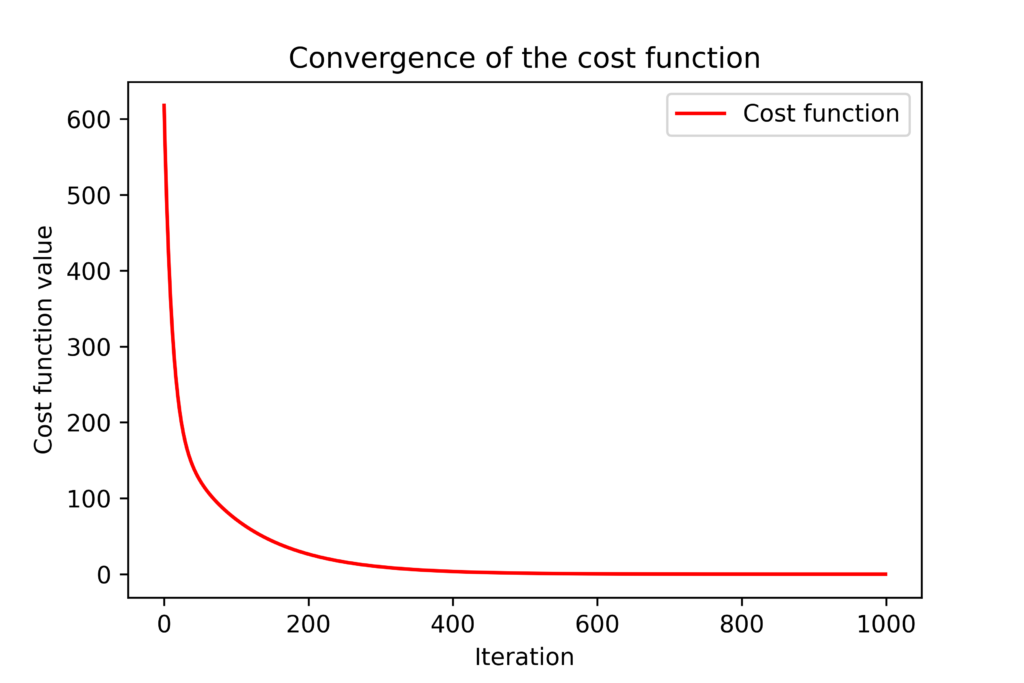

The batch gradient descent iteration is implemented in code line 23. We call the previously defined function “gradientBatch” which returns the value of the gradient at the current value of the optimization vector. We also update the list storing the current values of entries of the optimization vector and the current value of the cost function. This is important for investigating the convergence of the method. The figure below shows the convergence of the cost function ![]() with respect to the optimization iteration index

with respect to the optimization iteration index ![]() .

.

.

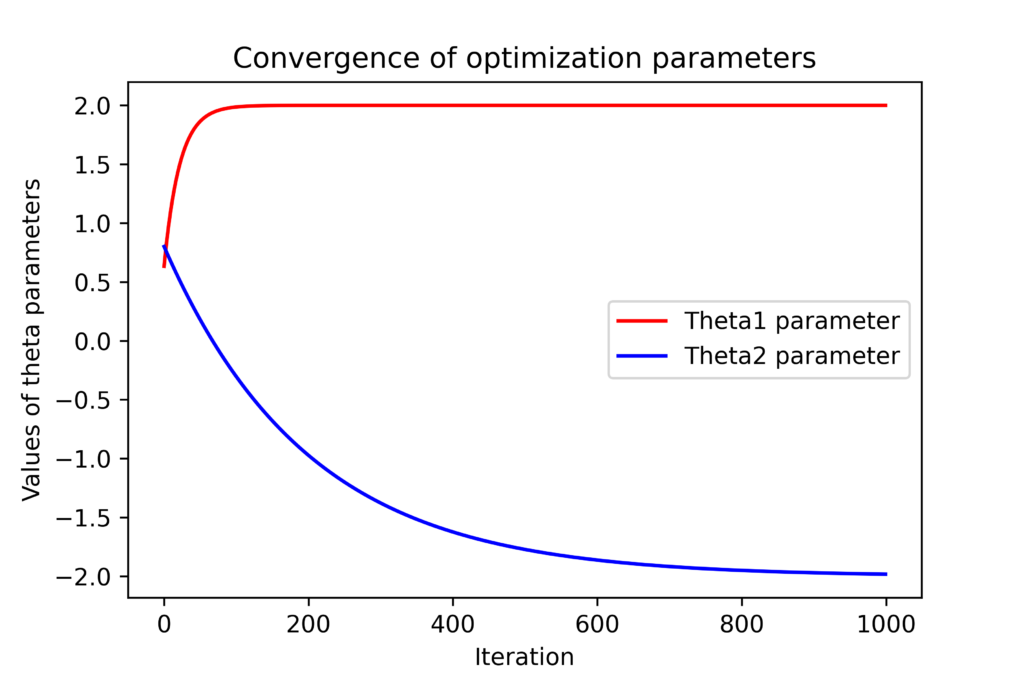

.The figure below shows the convergence of the parameters ![]() and

and ![]() which are the entries of the optimization vector

which are the entries of the optimization vector ![]() .

.

and

and  with respect to the optimization iteration index

with respect to the optimization iteration index  .4

.4We can observe that the entries of the optimization vector converge to the true values. However, they will never converge to the exact values due to the introduced measurement noise, unless we have an extremely large number of data samples. The figures shown above are generated by using these code lines.

    # convergence of the cost function
    plt.title('Convergence of the cost function')
    plt.plot(costFunctionList,'r',label='Cost function')
    plt.xlabel('Iteration')
    plt.ylabel('Cost function value')
    plt.legend()
    plt.savefig('convergenceCostFunction.png',dpi=600)
    plt.show()

    # convergence of the entries of the optimization vector
    plt.title('Convergence of optimization parameters')
    plt.plot(theta1List,'r',label='Theta1 parameter')
    plt.plot(theta2List,'b',label='Theta2 parameter')
    plt.xlabel('Iteration')
    plt.ylabel('Values of theta parameters')
    plt.legend()
    plt.savefig('convergenceTheta.png',dpi=600)
    plt.show()