In this post, we provide a brief introduction to Linear Quadratic Regulator (LQR) for set point control. Furthermore, we explain how to compute and simulate the LQR algorithm in MATLAB. The main motivation for developing this lecture comes from the fact that most application-oriented tutorials available online are focusing on the simplified case in which the set point is a zero state (equilibrium point). However, less attention has been dedicated to the problem of regulating the system state to non-zero set points (desired states). The purpose of this lecture and the accompanying YouTube video is to explain how to compute and simulate a general form of the LQR algorithm that works for arbitrary set points. The YouTube video accompanying this post is given below. The GitHub page with all the codes is given here.

The LQR algorithm is arguably one of the simplest optimal control algorithms. Good books for learning optimal control are:

1.) Kwakernaak, H., & Sivan, R. (1972). Linear optimal control systems (Vol. 1, p. 608). New York: Wiley-Interscience.

2.) Lewis, F. L., Vrabie, D., & Syrmos, V. L. (2012). Optimal Control. John Wiley & Sons.

3.) Anderson, B. D., & Moore, J. B. (2007). Optimal Control: Linear Quadratic Methods. Courier Corporation.

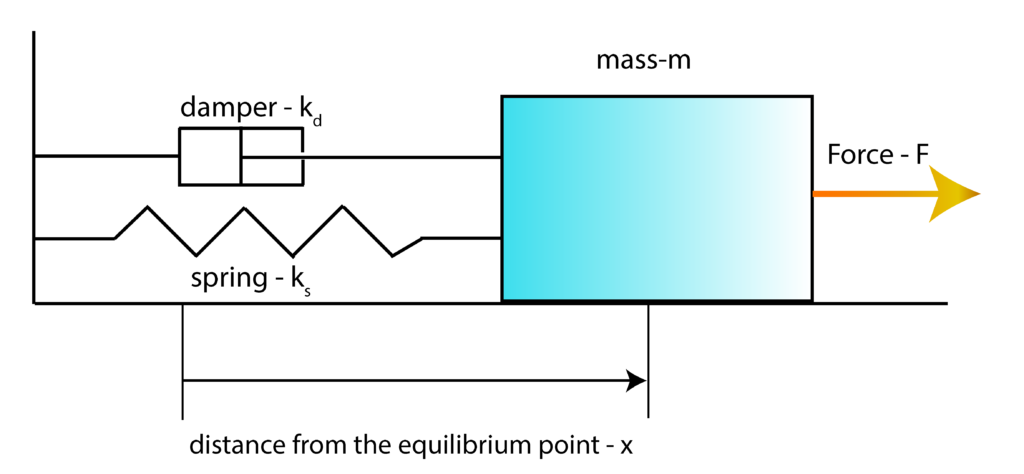

To explain the basic principles and ideas of the LQR algorithm, we use an example of a mass-spring-damper system that is introduced in our previous post, which can be found here. The sketch of the system is shown in the figure below

First, we introduce the state-space variables

(1) ![]()

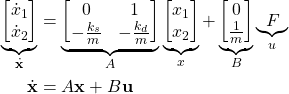

where ![]() . Then, by using these state-space variables and by using the differential equation describing the system dynamics (see our previous post), we obtain the state-space model

. Then, by using these state-space variables and by using the differential equation describing the system dynamics (see our previous post), we obtain the state-space model

(2)

Since in this post, we are considering state feedback, the output equation is not relevant. However, for completeness, we will write the output equation

(3)

In this particular post and lecture, we assume that the full state information is available at every time instant. That is, we assume that both ![]() and

and ![]() are available. Everything presented in this lecture can be generalized for the case in which only position

are available. Everything presented in this lecture can be generalized for the case in which only position ![]() is observed.

is observed.

The goal of the set point regulation is to steer the system to the desired state (desired set point) ![]() , starting from some non-zero initial state (that is in the general case different from

, starting from some non-zero initial state (that is in the general case different from ![]() ) and under a possible action of external disturbances.

) and under a possible action of external disturbances.

In this post, we will use the terms “desired state” and “desired set point” interchangeably. The first step is to determine the control input ![]() that will produce the desired state

that will produce the desired state ![]() in the steady-state. Since

in the steady-state. Since ![]() is constant, by substituting

is constant, by substituting ![]() by

by ![]() , and

, and ![]() by

by ![]() in (2), we obtain the following equation

in (2), we obtain the following equation

(4) ![]()

where ![]() since

since ![]() is a constant steady-state. Assuming that the matrix

is a constant steady-state. Assuming that the matrix ![]() has full column rank, from (4), we have that the desired control input

has full column rank, from (4), we have that the desired control input ![]() , producing the desired steady-state

, producing the desired steady-state ![]() , is given by

, is given by

(5) ![]()

In principle, we can control the system by applying the constant control input ![]() and by waiting for the system to settle down in the desired steady-state

and by waiting for the system to settle down in the desired steady-state ![]() . However, there are several issues with this type of control that can be exaggerated for undamped systems. Namely, the system might exhibit a large overshoot during its transient response, or oscillatory behavior. These phenomena can compromise system safety or performance. Furthermore, the system might be subjected to external disturbances that can seriously deteriorate the control accuracy and performance. These facts motivate the development of the set point feedback control algorithm. The idea is to improve the transient system response and disturbance rejection using a feedback control algorithm.

. However, there are several issues with this type of control that can be exaggerated for undamped systems. Namely, the system might exhibit a large overshoot during its transient response, or oscillatory behavior. These phenomena can compromise system safety or performance. Furthermore, the system might be subjected to external disturbances that can seriously deteriorate the control accuracy and performance. These facts motivate the development of the set point feedback control algorithm. The idea is to improve the transient system response and disturbance rejection using a feedback control algorithm.

To develop the control algorithm, we introduce the error variables as follows

(6) ![]()

By differentiating these expressions and by substituting (6) in (2), we obtain

(7) ![]()

Here it should be kept in mind that since ![]() is constant, we have

is constant, we have ![]() . By substituting this in (7), we obtain

. By substituting this in (7), we obtain

(8) ![]()

On the other hand, by substituting (4) in (8), we obtain

(9) ![]()

Having this form of dynamics, we can straightforwardly apply the LQR algorithm that is derived under the assumption of zero desired state. The LQR algorithm optimizes the following cost function

(10) ![]()

where

(11) ![]()

where ![]() is an identity matrix, and

is an identity matrix, and ![]() and

and ![]() are positive real numbers. The solution that optimizes the cost function (10) is the feedback control law of the following form

are positive real numbers. The solution that optimizes the cost function (10) is the feedback control law of the following form

(12) ![]()

where the matrix ![]() is defined by

is defined by

(13) ![]()

where ![]() is the solution of the algebraic matrix Riccati equation:

is the solution of the algebraic matrix Riccati equation:

(14) ![]()

Fortunately, we do not need to write our own code for solving the Riccati equation. We will use the MATLAB function lqr() to compute the control matrix ![]() . By substituting (12) in (9), we obtain the closed-loop system

. By substituting (12) in (9), we obtain the closed-loop system

(15) ![]()

The LQR control algorithm will make the matrix ![]() asymptotically stable (all the eigenvalues of A-BK are in the left-half of the complex plane). This means that the set-point state error

asymptotically stable (all the eigenvalues of A-BK are in the left-half of the complex plane). This means that the set-point state error ![]() will asymptotically approach zero. That is, in the steady-state, the system state

will asymptotically approach zero. That is, in the steady-state, the system state ![]() will become

will become ![]() . From (15) , we have

. From (15) , we have

(16) ![]()

The equation (16) can be used to simulate the closed loop system.

Taking into account the error variables (6), we can write the feedback control (12), as follows

(17) ![]()

We now proceed with defining the system in MATLAB and with simulations.

First, using the procedure explained in our previous post, we define the system state-space representation:

clear, pack, clc

% mass

m=10

% damping

kd=5

% spring constant

ks=10

%% state-space system representation

A=[0 1; -ks/m -kd/m];

B=[0; 1/m];

C=[1 0];

D=0;

sysSS=ss(A,B,C,D)

Then, we define the desired state, and we compute the steady-state control input using (5).

%% define the desired state

xd=[3;0];

%% compute ud

ud=-inv(B'*B)*B'*A*xd

The desired state is ![]() and

and ![]() . Next, for the computed

. Next, for the computed ![]() , we simulate the system response. We apply this constant control input and observe the state trajectory. The following code lines are used to simulate the system response.

, we simulate the system response. We apply this constant control input and observe the state trajectory. The following code lines are used to simulate the system response.

%% simulate the system response for the computed ud

% set initial condition

x0=1*randn(2,1);

%final simulation time

tFinal=30;

time_total=0:0.1:tFinal;

input_ud=ud*ones(size(time_total));

[output_ud_only,time_ud_only,state_ud_only] = lsim(sysSS,input_ud,time_total,x0);

figure(1)

plot(time_total,state_ud_only(:,1),'k')

hold on

plot(time_total,state_ud_only(:,2),'r')

xlabel('Time [s]')

ylabel('Position and velocity')

legend('Position','Velocity')

grid

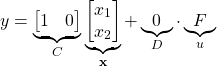

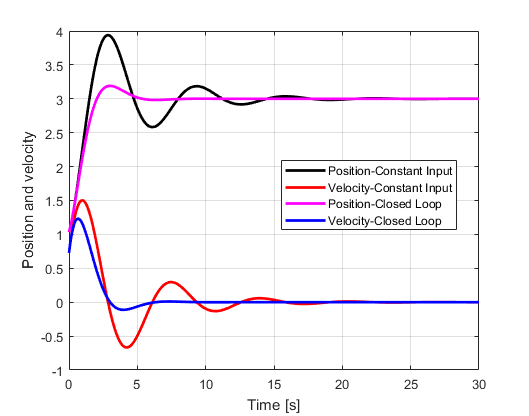

The figure below shows the simulated state trajectories. The position (![]() ) and velocity (

) and velocity (![]() ) start from an arbitrary initial condition that is set by the user. We observe that approximately after 25 seconds the position settles at 3 and velocity settles at 0. These are actually desired steady-states that are set by the user when computing

) start from an arbitrary initial condition that is set by the user. We observe that approximately after 25 seconds the position settles at 3 and velocity settles at 0. These are actually desired steady-states that are set by the user when computing ![]() . We see that the constant control input is able to steer the system’s state to the desired steady-state. However, we can also observe large overshoots. The LQR algorithm will have significant advantages over this control approach, if it is able to reduce the overshoots and to increase the control convergence speed.

. We see that the constant control input is able to steer the system’s state to the desired steady-state. However, we can also observe large overshoots. The LQR algorithm will have significant advantages over this control approach, if it is able to reduce the overshoots and to increase the control convergence speed.

The next goal is to simulate the LQR algorithm. We compute the control matrix ![]() using the MATLAB function lqr(). To compute the control matrix, we defined the weighting matrices

using the MATLAB function lqr(). To compute the control matrix, we defined the weighting matrices ![]() and

and ![]() as diagonal matrices. Finally, we have simulated the closed loop system. We have used the equation (16) to simulate the closed loop system. The code is given below.

as diagonal matrices. Finally, we have simulated the closed loop system. We have used the equation (16) to simulate the closed loop system. The code is given below.

%% simulate the LQR algorithm

Q= 10*eye(2,2);

R= 0.1;

N= zeros(2,1);

[K,S,e] = lqr(sysSS,Q,R,N);

% compute the eigenvalues of the closed loop system

eig(A-B*K)

% eigenvalues of the open-loop system

eig(A)

%simulate the closed loop system

% closed loop matrix

Acl= A-B*K;

Bcl=-Acl;

C=[1 0];

D=0;

sysSSClosedLoop=ss(Acl,Bcl,C,D)

closed_loop_input=[xd(1)*ones(size(time_total));

xd(2)*ones(size(time_total))];

[output_closed_loop,time_closed_loop,state_closed_loop] = lsim(sysSSClosedLoop,closed_loop_input,time_total,x0);

figure(2)

plot(time_total,state_ud_only(:,1),'k')

hold on

plot(time_total,state_ud_only(:,2),'r')

xlabel('Time [s]')

ylabel('Position and velocity')

plot(time_closed_loop,state_closed_loop(:,1),'m')

hold on

plot(time_closed_loop,state_closed_loop(:,2),'b')

legend('Position-Constant Input ','Velocity-Constant Input','Position-Closed Loop ','Velocity-Closed Loop')

grid

Here, we compare the state trajectories of the closed loop system (LQR algorithm) with the state trajectories of the system for the constant steady-state input. This is done to investigate the performance gain obtained by using the LQR algorithm.

From Fig. 3., we can clearly observe the advantages of the LQR algorithm. We can observe that the overshoot is decreased and that the convergence is much faster compared to the system that is controlled using the steady-state control input. Also, the eigenvalues of the matrix A are

-0.2500 + 0.9682i

-0.2500 – 0.9682i

And the eigenvalues of the closed-loop matrix A-BK are

-0.7208 + 0.9458i

-0.7208 – 0.9458i

Since the real parts of the eigenvalues of the closed-loop matrix are almost 3 times further left in the complex plane compared to the eigenvalues of the open-loop system, we can see that the closed-loop system has much more favorable poles from the stability point of view compared to the poles of the open-loop system.