In our previous tutorial, which can be found here, we explained how to solve unconstrained optimization problems in Python by using the SciPy library and the minimize() function. In this post, we explain how to solve constrained optimization problems by using a similar approach.

The YouTube video accompanying this post is given below.

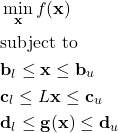

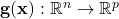

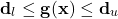

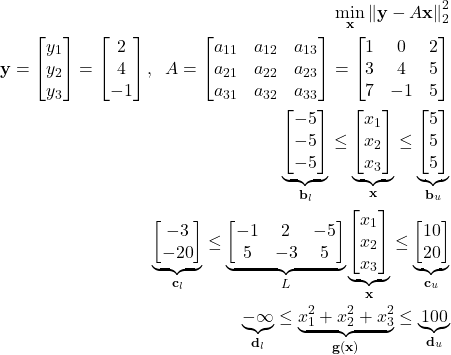

We use the trust-region constrained method to solve constrained minimization problems. This method assumes that the problems have the following form:

(1)

where

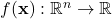

is the n-dimensional vector of optimization variables

is the n-dimensional vector of optimization variables is the cost function

is the cost function-

are the vectors of lower and upper bounds on the optimization variable

are the vectors of lower and upper bounds on the optimization variable

-

are the vectors of lower and upper bounds on the linear term

are the vectors of lower and upper bounds on the linear term  , where

, where  is a constant matrix of parameters. The compact notation

is a constant matrix of parameters. The compact notation(2)

represents a set of linear “less than or equal to” constraints.

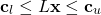

linear “less than or equal to” constraints.  are the vectors of lower and upper bounds on the nonlinear vector function

are the vectors of lower and upper bounds on the nonlinear vector function  . The compact notation

. The compact notation(3)

represents a set of “p” nonlinear “less than or equal to” constraints.

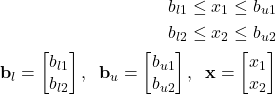

Here are a few comments about the above-presented generic problem. All the less-than or equal to inequalities are interpreted element-wise. For example, the notation

(4) ![]()

is actually a shorthand notation for

(5)

where for simplicity, we assumed ![]() .

Then, the one-sided less than or equal to inequalities of the following form

(6) ![]()

are formally written as follows

(7)

In a similar manner, we can formally write other types of one-sided less than or equal to inequalities.
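As a concrete illustration of the two points above, here is a small worked example. The symbols $\mathbf{x}$, $\mathbf{x}_{L}$, and $\mathbf{x}_{U}$ and the choice of two variables are assumptions made for this illustration only; they are not taken from the numbered equations.

```latex
% Element-wise reading of a two-sided vector inequality (assumed n = 2)
\begin{aligned}
\mathbf{x}_{L} \le \mathbf{x} \le \mathbf{x}_{U}
\quad &\Longleftrightarrow \quad
\begin{cases}
x_{L,1} \le x_{1} \le x_{U,1} \\
x_{L,2} \le x_{2} \le x_{U,2}
\end{cases} \\[1ex]
% A one-sided inequality written in the two-sided form by using -infinity
\mathbf{x} \le \mathbf{x}_{U}
\quad &\Longleftrightarrow \quad
\begin{bmatrix} -\infty \\ -\infty \end{bmatrix}
\le \mathbf{x} \le \mathbf{x}_{U}
\end{aligned}
```

The Python code later in this post relies on the same convention when it sets lower bounds to minus infinity by using np.inf.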

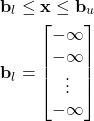

In order to illustrate how to transform particular problems into the above general form, we consider the following optimization problem:

(8)

This problem can be compactly written as follows

(9)

where $\|\cdot\|_{2}$ denotes the vector 2-norm. The cost function is actually a least-squares cost function.
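The step from individual scalar inequalities to the two-sided matrix form can be sketched in a few lines of NumPy. The two constraints, the names C, lb, ub, and the helper satisfies_linear() are hypothetical and chosen only for illustration; they are not the coefficients of the problem above.

```python
import numpy as np

# Hypothetical scalar constraints (not the coefficients used in this post):
#   x1 + 2*x2 <= 4
#   x1 -   x2 >= -1
# Written in the two-sided form lb <= C @ x <= ub, one row per constraint.
C = np.array([[1.0, 2.0],
              [1.0, -1.0]])
lb = np.array([-np.inf, -1.0])   # no lower limit on the first constraint
ub = np.array([4.0, np.inf])     # no upper limit on the second constraint

def satisfies_linear(x):
    """Return True if lb <= C @ x <= ub holds element-wise."""
    value = C @ x
    return bool(np.all(lb <= value) and np.all(value <= ub))

feasible_point = np.array([1.0, 1.0])     # C @ x = [3, 0]
infeasible_point = np.array([4.0, 1.0])   # C @ x = [6, 3], violates the first row

print(satisfies_linear(feasible_point))    # True
print(satisfies_linear(infeasible_point))  # False
```

A missing side of an inequality is filled with plus or minus infinity, so every row has both a lower and an upper bound.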

Now that we know how to transform problems into the general form, we can proceed with the Python implementation. First, we import the necessary libraries and functions:

```python
import numpy as np
import scipy
from scipy.optimize import minimize
from scipy.optimize import Bounds
from scipy.optimize import LinearConstraint
from scipy.optimize import NonlinearConstraint
```

We use the SciPy library, namely the function minimize() and the classes Bounds, LinearConstraint, and NonlinearConstraint, to define and solve the problem. They are explained in the sequel. Before that, we need to construct our problem. Here, for simplicity of the implementation, we will not use the same coefficients as the coefficients used in (9). However, the structure of the problem is the same. We construct the problem with a random vector Y and a random matrix A:

```python
# define a random matrix A that is our data matrix
A = np.random.randn(3, 3)
# select the right-hand side
Y = np.random.randn(3, 1)
```
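Because A and Y are drawn at random, every run solves a different problem. If reproducible numbers are wanted, one option is to draw the data from a seeded generator. This is only a sketch and not part of the original code; the helper name make_problem() and the seed value are hypothetical.

```python
import numpy as np

def make_problem(seed=0):
    """Draw a 3x3 data matrix and a 3x1 right-hand side from a seeded generator."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((3, 3))
    Y = rng.standard_normal((3, 1))
    return A, Y

A, Y = make_problem(seed=0)
A_again, Y_again = make_problem(seed=0)

# The same seed reproduces the same problem data
print(np.array_equal(A, A_again), np.array_equal(Y, Y_again))
```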

Next, we need to define the cost function. We define the cost function as the following Python function

```python
# define the cost function
def costFunction(x, Amatrix, Yvector):
    error = Yvector - np.matmul(Amatrix, x)
    returnValue = np.linalg.norm(error, 2)**2
    return returnValue
```

For given values of the optimization variable “x”, the matrix A, and the vector Y, this function returns the value of the cost function.
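As a quick sanity check of the cost function, the sketch below compares it with the plain sum of squared residuals and tests a hand-derived gradient against finite differences. The function costGradient() and the test data are assumptions added here for illustration. The vector Y is kept one-dimensional, because subtracting a length-3 array from a 3x1 array would broadcast to a 3x3 array.

```python
import numpy as np
from scipy.optimize import check_grad

def costFunction(x, Amatrix, Yvector):
    error = Yvector - np.matmul(Amatrix, x)
    return np.linalg.norm(error, 2)**2

def costGradient(x, Amatrix, Yvector):
    """Analytical gradient of ||Y - A x||^2, namely -2 A^T (Y - A x)."""
    return -2.0 * Amatrix.T @ (Yvector - Amatrix @ x)

# Hypothetical test data with a one-dimensional Y
rng = np.random.default_rng(1)
A = rng.standard_normal((3, 3))
Y = rng.standard_normal(3)
x = rng.standard_normal(3)

# The squared 2-norm equals the sum of squared residuals
sum_of_squares = np.sum((Y - A @ x)**2)
print(np.isclose(costFunction(x, A, Y), sum_of_squares))

# check_grad returns the norm of the difference between the analytical
# gradient and a finite-difference approximation; it should be small
gradient_error = check_grad(costFunction, costGradient, x, A, Y)
print(gradient_error)
```

If the check passes, such a gradient function could be supplied to minimize() through its jac argument instead of relying on finite differences.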

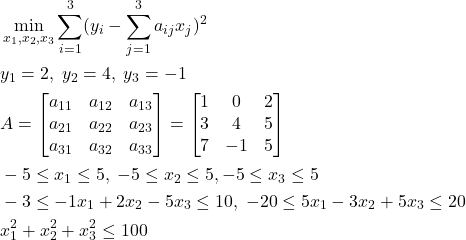

Next, we define the bounds, linear and nonlinear constraints:

```python
# define the bounds
lowerBounds = -1*np.inf*np.ones(3)
upperBounds = 10*np.ones(3)
boundData = Bounds(lowerBounds, upperBounds)

# define the linear constraints
lowerBoundLinearConstraints = -1*np.inf*np.ones(3)
upperBoundLinearConstraints = 30*np.ones(3)
matrixLinearConstraints = np.random.randn(3, 3)
linearConstraints = LinearConstraint(matrixLinearConstraints, lowerBoundLinearConstraints, upperBoundLinearConstraints)

# define the nonlinear constraints
lowerBoundNonLinearConstraints = 0
upperBoundNonLinearConstraints = 100
def nonlinearConstraintMiddle(x):
    return x[0]**2 + x[1]**2 + x[2]**2

nonlinearConstraints = NonlinearConstraint(nonlinearConstraintMiddle, lowerBoundNonLinearConstraints, upperBoundNonLinearConstraints)
```

The bounds on the optimization variable x are specified by Bounds() on code line 4 (boundData). It accepts two arguments: the lower and upper bounds. The linear constraints are defined on code line 10 by using LinearConstraint(). Its first argument is the constraint matrix (matrixLinearConstraints), and the second and third arguments are the lower and upper bounds. The nonlinear constraints are defined on code lines 12 to 18. The nonlinear function (nonlinearConstraintMiddle) is defined on code lines 15 to 16. The nonlinear constraints are specified by NonlinearConstraint() on code line 18. Its first argument is the name of the function defining the nonlinear vector function, and the second and third arguments are the lower and upper bounds.
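Before calling the solver, it can be useful to test whether a candidate point satisfies all three constraint types. The sketch below does that by reading the lb, ub, A, and fun attributes of the constraint objects. The helper isFeasible(), its tolerance, and the fixed identity matrix used for the linear constraints are hypothetical choices made for this illustration.

```python
import numpy as np
from scipy.optimize import Bounds, LinearConstraint, NonlinearConstraint

def isFeasible(x, boundData, linearConstraints, nonlinearConstraints, tol=1e-8):
    """Check a point against the bounds and the linear and nonlinear constraints."""
    def inside(value, lower, upper):
        value = np.atleast_1d(value)
        return bool(np.all(value >= lower - tol) and np.all(value <= upper + tol))

    boundsOk = inside(x, boundData.lb, boundData.ub)
    linearOk = inside(linearConstraints.A @ x, linearConstraints.lb, linearConstraints.ub)
    nonlinearOk = inside(nonlinearConstraints.fun(x), nonlinearConstraints.lb, nonlinearConstraints.ub)
    return boundsOk and linearOk and nonlinearOk

# Same bound values as in the post, with a fixed (hypothetical) constraint matrix
boundData = Bounds(-np.inf*np.ones(3), 10*np.ones(3))
linearConstraints = LinearConstraint(np.eye(3), -np.inf*np.ones(3), 30*np.ones(3))
nonlinearConstraints = NonlinearConstraint(lambda x: x[0]**2 + x[1]**2 + x[2]**2, 0, 100)

insidePoint = np.array([1.0, 2.0, 3.0])    # sum of squares is 14
outsidePoint = np.array([9.0, 9.0, 9.0])   # within the bounds, but sum of squares is 243

print(isFeasible(insidePoint, boundData, linearConstraints, nonlinearConstraints))   # True
print(isFeasible(outsidePoint, boundData, linearConstraints, nonlinearConstraints))  # False
```

The second point shows that a point can respect the bounds on every variable and still violate the nonlinear constraint.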

Finally, we can specify an initial guess of the solution and solve the optimization problem

```python
## solve the optimization problem

# select the initial guess of the solution
x0 = np.random.randn(3)

# see all the options for the solver
scipy.optimize.show_options(solver='minimize', method='trust-constr')

# solve the problem
result = minimize(costFunction, x0, method='trust-constr',
                  constraints=[linearConstraints, nonlinearConstraints],
                  args=(A, np.reshape(Y, (3,))), options={'verbose': 1}, bounds=boundData)

# here is the solution
result.x
```

We need an initial guess of the solution since we are using an iterative optimization method. The initial guess is specified on code line 4. In our case, we use the Trust Region Constrained algorithm, which is specified by ‘trust-constr’. We can see all the options for the solver by using the function scipy.optimize.show_options(). Its output lists the parameters that we can adjust in order to achieve better accuracy or to increase the number of iterations or function evaluations. We solve the problem by using the function minimize() on code line 10. The first argument is the name of the cost function. The second argument is the initial guess of the solution. The method is specified as the third argument. The constraints are specified as a list, and this is the fourth argument. The fifth argument contains the parameters that are sent to the cost function. These parameters are the matrix A and the vector Y, where Y is reshaped into a one-dimensional array. The sixth argument is a dictionary containing the solver options, and the last argument contains the bounds. The minimize() function returns the structure “result”. The solution can be accessed by typing result.x.
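Besides result.x, the returned structure carries other fields that help judge the quality of the solution. The sketch below is a self-contained run on fixed, hypothetical data (the matrix A, the vector xTrue, and the identity constraint matrix are assumptions for illustration). The data are chosen so that the unconstrained least-squares minimizer xTrue also satisfies every constraint, which makes the expected answer known in advance.

```python
import numpy as np
from scipy.optimize import minimize, Bounds, LinearConstraint, NonlinearConstraint

def costFunction(x, Amatrix, Yvector):
    error = Yvector - np.matmul(Amatrix, x)
    return np.linalg.norm(error, 2)**2

# Hypothetical fixed data: the unconstrained minimizer is xTrue,
# which also satisfies every constraint below
A = np.array([[2.0, 0.0, 1.0],
              [0.0, 3.0, 0.0],
              [1.0, 0.0, 2.0]])
xTrue = np.array([1.0, 2.0, 3.0])
Y = A @ xTrue

boundData = Bounds(-np.inf*np.ones(3), 10*np.ones(3))
linearConstraints = LinearConstraint(np.eye(3), -np.inf*np.ones(3), 30*np.ones(3))
nonlinearConstraints = NonlinearConstraint(lambda x: x[0]**2 + x[1]**2 + x[2]**2, 0, 100)

x0 = np.ones(3)
result = minimize(costFunction, x0, method='trust-constr',
                  constraints=[linearConstraints, nonlinearConstraints],
                  args=(A, Y), bounds=boundData)

print(result.x)                 # computed minimizer
print(result.fun)               # cost at the minimizer
print(result.constr_violation)  # maximum constraint violation
print(result.status, result.message)
```

On this problem the computed result.x should be close to xTrue, and result.constr_violation should be close to zero. For the random problem used in the post, the same fields can be inspected, but the true solution is not known in advance.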