Introduction

In this post and tutorial, we provide an introduction to Subspace Identification (SI) methods and we develop codes that can be used to effectively estimate Multiple Input -Multiple Output (MIMO) state-space models of dynamical systems. SI methods are effective system identification methods for the estimation of linear MIMO state-space models. There are also a number of extensions of SI methods that can be used for the estimation of nonlinear state-space models. For a relatively broad class of linear state-space problems, subspace identification algorithms perform exceptionally well and outperform most of the algorithms including algorithms based on neural networks.

In contrast to most of the other posts and online lectures, we do not blur the main ideas of the SI algorithm with unnecessary mathematical perplexities and details. Furthermore, to better explain the algorithm, we introduce a model of a physical system and we introduce and provide detailed explanations of Python codes. The YouTube video tutorial accompanying this webpage is given below.

For the impatient, the codes developed and explained in this tutorial can be found here, on my GitHub page.

There are a number of variants of SI methods. In this post, we provide a detailed explanation of a relatively simple SI algorithm that can be used to estimate state-space models of dynamical systems by performing a few relatively simple numerical steps. The main advantage of SI algorithms is that they do not rely upon iterative optimization methods. Instead, they compute estimates of state-space matrices using a least-squares method and using numerical linear algebra methods and algorithms, such as the Singular Value Decomposition (SVD) algorithm, QR decomposition, LU decomposition, etc. The variant of the algorithm that I am going to explain is summarized in this paper (you can cite the paper below if you want to cite this post in your work)

- Haber, Aleksandar. “Subspace identification of temperature dynamics.” arXiv preprint arXiv:1908.02379 (2019).

- Haber, A., Pecora, F., Chowdhury, M. U., & Summerville, M. (2019, October). Identification of temperature dynamics using subspace and machine learning techniques. In Dynamic Systems and Control Conference (Vol. 59155, p. V002T24A003). American Society of Mechanical Engineers.

We start our discussion with an explanation of the class of models that we want to estimate. We consider a linear state-space model having the following form

(1) ![]()

where ![]() is the discrete time instant,

is the discrete time instant, ![]() is the system state,

is the system state, ![]() is the state order,

is the state order, ![]() is the control input,

is the control input, ![]() is the observed output,

is the observed output, ![]() is the process disturbance,

is the process disturbance, ![]() is the measurement noise,

is the measurement noise, ![]() ,

, ![]() , and

, and ![]() are the system matrices.

are the system matrices.

The vectors ![]() and

and ![]() are usually unknown or only their statistics are known. Under several technical assumptions on the statistics of the vectors

are usually unknown or only their statistics are known. Under several technical assumptions on the statistics of the vectors ![]() and

and ![]() that for simplicity and brevity we do not explain in this post, with the system (1) we can associate another state-space model having the following form

that for simplicity and brevity we do not explain in this post, with the system (1) we can associate another state-space model having the following form

(2) ![]()

where ![]() and

and ![]() . The state-space model (2) is often referred to as the Kalman innovation state-space model. The vector

. The state-space model (2) is often referred to as the Kalman innovation state-space model. The vector ![]() and the matrix

and the matrix ![]() model the effect of the process disturbance and the measurement noise on the system dynamics and the system output. The identification problem can now be formulated as follows.

model the effect of the process disturbance and the measurement noise on the system dynamics and the system output. The identification problem can now be formulated as follows.

From the set of input-output data ![]() estimate the model order

estimate the model order ![]() and the system matrices

and the system matrices ![]() and

and ![]() of the state-space model (2).

of the state-space model (2).

It should be emphasized that once the matrices ![]() , and

, and ![]() are estimated, we can disregard the matrix

are estimated, we can disregard the matrix ![]() if we want to work with the model (1).

if we want to work with the model (1).

Before we start with the explanation of the SI algorithm, we first introduce a model and codes that are used for the simulation of its response.

Test Model – System of Two Objects Connected by Springs and Dampers

Mass-spring systems are often used as models of a number of physical systems. For example, a car suspension system can effectively be modeled by a mass-spring-damper system. Also, electrical circuits, composed of resistors, inductors, and capacitors (RLC) circuits, have mechanical equivalents that can be physically realized using objects with different masses, springs, and dampers. For these reasons, we focus on mathematical models of mass-spring-damper systems.

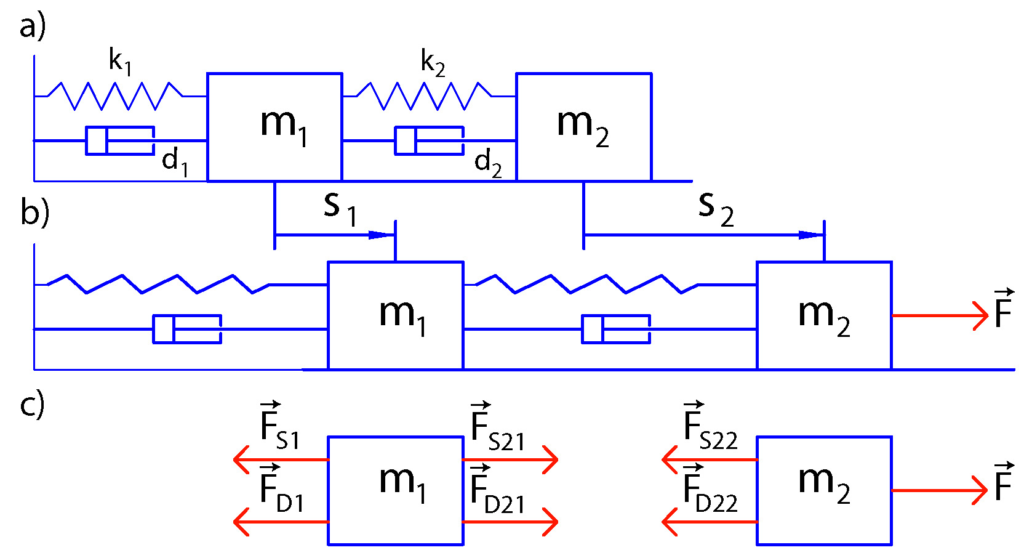

The sketch of the system is shown in Fig.1(a) below. The system consists of two objects of the masses ![]() and

and ![]() connected by springs and dampers. In addition, the object 1 is attached to the wall by a spring and a damper. Figure 2(b) shows the situation when the force

connected by springs and dampers. In addition, the object 1 is attached to the wall by a spring and a damper. Figure 2(b) shows the situation when the force ![]() is acting on the second object. The displacements of the objects

is acting on the second object. The displacements of the objects ![]() and

and ![]() from their equilibrium positions are denoted by

from their equilibrium positions are denoted by ![]() and

and ![]() , respectively.

, respectively.

is acting on the second body. (c) Free body diagrams of the system.

is acting on the second body. (c) Free body diagrams of the system.Figure 1(c) shows the free-body diagrams of the system. The force ![]() is the force that the spring 1 is exerting on the body 1. The magnitude of this force is given by

is the force that the spring 1 is exerting on the body 1. The magnitude of this force is given by ![]() , where

, where ![]() is the spring constant. The force

is the spring constant. The force ![]() is the force that the damper 1 is exerting on the body 1. The magnitude of this force is given by

is the force that the damper 1 is exerting on the body 1. The magnitude of this force is given by ![]() , where

, where ![]() is the damper constant and

is the damper constant and ![]() is the first derivative of the displacement (velocity of the body 1). The force

is the first derivative of the displacement (velocity of the body 1). The force ![]() is the force that the spring 2 is exerting on the body 1. The magnitude of this force is given by

is the force that the spring 2 is exerting on the body 1. The magnitude of this force is given by ![]() , where

, where ![]() is the spring constant. The force

is the spring constant. The force ![]() is the force that the damper 2 is exerting on the body 1. The magnitude of this force is given by

is the force that the damper 2 is exerting on the body 1. The magnitude of this force is given by ![]() , where

, where ![]() is the damper constant.

is the damper constant.

The force ![]() is the force that the spring 2 is exerting on the body 2. The force

is the force that the spring 2 is exerting on the body 2. The force ![]() is the force that the damper 2 is exerting on the body 2.

is the force that the damper 2 is exerting on the body 2.

We assume that the masses of springs and dampers are much smaller than the masses of the objects and consequently, they can be neglected. Consequently, we have ![]() and

and ![]() .

.

The next step is to apply Newton’s second law:

(3) ![]()

Then, we introduce the state-space variables

(4) ![]()

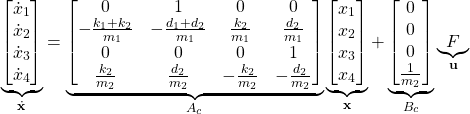

By substituting the force expressions in (3) and by using (4) we arrive at the state-space model

(5)

We assume that only the position of the first object can be directly observed. This can be achieved using for example, infrared distance sensors. Under this assumption, the output equation has the following form

(6) ![]()

The next step is to transform the state-space model (5)-(6) in the discrete-time domain. Due to its simplicity and good stability properties, we use the Backward Euler method to perform the discretization. This method approximates the state derivative as follows

(7) ![]()

where ![]() is the discretization constant. For simplicity, we assumed that the discrete-time index of the input is

is the discretization constant. For simplicity, we assumed that the discrete-time index of the input is ![]() . We can also assume that the discrete-time index of input is

. We can also assume that the discrete-time index of input is ![]() if we want. However, then, when simulating the system dynamics we will start from

if we want. However, then, when simulating the system dynamics we will start from ![]() . From the last equation, we have

. From the last equation, we have

(8) ![]()

where ![]() and

and ![]() . On the other hand, the output equation remains unchanged

. On the other hand, the output equation remains unchanged

(9) ![]()

In the sequel, we present a Python code for discretizing the system and computing the system response to the control input signals. This code will be used to generate identification and validation data.

import numpy as np

import matplotlib.pyplot as plt

from numpy.linalg import inv

# define the system parameters

m1=20 ; m2=20 ; k1=1000 ; k2=2000 ; d1=1 ; d2=5;

# define the continuous-time system matrices

Ac=np.matrix([[0, 1, 0, 0],[-(k1+k2)/m1 , -(d1+d2)/m1 , k2/m1 , d2/m1 ], [0 , 0 , 0 , 1], [k2/m2, d2/m2, -k2/m2, -d2/m2]])

Bc=np.matrix([[0],[0],[0],[1/m2]])

Cc=np.matrix([[1, 0, 0, 0]])

#define an initial state for simulation

#x0=np.random.rand(2,1)

x0=np.zeros(shape=(4,1))

#define the number of time-samples used for the simulation and the sampling time for the discretization

time=300

sampling=0.05

#define an input sequence for the simulation

#input_seq=np.random.rand(time,1)

input_seq=5*np.ones(time)

#plt.plot(input_sequence)

I=np.identity(Ac.shape[0]) # this is an identity matrix

A=inv(I-sampling*Ac)

B=A*sampling*Bc

C=Cc

# check the eigenvalues

eigen_A=np.linalg.eig(Ac)[0]

eigen_Aid=np.linalg.eig(A)[0]

# the following function simulates the state-space model using the backward Euler method

# the input parameters are:

# -- Ad,Bd,Cd - discrete-time system matrices

# -- initial_state - the initial state of the system

# -- time_steps - the total number of simulation time steps

# this function returns the state sequence and the output sequence

# they are stored in the matrices Xd and Yd respectively

def simulate(Ad,Bd,Cd,initial_state,input_sequence, time_steps):

Xd=np.zeros(shape=(A.shape[0],time_steps+1))

Yd=np.zeros(shape=(C.shape[0],time_steps+1))

for i in range(0,time_steps):

if i==0:

Xd[:,[i]]=initial_state

Yd[:,[i]]=C*initial_state

x=Ad*initial_state+Bd*input_sequence[i]

else:

Xd[:,[i]]=x

Yd[:,[i]]=C*x

x=Ad*x+Bd*input_sequence[i]

Xd[:,[-1]]=x

Yd[:,[-1]]=C*x

return Xd, Yd

state,output=simulate(A,B,C,x0,input_seq, time)

plt.plot(output[0,:])

plt.xlabel('Discrete time instant-k')

plt.ylabel('Position- d')

plt.title('System response')

plt.savefig('step_response1.png')

plt.show()

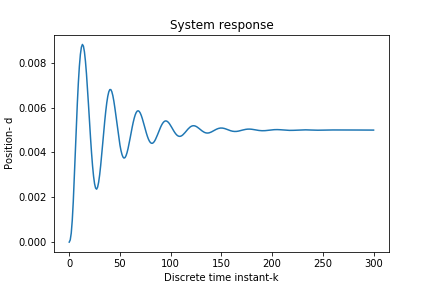

A few comments are in order. We generate a step control signal. The step response of the system is shown in the figure below. From the step response, we can see that the system is lightly damped. Consequently, due to the complex system poles (in the continuous-time domain), the identification of such a system is far from trivial.

Next, we provide a detailed explanation of the subspace identification method.

Step-by-step Explanation of the Subspace Identification Algorithm

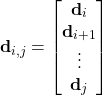

To simplify the derivations and for brevity, we first introduce the following notation.

Let ![]() be an arbitrary

be an arbitrary ![]() -dimensional vector, where

-dimensional vector, where ![]() denotes the discrete-time instant. The notation

denotes the discrete-time instant. The notation ![]() denotes a

denotes a ![]() -dimensional vector defined as follows

-dimensional vector defined as follows

(10)

That is, the vector ![]() is formed by stacking the vectors

is formed by stacking the vectors ![]() on top of each other for an increasing time instant. This procedure is known as the “lifting procedure”.

on top of each other for an increasing time instant. This procedure is known as the “lifting procedure”.

Using a similar procedure, we define a “data matrix” ![]() as follows

as follows

(11) ![]()

That is, the matrix ![]() is formed by stacking the vectors

is formed by stacking the vectors ![]() and by shifting the time index

and by shifting the time index ![]() . Next, we need to define an operator that extracts rows or columns of a matrix.

. Next, we need to define an operator that extracts rows or columns of a matrix.

Let ![]() be an arbitrary matrix. The notation

be an arbitrary matrix. The notation ![]() denotes a new matrix that is created from the rows

denotes a new matrix that is created from the rows ![]() of the matrix

of the matrix ![]() (without row permutations).

(without row permutations).

Similarly, the notation ![]() stands for a matrix constructed from the corresponding columns of the matrix

stands for a matrix constructed from the corresponding columns of the matrix ![]() . This notation is inspired by the standard MATLAB notation which is also used in the Python programming language.

. This notation is inspired by the standard MATLAB notation which is also used in the Python programming language.

Step 1: Estimation of the VARX model or the System Markov Parameters

The abbreviation VARX, stands for Vector Auto-Regressive eXogenous. From the output equation of (2), we can express the innovation vector ![]() :

:

(12) ![]()

By substituting this expression in the state equation of the model (2), we obtain the following equation

(13) ![]()

The last equation can be written compactly as follows

(14) ![]()

where

(15) ![]()

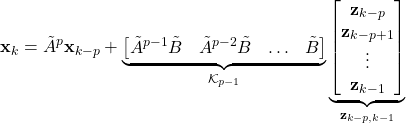

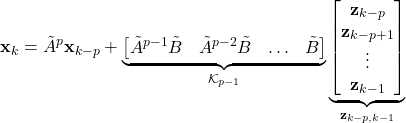

For the development of the SI algorithm, it is important to introduce the parameter ![]() which is referred to as the “past window”. Starting from the time instant

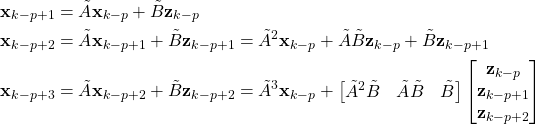

which is referred to as the “past window”. Starting from the time instant ![]() , and by performing recursive substitutions of the equation (14), we obtain

, and by performing recursive substitutions of the equation (14), we obtain

(16)

Following this procedure, we obtain

(17)

By multiplying (17) from left by ![]() and by using the output equation(2), we can obtain

and by using the output equation(2), we can obtain

(18) ![]()

where

(19) ![]()

The matrix ![]() is the matrix of “Markov parameters”. Due to the fact that the matrix

is the matrix of “Markov parameters”. Due to the fact that the matrix ![]() is stable (even if the matrix

is stable (even if the matrix ![]() is unstable, under some relatively mild assumptions we can determine the matrix

is unstable, under some relatively mild assumptions we can determine the matrix ![]() such that the matrix

such that the matrix ![]() is stable), we have

is stable), we have

(20) ![]()

for sufficiently large ![]() . By substituting (20) in (18), we obtain

. By substituting (20) in (18), we obtain

(21) ![]()

This equation represents VARX model. Namely, the system output at the time instant ![]() depends on the past inputs and outputs contained in the vector

depends on the past inputs and outputs contained in the vector ![]() . The first step in the SI algorithm is to estimate the Markov parameters of the VARX model. That is, to estimate the matrix

. The first step in the SI algorithm is to estimate the Markov parameters of the VARX model. That is, to estimate the matrix ![]() .

.

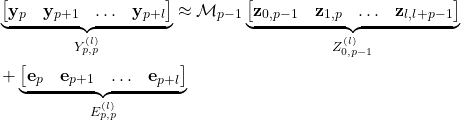

This can be achieved by solving a least-squares problem. Namely, from (21), we can write:

(22)

On the basis of the last equation, we can form the least-squares problem

(23) ![]()

where ![]() is the Frobenius matrix norm. The solution is given by

is the Frobenius matrix norm. The solution is given by

(24) ![]()

Next, we present the Python code to estimate the Markov parameters.

###############################################################################

# This function estimates the Markov parameters of the state-space model:

# x_{k+1} = A x_{k} + B u_{k} + Ke(k)

# y_{k} = C x_{k} + e(k)

# The function returns the matrix of the Markov parameters of the model

# Input parameters:

# "U" - is the input vector of the form U \in mathbb{R}^{m \times timeSteps}

# "Y" - is the output vector of the form Y \in mathbb{R}^{r \times timeSteps}

# "past" is the past horizon

# Output parameters:

# The problem beeing solved is

# min_{M_pm1} || Y_p_p_l - M_pm1 Z_0_pm1_l ||_{F}^{2}

# " M_pm1" - matrix of the Markov parameters

# "Z_0_pm1_l" - data matrix used to estimate the Markov parameters,

# this is an input parameter for the "estimateModel()" function

# "Y_p_p_l" is the right-hand side

def estimateMarkovParameters(U,Y,past):

import numpy as np

import scipy

from scipy import linalg

timeSteps=U.shape[1]

m=U.shape[0]

r=Y.shape[0]

l=timeSteps-past-1

# data matrices for estimating the Markov parameters

Y_p_p_l=np.zeros(shape=(r,l+1))

Z_0_pm1_l=np.zeros(shape=((m+r)*past,l+1)) # - returned

# the estimated matrix that is returned as the output of the function

M_pm1=np.zeros(shape=(r,(r+m)*past)) # -returned

# form the matrices "Y_p_p_l" and "Z_0_pm1_l"

# iterate through columns

for j in range(l+1):

# iterate through rows

for i in range(past):

Z_0_pm1_l[i*(m+r):i*(m+r)+m,j]=U[:,i+j]

Z_0_pm1_l[i*(m+r)+m:i*(m+r)+m+r,j]=Y[:,i+j]

Y_p_p_l[:,j]=Y[:,j+past]

# numpy.linalg.lstsq

#M_pm1=scipy.linalg.lstsq(Z_0_pm1_l.T,Y_p_p_l.T)

M_pm1=np.matmul(Y_p_p_l,linalg.pinv(Z_0_pm1_l))

return M_pm1, Z_0_pm1_l, Y_p_p_l

###############################################################################

# end of function

###############################################################################

To estimate the Markov parameters, we need to select the past window ![]() . The problem of optimal selection of the past window is an important problem, and we will deal with this problem in our next post.

. The problem of optimal selection of the past window is an important problem, and we will deal with this problem in our next post.

Step 2: Estimation of the State-Sequence

Once the Markov parameters are estimated, we can proceed with the estimation of the state sequence. The main idea is that if we would know the state sequence ![]() , then we would be able to estimate the system matrices

, then we would be able to estimate the system matrices ![]() and

and ![]() of the state-space model (2) using a simple least-squares method.

of the state-space model (2) using a simple least-squares method.

Let us write again the equations (17) and (20)

(25)

(26) ![]()

From these two equations, we have

(27) ![]()

Besides the past window ![]() , another user-selected parameter is defined in the SI algorithm. This parameter is called the future window and is usually denoted by

, another user-selected parameter is defined in the SI algorithm. This parameter is called the future window and is usually denoted by ![]() .

.

The future window needs to satisfy the following condition ![]() . For a selected value of the future window

. For a selected value of the future window ![]() , we define the following matrix

, we define the following matrix

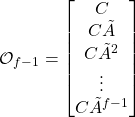

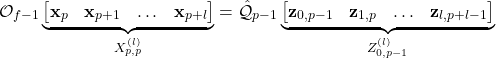

(28)

By multiplying the equation (27) with ![]() , we obtain

, we obtain

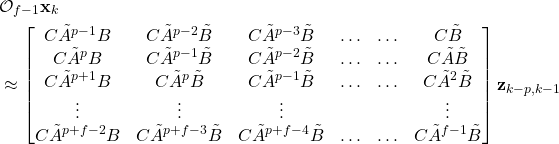

(29)

Taking the assumption (20) into account, from (29), we have

(30)

Now, let us analyze the matrix ![]() in (30). Its first block row is the matrix

in (30). Its first block row is the matrix ![]() . Its second block row is the matrix composed of a block zero matrix and the matrix

. Its second block row is the matrix composed of a block zero matrix and the matrix ![]() in which the last block has been erased, etc. That is, the matrix

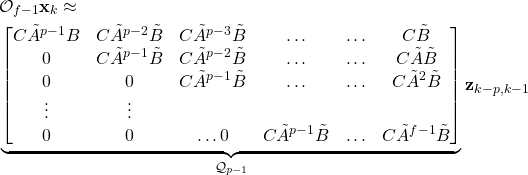

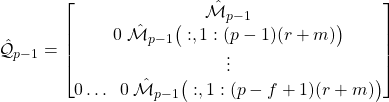

in which the last block has been erased, etc. That is, the matrix ![]() can be completely reconstructed using the Markov parameter matrix. Let

can be completely reconstructed using the Markov parameter matrix. Let ![]() denote the matrix constructed on the basis of the estimated matrix

denote the matrix constructed on the basis of the estimated matrix ![]() . This matrix is formally defined as follows:

. This matrix is formally defined as follows:

(31)

On the basis of the equations (30) and (31), and by iterating the index ![]() from

from ![]() to

to ![]() , we obtain

, we obtain

(32)

Now, let us analyze the equation (32). We are interested in obtaining an estimate of the matrix ![]() that represents the state sequence. That is, we want to obtain an estimate of the state sequence such that in the later stage we can use this estimate to determine the system matrices. The left-hand side of the equation is completely unknown and the right-hand side is known since we have estimated the matrix

that represents the state sequence. That is, we want to obtain an estimate of the state sequence such that in the later stage we can use this estimate to determine the system matrices. The left-hand side of the equation is completely unknown and the right-hand side is known since we have estimated the matrix ![]() . If we would know the matrix

. If we would know the matrix ![]() , then we would be able to solve this equation for

, then we would be able to solve this equation for ![]() . However, we do not know this matrix and it seems that our efforts to estimate the model are doomed to fail.

. However, we do not know this matrix and it seems that our efforts to estimate the model are doomed to fail.

Now, here comes the magic of numerical linear algebra! Under some assumptions, from the equation (32) it follows that we can obtain an estimate of the matrix ![]() by computing a row space of the matrix

by computing a row space of the matrix ![]() . The row space can be computed by computing the singular value decompostion (SVD)

. The row space can be computed by computing the singular value decompostion (SVD)

(33) ![]()

where ![]() are orthonormal matrices, and the matrix

are orthonormal matrices, and the matrix ![]() is the matrix of singular values. Let

is the matrix of singular values. Let ![]() , denote an estimate of the state order (we will explain in the sequel a simple method to estimate the state order, and a more complex method will be explained in our next post). Then, an estimate of the matrix

, denote an estimate of the state order (we will explain in the sequel a simple method to estimate the state order, and a more complex method will be explained in our next post). Then, an estimate of the matrix ![]() , denoted by

, denoted by ![]() , is computed as follows

, is computed as follows

(34) ![]()

where ![]() and the superscript

and the superscript ![]() means that we are taking square roots of the diagonal entries of the matrix

means that we are taking square roots of the diagonal entries of the matrix ![]() . Once we have estimated the matrix

. Once we have estimated the matrix ![]() we can obtain the estimate of the state sequence because:

we can obtain the estimate of the state sequence because:

(35) ![]()

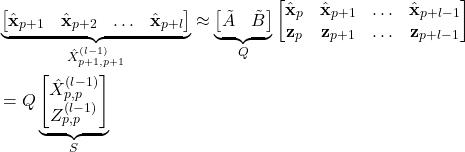

Step 3: Estimation of the System Matrices

Now that we have estimated the state sequence, we can estimate the system matrices by forming two least squares problems. Let us write the equation (14) once more over here for clarity

(36) ![]()

where

(37) ![]()

From this equation, we have

(38)

Since the matrix ![]() is known, the matrix

is known, the matrix ![]() can be estimated by solving

can be estimated by solving

(39) ![]()

The solution is given by

(40) ![]()

Similarly, from the output equation of (2), we have:

(41)

and the matrix ![]() can be estimated by forming a similar least squares problem

can be estimated by forming a similar least squares problem

(42) ![]()

The solution is given by

(43) ![]()

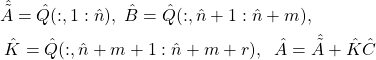

Once these matrices have been estimated, we can obtain the system matrices as follows:

(44)

where the estimates of the matrices ![]() , and

, and ![]() are denoted by

are denoted by ![]() , and

, and ![]() , respectively.

, respectively.

The Python code for estimating the matrices is given below.

###############################################################################

# This function estimates the state-space model:

# x_{k+1} = A x_{k} + B u_{k} + Ke(k)

# y_{k} = C x_{k} + e(k)

# Acl= A - KC

# Input parameters:

# "U" - is the input matrix of the form U \in mathbb{R}^{m \times timeSteps}

# "Y" - is the output matrix of the form Y \in mathbb{R}^{r \times timeSteps}

# "Markov" - matrix of the Markov parameters returned by the function "estimateMarkovParameters()"

# "Z_0_pm1_l" - data matrix returned by the function "estimateMarkovParameters()"

# "past" is the past horizon

# "future" is the future horizon

# Condition: "future" <= "past"

# "order_estimate" - state order estimate

# Output parameters:

# the matrices: A,Acl,B,K,C

# s_singular - singular values of the matrix used to estimate the state-sequence

# X_p_p_l - estimated state sequence

def estimateModel(U,Y,Markov,Z_0_pm1_l,past,future,order_estimate):

import numpy as np

from scipy import linalg

timeSteps=U.shape[1]

m=U.shape[0]

r=Y.shape[0]

l=timeSteps-past-1

n=order_estimate

Qpm1=np.zeros(shape=(future*r,past*(m+r)))

for i in range(future):

Qpm1[i*r:(i+1)*r,i*(m+r):]=Markov[:,:(m+r)*(past-i)]

# estimate the state sequence

Qpm1_times_Z_0_pm1_l=np.matmul(Qpm1,Z_0_pm1_l)

Usvd, s_singular, Vsvd_transpose = np.linalg.svd(Qpm1_times_Z_0_pm1_l, full_matrices=True)

# estimated state sequence

X_p_p_l=np.matmul(np.diag(np.sqrt(s_singular[:n])),Vsvd_transpose[:n,:])

X_pp1_pp1_lm1=X_p_p_l[:,1:]

X_p_p_lm1=X_p_p_l[:,:-1]

# form the matrices Z_p_p_lm1 and Y_p_p_l

Z_p_p_lm1=np.zeros(shape=(m+r,l))

Z_p_p_lm1[0:m,0:l]=U[:,past:past+l]

Z_p_p_lm1[m:m+r,0:l]=Y[:,past:past+l]

Y_p_p_l=np.zeros(shape=(r,l+1))

Y_p_p_l=Y[:,past:]

S=np.concatenate((X_p_p_lm1,Z_p_p_lm1),axis=0)

ABK=np.matmul(X_pp1_pp1_lm1,np.linalg.pinv(S))

C=np.matmul(Y_p_p_l,np.linalg.pinv(X_p_p_l))

Acl=ABK[0:n,0:n]

B=ABK[0:n,n:n+m]

K=ABK[0:n,n+m:n+m+r]

A=Acl+np.matmul(K,C)

return A,Acl,B,K,C,s_singular,X_p_p_l

###############################################################################

# end of function

###############################################################################

Model estimation in practice

To identify the model and test the model performance, we need to generate two sets of data. The first set of data, referred to as the identification data set is used to identify the model. This data set is generated for a random initial condition and for a random input sequence. Not every input sequence can be used for system identification.

The input sequence needs to satisfy the so-called persistency of the excitation condition. Loosely speaking, the identification sequence needs to be rich enough to excite all the system modes. Usually, a random sequence will satisfy this condition. Besides, the identification data set, we are using another data set. This data set, which is referred to as the validation data set is used to test the estimated model performance. This data set is generated for a random initial condition and for a random input sequence. The validation initial state and the input sequence are generated such that they are statistically independent from the initial state and the input sequence used for the identification.

All the functions used for the identification are stored in the file “functionsSID”. Here we provide a detailed explanation of the driver code. As a numerical example, we use the example introduced at the beginning of this post. We start with an explanation of the code. The following code is used to import the necessary functions and to define the model.

import numpy as np

import matplotlib.pyplot as plt

from numpy.linalg import inv

from functionsSID import estimateMarkovParameters

from functionsSID import estimateModel

from functionsSID import systemSimulate

from functionsSID import estimateInitial

from functionsSID import modelError

###############################################################################

# Define the model

# masses, spring and damper constants

m1=20 ; m2=20 ; k1=1000 ; k2=2000 ; d1=1 ; d2=5;

# define the continuous-time system matrices

Ac=np.matrix([[0, 1, 0, 0],[-(k1+k2)/m1 , -(d1+d2)/m1 , k2/m1 , d2/m1 ], [0 , 0 , 0 , 1], [k2/m2, d2/m2, -k2/m2, -d2/m2]])

Bc=np.matrix([[0],[0],[0],[1/m2]])

Cc=np.matrix([[1, 0, 0, 0]])

# end of model definition

###############################################################################

###############################################################################

The following code lines are used to define the identification parameters and to define the identification and validation data sets.

###############################################################################

# parameter definition

r=1; m=1 # number of inputs and outputs

# total number of time samples

time=300

# discretization constant

sampling=0.05

# model discretization

I=np.identity(Ac.shape[0]) # this is an identity matrix

A=inv(I-sampling*Ac)

B=A*sampling*Bc

C=Cc

# check the eigenvalues

eigen_A=np.linalg.eig(Ac)[0]

eigen_Aid=np.linalg.eig(A)[0]

# define an input sequence and initial state for the identification

input_ident=np.random.rand(1,time)

x0_ident=np.random.rand(4,1)

#define an input sequence and initial state for the validation

input_val=np.random.rand(1,time)

x0_val=np.random.rand(4,1)

# simulate the discrete-time system to obtain the input-output data for identification and validation

Y_ident, X_ident=systemSimulate(A,B,C,input_ident,x0_ident)

Y_val, X_val=systemSimulate(A,B,C,input_val,x0_val)

# end of parameter definition

###############################################################################

A few comments are in order. The code lines 11-14 are used to discretize the model. The code lines 17-18 are used to check the eigenvalues of the models. This is important for detecting possible instabilities. The code lines 21-30 are used to define the identification and validation data sets.

The following code is used to estimate the model and to perform the model validation.

###############################################################################

# model estimation and validation

# estimate the Markov parameters

past_value=10 # this is the past window - p

Markov,Z, Y_p_p_l =estimateMarkovParameters(input_ident,Y_ident,past_value)

# estimate the system matrices

model_order=3 # this is the model order \hat{n}

Aid,Atilde,Bid,Kid,Cid,s_singular,X_p_p_l = estimateModel(input_ident,Y_ident,Markov,Z,past_value,past_value,model_order)

plt.plot(s_singular, 'x',markersize=8)

plt.xlabel('Singular value index')

plt.ylabel('Singular value magnitude')

plt.yscale('log')

#plt.savefig('singular_values.png')

plt.show()

# estimate the initial state of the validation data

h=10 # window for estimating the initial state

x0est=estimateInitial(Aid,Bid,Cid,input_val,Y_val,h)

# simulate the open loop model

Y_val_prediction,X_val_prediction = systemSimulate(Aid,Bid,Cid,input_val,x0est)

# compute the errors

relative_error_percentage, vaf_error_percentage, Akaike_error = modelError(Y_val,Y_val_prediction,r,m,30)

print('Final model relative error %f and VAF value %f' %(relative_error_percentage, vaf_error_percentage))

# plot the prediction and the real output

plt.plot(Y_val[0,:100],'k',label='Real output')

plt.plot(Y_val_prediction[0,:100],'r',label='Prediction')

plt.legend()

plt.xlabel('Time steps')

plt.ylabel('Predicted and real outputs')

#plt.savefig('results3.png')

plt.show()

# end of code

###############################################################################

The code lines 5-6 are used to estimate the Markov parameters. We guess the value of the past window (a systematic procedure for selecting the past window will be explained in the next post). The code lines 9-10 are used to estimate the model. Here we also guess the model order. However, there is a relatively simple method to visually select a model order (a more advanced model order selection method will be explained in the next post).

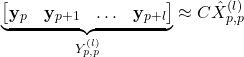

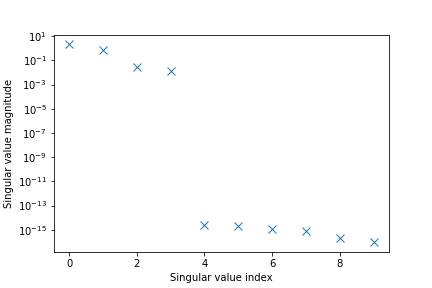

Namely, the function estimateModel() also returns the singular values of the matrix ![]() , see the equation (33). We can select the model order on the basis of the gap between the singular values.

, see the equation (33). We can select the model order on the basis of the gap between the singular values.

Figure 3 below shows the magnitudes of the singular values.

In Fig. 3. we can clearly detect a gap between the singular values 4 and 5. This means that 4 is a good estimate of the model order. In fact, this is the exact model order, since our system is of the fourth-order. This graph is generated using

code lines 12-17.

The code lines 19-21 are used to estimate the initial state of the validation data. Namely, to test the model performance, we need to simulate the estimated model using the validation input sequence. However, to perform the simulation,

we need to know the initial states. The identified model has been simulated using the code line 24. The code lines 27-28 are used to compute the relative model error (a relative error measured by the 2-norm between the true validation output and the predicted one).

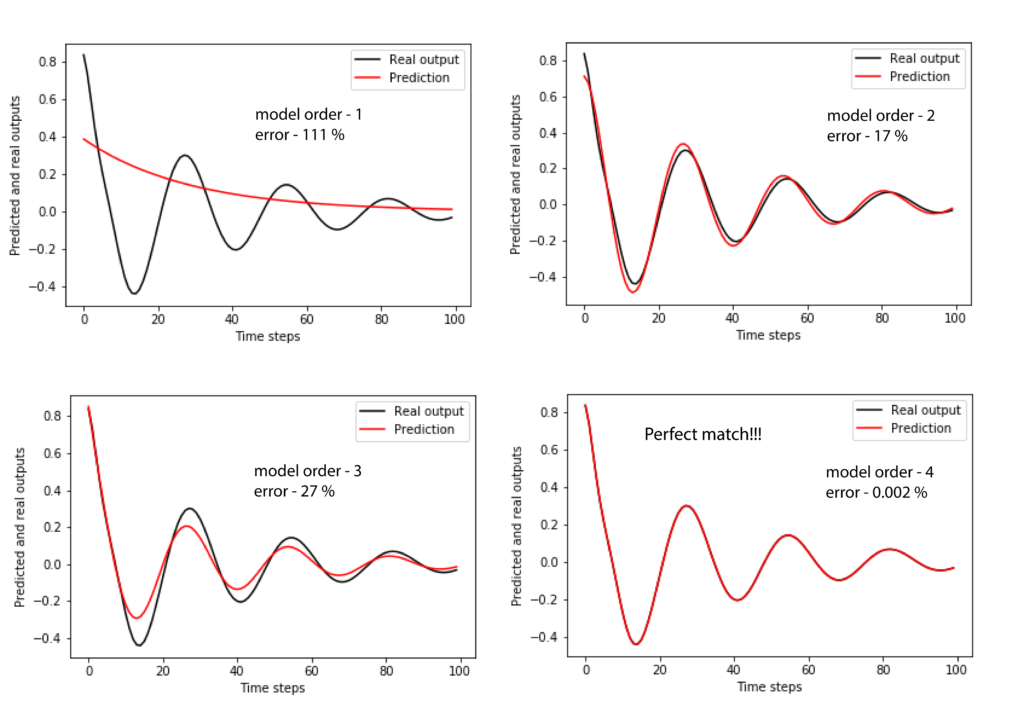

The code lines 31-37 are used to plot the predicted validation output and the true validation output. The results for several model orders are shown in Figure 4 below.

An interesting phenomenon can be observed. Namely, the relative error for the model order of 2 is smaller than the relative error for the model order of 3.

We hope that we have convinced you of the superior performance of the subspace identification algorithms. Notice that we are able to estimate the model by performing only numerical linear algebra steps, without the need to use complex optimization techniques!