In this control theory, mobile robotics, and estimation tutorial we explain how to develop and implement an extended Kalman filter algorithm for localization of mobile robots. We explain how to use the extended Kalman filter to localize (estimate) the robot location and orientation (location and orientation are called the robot pose). We also explain how to implement the localization algorithm in Python from scratch. Here, we need to immediately state that the localization algorithm is developed under the assumption that the locations of external landmarks (markers) are known and that the landmark measurement correspondence is also known. In our second tutorial series, we will consider the case when the landmark measurement correspondence is not known, and in the third tutorial series, we will consider the case when both the landmark locations and their measurement correspondence are not known. Do not be confused if you currently do not understand what is landmark or what is landmark measurement correspondence. These terms will be explained in this tutorial series.

Due to the objective complexity of the estimation and localization problems, we split this tutorial series into three parts:

- Part I – You are currently reading the first part. In this part, we clearly explain the problem formulation. We explain what is a localization problem. We then explain what are landmarks (markers), and we explain the difference between localization with known landmarks and unknown landmarks. We summarize the mobile robot model, as well as its odometry model. We introduce the measurement model that provides us with information about the robot’s location with respect to the known landmarks.

- Part II – In part two, whose link is given here, on the basis of the problem formulation given in the first part, we explain how to mathematically solve the robot localization problem by using the extended Kalman filter. We thoroughly explain how to compute all the partial derivatives necessary to implement the extended Kalman filter.

- Part III – In part three of this tutorial series, whose link is given here, we explain how to implement the extended Kalman filter for mobile robot localization in Python from scratch.

This first tutorial part is organized as follows. First, we briefly summarize the kinematics model of the robot used in this tutorial series, as well as its odometry model. Based on these two models we derive the state equation of the mobile robot. We then derive the output equation that takes into account relative measurements with respect to external landmarks. We then briefly explain the landmark correspondence problem. Finally, we present the robot localization problem formulation.

The YouTube tutorial accompanying the first part is given below.

Kinematics and Odometry Models of Mobile Robot-State Equation Derivation

In this tutorial series, in order not to blur the main ideas of robotic localization with too complex mobile robot models, we use a differential drive robot as our mobile robot. This is done since a differential drive robot has a relatively simple configuration (actuation mechanism) which results in a simple kinematics model. The main purpose of this tutorial is to explain the main principles and ideas of robot localization, without blurring the main ideas with complex robotic configurations or complex mathematical models. Of course, everything explained in this tutorial series can be generalized to more complex mobile robot configurations, and even to UAVs or autonomous driving vehicles. We thoroughly explained the model of the differential drive robot in our previous tutorials given here and here. In the sequel, we briefly summarize the model, and by using this model as well as the odometry model we derive the state equation for localization.

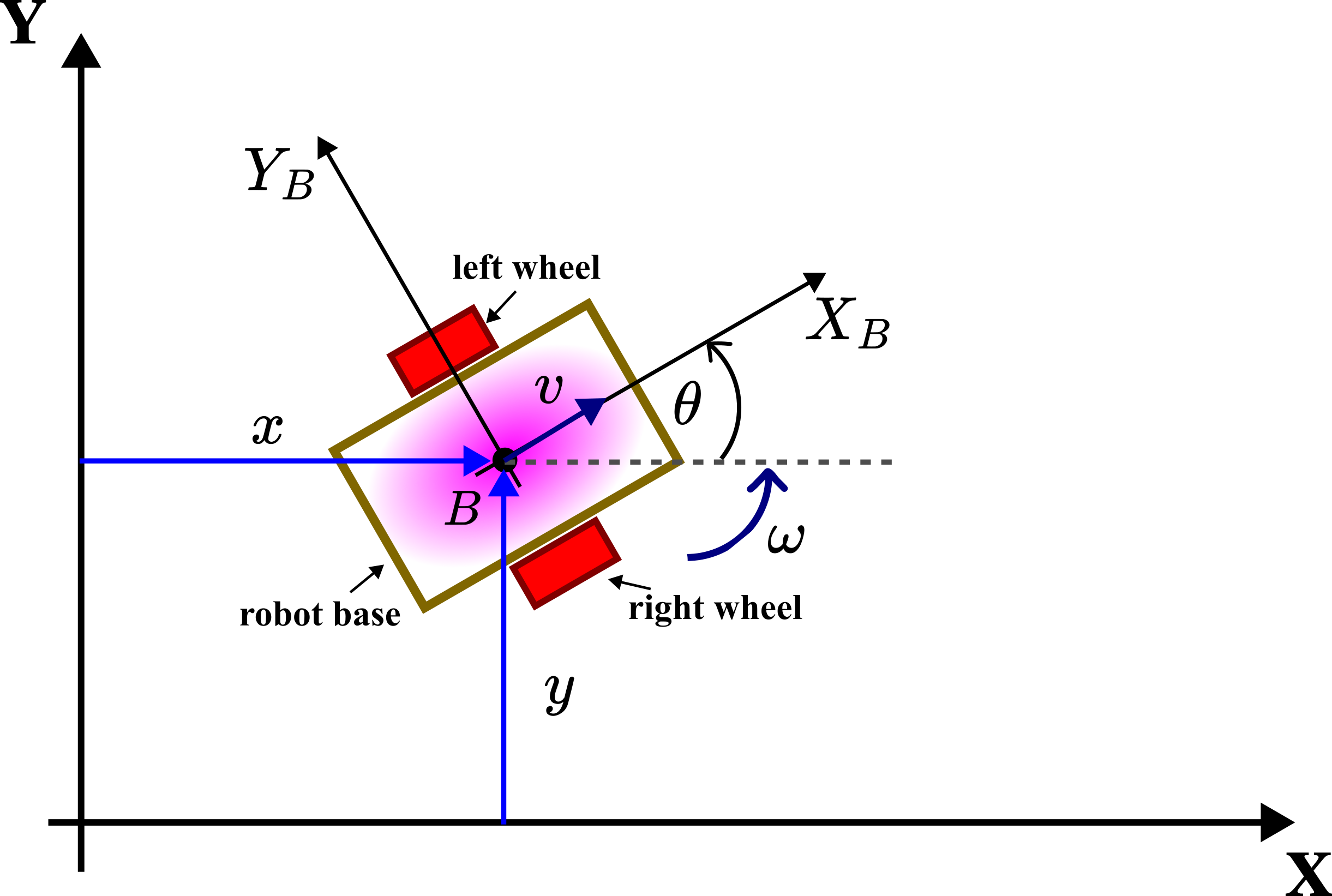

The differential drive (differential wheeled robot) is shown in the figure below.

The robot consists of the robot base and two active (actuated) wheels. There is a third passive caster wheel that is not shown in this graph for clarity. The third caster wheel is used to provide support and stability for the robot base. The symbols used in the above figure are:

is the fixed inertial coordinate system. This coordinate system is also called the inertial frame.

is the body coordinate system. This coordinate system is also called the body frame. The origin of this coordinate system is at the point

which is located at the center of the mass or the center of the symmetry of the mobile robot. The body frame is rigidly attached to the robot body, and it moves (translates and rotates) with the robot body.

and

are the

and

coordinates of the origin of the body frame. That is, these are the coordinates of the center of the body frame in the inertial coordinate system.

- The angle

is the angle that the

axis of the inertial frame makes with the

axis of the body frame. This angle is called the orientation angle.

- The symbol

denotes the instantaneous velocity of the body frame origin

.

- The symbol

denotes the instantaneous angular velocity of the mobile robot.

As explained in our previous tutorial given here, by discretizing the continuous-time kinematics of the mobile robot, we obtain the discrete-time model

(1)

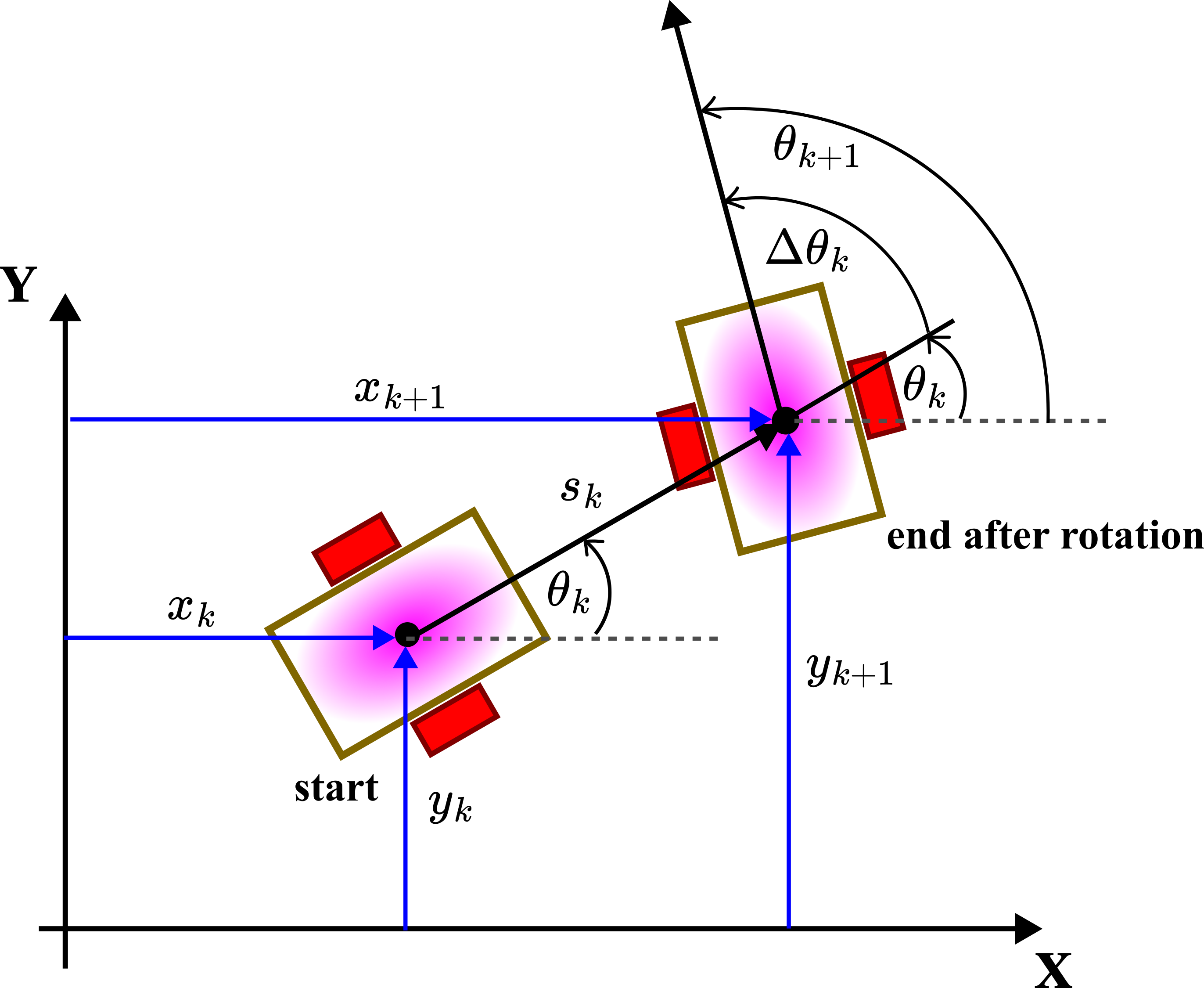

where (also see the figure shown below)

- the subscript

is the discrete-time instant.

and

are the

and

coordinates at the discrete-time instant

. That is,

and

, where

is the discretization constant (

is the time interval between

and

).

is the distance traveled by the robot between the time instants

and

in the direction of the velocity

. It is assumed that the velocity is constant between the time instant

and

.

is the orientation at the discrete-time instant

.

is the change of the orientation between the time instant

and

.

Here, for simplicity, we assume that by using the odometry system, the following quantities are directly measured

- The distance

is measured.

- The change of the angle

is measured.

Instead of this assumption, we can assume that the velocity and angular velocity

are measured directly.

Here, we need to explain the odometry process. We can measure the distance traveled by using wheel encoders or some other method. We can measure the angle change

by using a gyroscope or a compass. However, due to the measurement imperfections and due to other mechanical phenomena, such as for example wheel slipping, we are not able to accurately measure both

and

. Instead, we measure

(2)

where and

are the measurement errors (measurement noise). We assume that both

and

are normally distributed with the standard deviations of

and

, respectively. From (2), we obtain

(3)

By substituting (3) in (1), we obtain

(4)

Let us separate noise from the states and measurements in the last set of equations

(5)

Next, we formally define the states and inputs. The states are defined as follows

(6)

The state vector is defined as follows

(7)

We define the inputs as follows

(8)

Here, you should stop for a second, and try to understand the following. In (8), we defined actual measurements as inputs! However, readers with a strong background in control theory will find this assignment confusing. Usually, inputs in state-space models are control variables determined by an algorithm or determined by us. However, here, we define inputs as output measurements. That is, inputs are defined as measurements of the traveled distance and change of angle. This is a formal assignment that is often used in robotics literature. Also, these inputs are actually only observed at the time instant . This should always be kept in mind.

By substituting (6) and (8) in (5), we obtain

(9)

Next, for clarity, we shift the time index from to

in (9). As a result, we obtain the final form of the state equation

(10)

This is the state equation that will be used for the development of the extended Kalman filter in the next tutorial. Here, it is very important to keep in mind the following when dealing with the state-space model (10). The measurement noise of the measured distance and change of angle formally act as process disturbance in (10). That is, when developing a state estimator, it should always be kept in mind that the stochastic part of the state equation involving and

is not known. However, we know the distribution and standard deviations of these random variables. That is, we know the statistics of

and

. This statistics can be estimated from the collected data.

Next, we need to define the concept of landmarks (markers) and the output equation.

Landmarks (markers) and Output Equation

Localization will be formally defined in the next section of this tutorial. However, here we can briefly say that the localization is the process of estimating the robot pose vector in (6) by using the available measurement data and the pose estimate from the previous time step. It is a very well-known fact that by only using the measurement of the traveled distance and the angle change (or by measuring the velocity and angular velocity) we cannot accurately localize the robot in space. That is, we cannot accurately estimate the robot pose by propagating in time the deterministic part of the state-space model (9). As the time interval of operation grows, the estimation error will also grow (estimation error variances will grow).

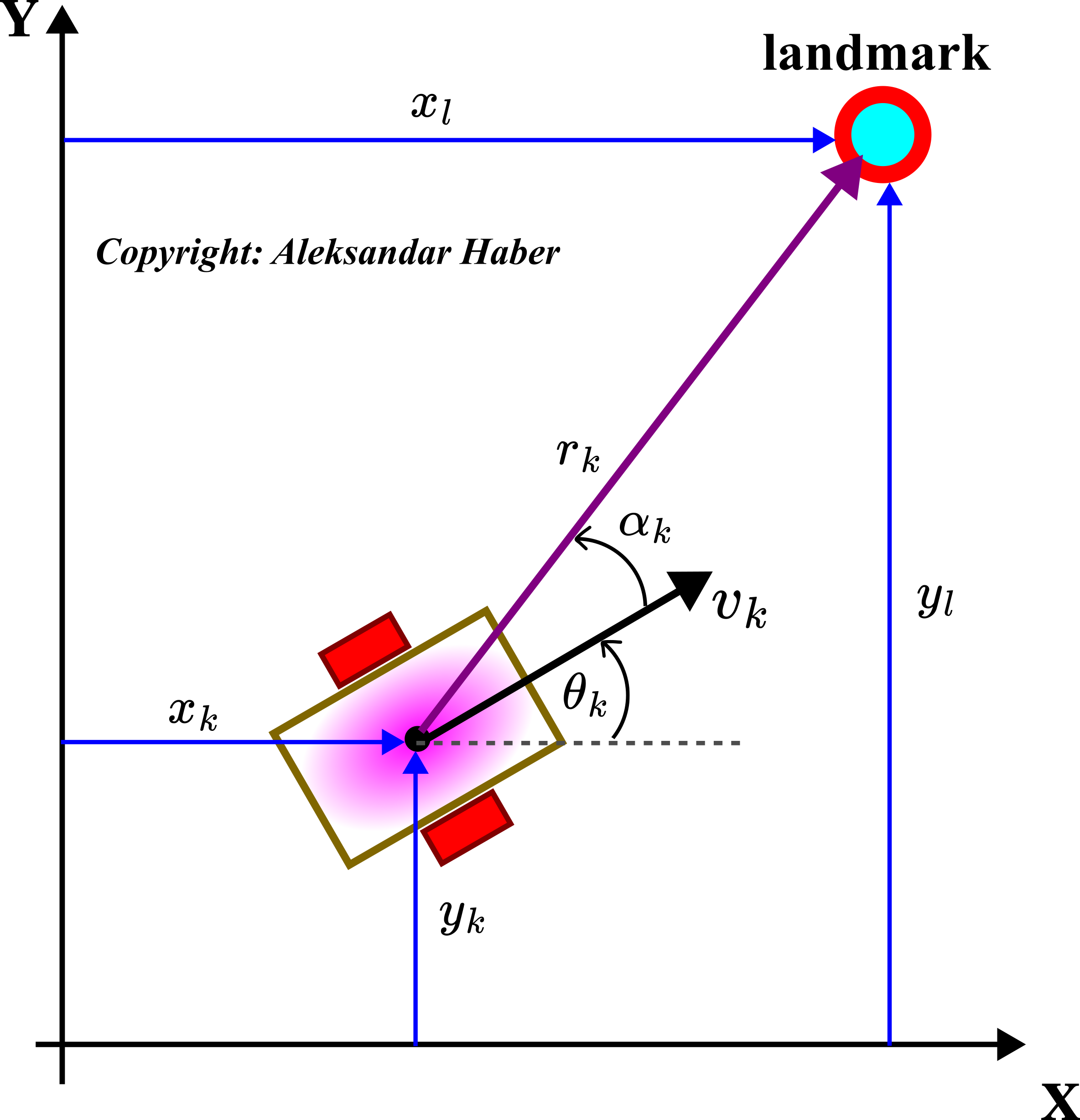

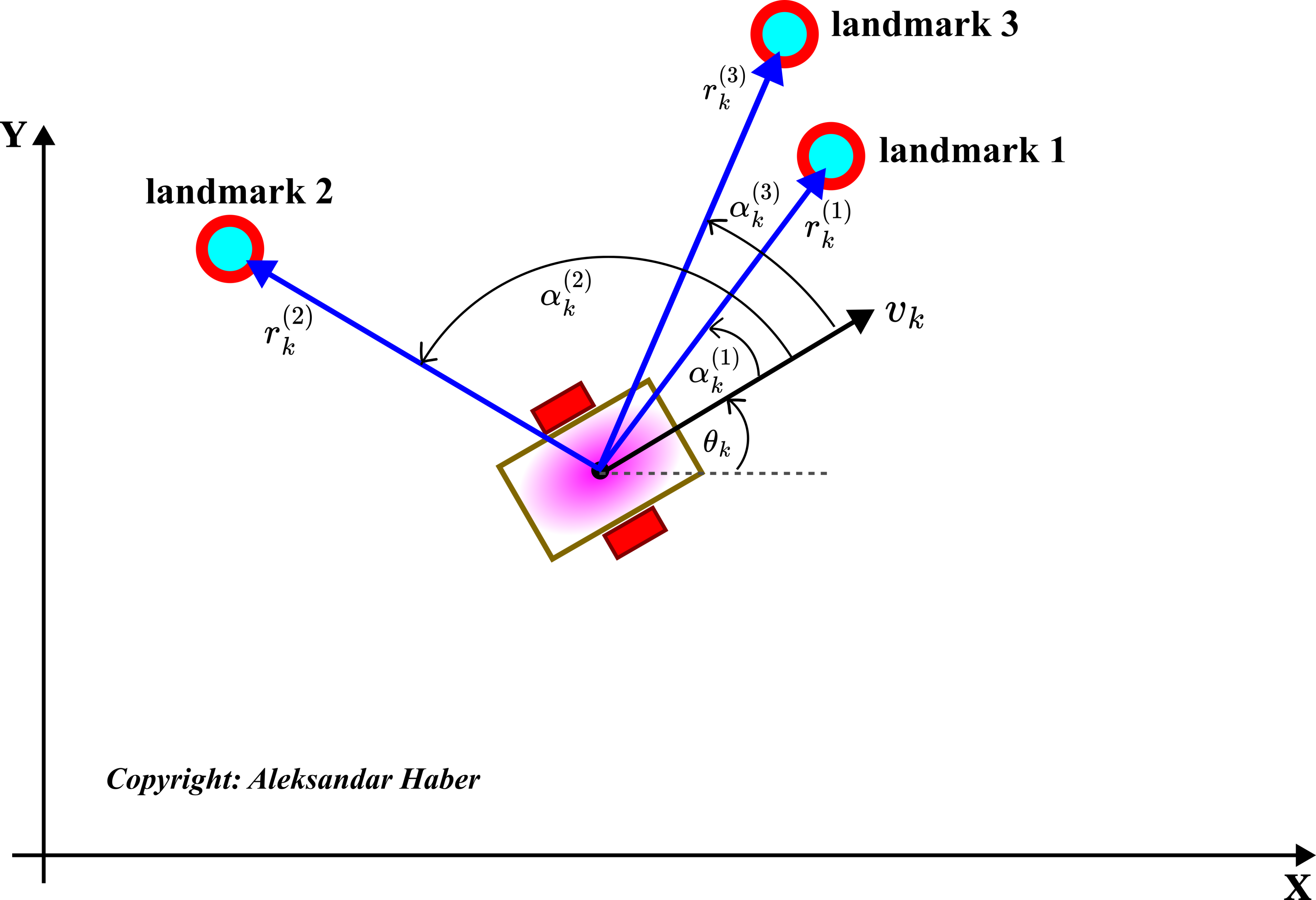

For that reason and to be able to accurately estimate the robot’s location and orientation in space, we need to introduce an additional sensor that is capable of measuring the distance and angle between the robot and external objects known as landmarks or markers in the robotics literature. We can define a landmark (marker) as an object or a feature of the environment that is used as a reference for measuring the robot’s location. Namely, we assume that a mobile robot is equipped with a sensor that can measure the distance between the robot and the landmark and the angle of the line connecting the robot and the landmark. This is illustrated in the figure below.

Figure above shows a landmark with the location , where

and

are the coordinates in the inertial coordinate system. The robot is equipped with a sensor that can measure

- The distance

at the discrete time instant

between the origin of the body frame

and the landmark. This distance is called the range.

- Angle

at the discrete time instant

. This angle is the angle between the line in the direction of the range (the line connecting the robot and landmark) and the heading direction of the robot marked by the velocity

. The angle

is also called the bearing angle or simply bearing.

Here we make the following important assumption:

- The location

of every landmark in the inertial coordinate system is known accurately.

This assumption will be relaxed in our future tutorials on SLAM. Next, we need to define the measurement equation. From Fig. 2, it follows that the range can be expressed as follows

(11)

On the other hand, from the basic geometry we have

(12)

From the last equation, we have

(13)

is the 2-argument arctangent function (here, it is important to use instead of the ordinary arctangent function). Next, we assume that the measurements of the range

and the angle

are corrupted by the measurement noise, that is,

(14)

where and

represent the measurement noise. We assume that both

and

and normally distributed with the standard deviations of

and

, respectively. By substituting the state assignment (6) in (14), we obtain

(15)

The equations (15) represent the measurement model for a single landmark. However, we need to clarify what happens and how the measurement model is defined when we have more than a single landmark. That is, we need to define the landmark correspodence problem.

What is Landmark Correspondence Problem and Why It is Important

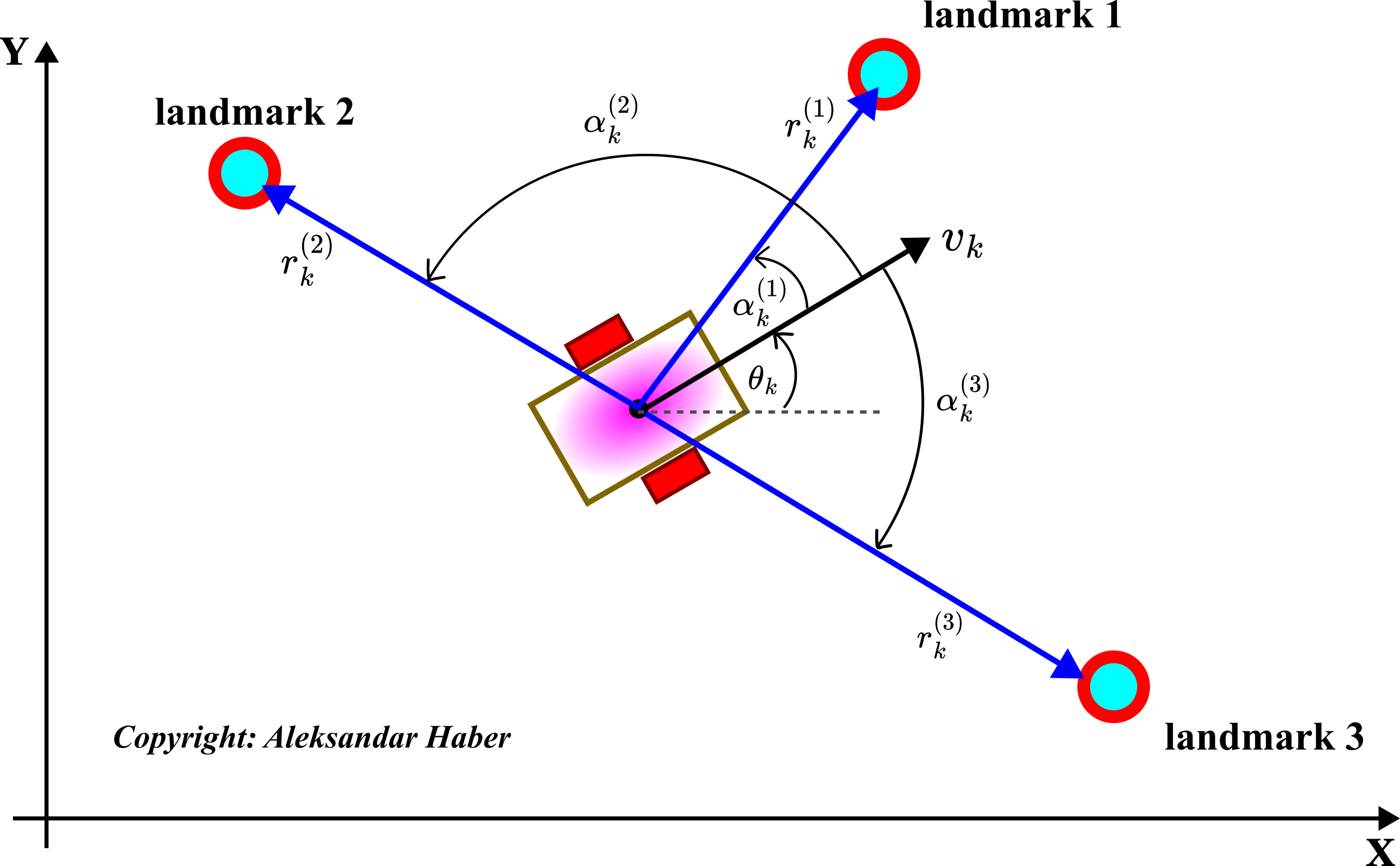

Consider the scenario shown in the figure below.

The robot is in an environment with three landmarks. Let us suppose that we know the locations (coordinates) of all three landmarks. Furthermore, let us assume that the robot sensor can detect the range and bearings (angles) of three landmarks. What the robot receives are three measurements, where every measurement consists of a 2-tuple (range, bearing angle):

(16)

However, these measurements are unlabeled. Unlabeled means that the measurements are not assigned to the corresponding landmarks. That is, in the case of unlabeled measurements, we do not know the correspondence between the landmarks and measurements, and we cannot form the output equations (15). We need to assign every measurement tuple to the corresponding landmark, and after that, we need to form a single measurement equation (15) for every landmark.

The landmark correspondence problem is to assign the landmarks to the received measurements, that is, to label the received measurement tuples, where the label denotes the landmark that corresponds to the measurement. For example, in the case of Figure 3 above and the set of measurements (16), it is obvious that we have the following labels

(17)

The landmark labeling and correspondence in the figure are obvious since the landmarks are decently separated. Consequently, on the basis of the current robot location and received measurements, we can easily figure out how to label the measurements. However, in contrast, consider the landmark configuration shown below.

In Fig. 4 above, landmark 3 and landmark 1 are close to each other. Consequently, the measurements might look like this

(18)

Taking into account that the pose of the robot has to be estimated together with determining the landmark correspondence, and taking into account that all the measurements (including the robot odometry measurements) are corrupted by the measurement noise, it is difficult to properly assign the landmark labels to first and third measurements. That is, we are not sure how to assign landmark 1 and landmark 3 to these measurements.

The correct solution of the landmark correspondence problem is far from trivial and it will be addressed in our future tutorials. In this tutorial series, we assume that the received measurements are properly labeled. That is, in this tutorial series, we assume that the landmark correspondences are perfectly known.

Localization Problem for Mobile Robotics With Known Landmark Correspondence (Labels)

Now, we are ready to formulate the localization problem for mobile robots.

Let us assume that

- There are

fixed landmarks in the environment surrounding the mobile robot.

- The locations of

landmarks are known in the inertial coordinate frame. That is, the location

of every landmark

, where

, is known.

- The mobile robot is equipped with a sensor that can measure the range

and the bearing angle

of every landmark.

- The landmark correspondences are perfectly known.

- The robot is equipped with odometry sensors that can measure the traveled distance

and robot orientation change

.

Then, by using information about

- The odometry readings

and

obtained at the discrete-time instant

.

- Landmark measurements

,

,

,

,

obtained at the discrete-time instant

.

- The pose estimate computed at the previous discrete-time step

:

and

at the discrete-time instant estimate the pose of the robot:

and

.

This problem formulation is graphically illustrated in the figure below.

Here are several important comments about this problem formulation.

First of all, estimates of the quantities are denoted with the hat notation: and

. Then, the third assumption can be made more realistic by assuming that we can only obtain the landmark measurements if the distance between the robot and the landmark is such that the landmark is in the sensor range. For example, some low-cost lidars have a measurement range of about 10-15 meters, and we can obtain the landmark information only if the landmark is in this range. In the next tutorial part, we will investigate the performance of the extended Kalman filter when all the landmarks can be observed at every time instant

and when only landmarks in a given sensor range can be observed at the given discrete-time instant.