In this Python tutorial and mathematics tutorial, we explain how to solve a system of nonlinear equations in Python by using the fsolve() function and without directly specifying the Jacobian matrix. The solver will approximate the Jacobian matrix. In our next post, whose link can be found here, we explained how to solve the system of nonlinear equations by directly specifying the Jacobian matrix.

The function fsolve() can only solve square systems of nonlinear equations. That is, the number of unknown variables has to be equal to the number of equations in the system. According to the documentation, this function is actually a wrapper for the hybrid Powell method implemented in the FORTRAN90 library MINPACK. In our next tutorial, we will explain how to solve in Python overdetermined and underdetermined systems of nonlinear equations.

The YouTube tutorial accompanying this post is given below.

Test Case and Preliminary Transformations and Computations

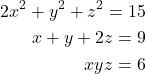

We consider the following system of nonlinear equations

(1)

where ![]() , and

, and ![]() are the unknown variables that we want to determine. This system has at least one solution that is

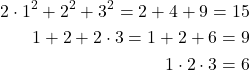

are the unknown variables that we want to determine. This system has at least one solution that is ![]() , and

, and ![]() . We can verify that this is the solution by substituting these values in the original system:

. We can verify that this is the solution by substituting these values in the original system:

(2)

This solution will be used as a test case to test the accuracy of the function fsolve(). It is important to note that while performing simulations, we found another solution:

(3) ![]()

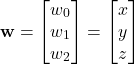

To solve this system in Python, we first need to group the variables in a vector. Let us define the vector ![]() (the symbol for the vector can be arbitrary) that gathers the original variables

(the symbol for the vector can be arbitrary) that gathers the original variables ![]() as follows:

as follows:

(4)

that is

(5) ![]()

where in order to be consistent with Python indexing, we start the index of the variables ![]() from 0.

from 0.

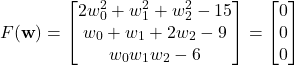

The next step is to use the new notation (4) to write the original system in a vector form given by the following equation

(6) ![]()

where ![]() is a 3 by 1 vector function. By using (4), from (1), we have

is a 3 by 1 vector function. By using (4), from (1), we have

(7)

Python Implementation Without Specifying the Jacobian Matrix

Here, we explain how to implement the solution in Python without specifying the Jacobian matrix. In our next tutorial, whose link is given here, we explain how to implement the solution by specifying the analytical form of the Jacobian. The main advantage of specifying the Jacobian matrix is that in this way we can significantly speed up the convergence of the solver. However, we can still compute the solution without specifying the Jacobian matrix. The solver will actually approximate the Jacobian matrix.

The first step is to import the necessary libraries.

import numpy as np

from scipy.optimize import fsolve

Then, we need to define the function ![]() given by (7). That is, we need to define a function that accepts a vector argument

given by (7). That is, we need to define a function that accepts a vector argument ![]() and that returns the value of the function

and that returns the value of the function ![]() . The function is given below.

. The function is given below.

# for a given variable w, this function returns F(w)

# if w is the solution of the nonlinear system, then

# F(w)=0

# F can be interpreted as the residual

def nonlinearEquation(w):

F=np.zeros(3)

F[0]=2*w[0]**2+w[1]**2+w[2]**2-15

F[1]=w[0]+w[1]+2*w[2]-9

F[2]=w[0]*w[1]*w[2]-6

return F

We will provide the name of this function as an input parameter to the fsolve() function. Since fsolve() uses an iterative method to find the solution, we need to provide an initial guess of the solution that is updated iteratively by the fsolve() function. We generate this initial guess as a random vector, and we call the fsolve() function. This is done by the following code lines:

# generate an initial guess

initialGuess=np.random.rand(3)

# solve the problem

solutionInfo=fsolve(nonlinearEquation,initialGuess,full_output=1)

In our case, the fsolve() function accepts three arguments:

- The name of the function that defines the system of nonlinear equations. In our case, the name is “nonlinearEquation”.

- Initial guess of the solution. In our case, it is “initialGuess”.

- Additional parameters. In our case, we are using only a single additional parameter “full_output=1”. This means that we will force the fsolve() function to return not only the final converged solution, but also a complete data structure containing all the information about the computed solution and the solution process.

Of course, fsolve() can accept additional parameters, such as Jacobian matrix, tolerances, number of iterations, etc. The webpage whose link is given here explains these parameters.

The fsolve() returns the following tuple with all the information

(array([1.16022233, 1.67875447, 3.0805116 ]),

{‘nfev’: 29,

‘fjac’: array([[-0.63698827, -0.12872891, -0.76004921],

[-0.01731484, -0.98332044, 0.18105553],

[ 0.77067901, -0.12849038, -0.62413467]]),

‘r’: array([-7.76826293, -5.68608959, -6.52296419, -0.27258245, -1.53724827,

2.73407002]),

‘qtf’: array([-7.38371370e-09, 8.13569906e-10, 1.17127426e-09]),

‘fvec’: array([-2.24709140e-12, 0.00000000e+00, -2.00284234e-12])},

1,

‘The solution converged.’)

The first entry of this tuple is the computed solution: it is [1.16022233, 1.67875447, 3.0805116 ]. Note that this is the second solution. If we run the code again with a different random initial guess, we will most likely obtain the first solution: [1., 2., 3.]. The second entry “nfev” is the number of function evaluations. Another important parameter is the parameter “fvec”. This is the function ![]() evaluated at the computed solution. This value can be seen as the residual and it can quantify how accurately our computed solution solves the equation. An explanation of other parameters can be found here.

evaluated at the computed solution. This value can be seen as the residual and it can quantify how accurately our computed solution solves the equation. An explanation of other parameters can be found here.

We can extract the solution and manually compute the residual, as follows

#extract the solution

solution=solutionInfo[0]

# compute the residual

residual=nonlinearEquation(solution)