In this control engineering tutorial, we provide an intuitive understanding of Lyapunov’s stability analysis. The YouTube video accompanying this tutorial is given below.

1D Dynamical System and Analytical Solution

Let us consider the following dynamical system

(1) $\dot{x} = -x$

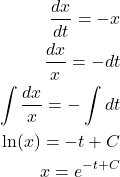

Let us solve this differential equation by separating the variables. We have

(2) $\frac{dx}{dt} = -x \;\Rightarrow\; \frac{dx}{x} = -dt \;\Rightarrow\; \ln|x| = -t + c \;\Rightarrow\; x(t) = C e^{-t}$

where $C$ is a constant that we need to determine. We determine this constant from the initial condition. Let us assume that

(3) $x(0) = x_0$

where $x_0$ is an arbitrary initial condition. It can be positive or negative. By substituting this initial condition into the last equation of (2), we obtain

(4) $C = x_0$

Consequently, the solution can be written as follows

(5) $x(t) = x_0 e^{-t}$

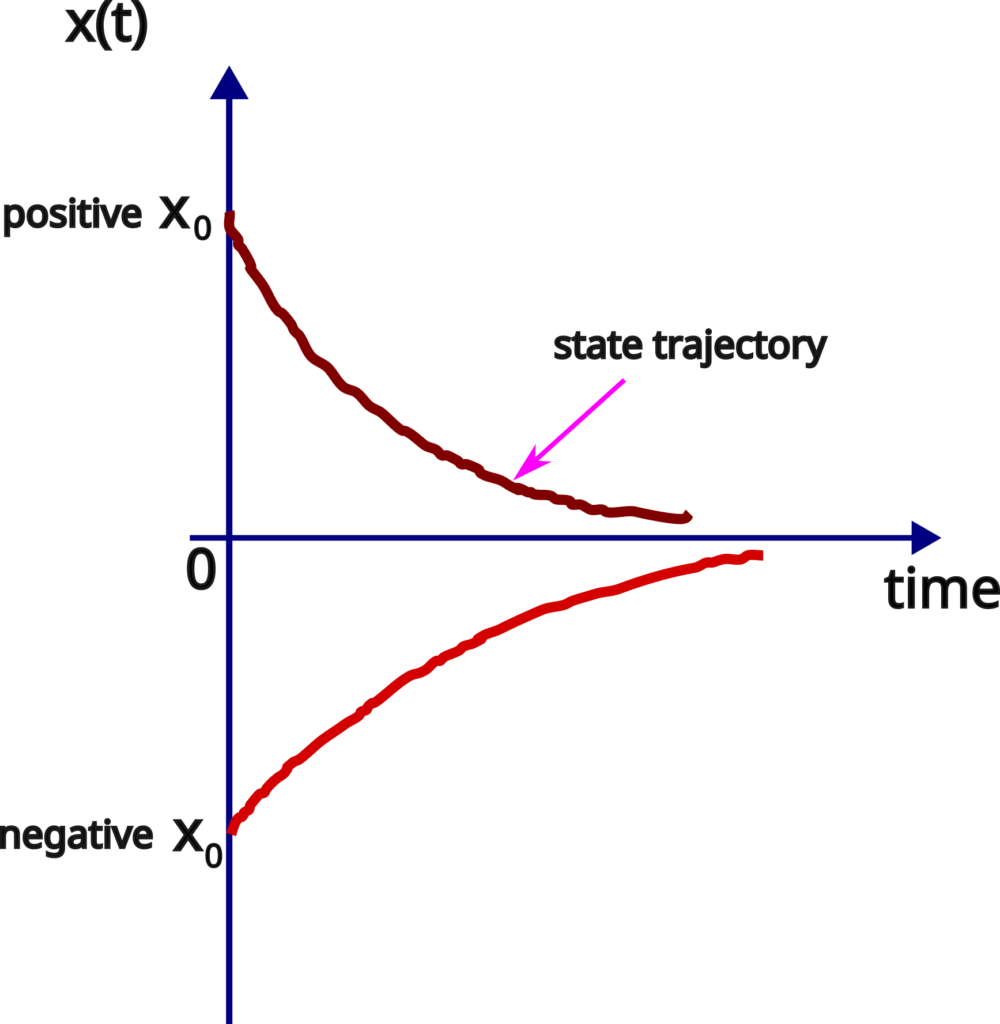

Let us next illustrate this solution for different values of $x_0$. The state trajectories are shown in the figure below.

If the initial condition is positive, then the state trajectory starting from that initial condition decreases exponentially according to the law (5). However, it never reaches zero in finite time; it only approaches zero as time goes to infinity. On the other hand, if the initial condition is negative, then the state trajectory increases toward zero. Again, it never reaches zero in finite time. That is, the state always stays negative.
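The behavior described above can be checked numerically. The following is a minimal sketch, assuming the system is $\dot{x} = -x$ with the closed-form solution $x(t) = x_0 e^{-t}$. The function names, the step count, and the initial conditions are illustrative choices and not part of the original tutorial. The sketch compares the closed-form solution with a simple forward Euler integration.

```python
import math

def analytical_solution(x0, t):
    """Closed-form solution x(t) = x0 * exp(-t), assuming dx/dt = -x."""
    return x0 * math.exp(-t)

def euler_trajectory(x0, t_end, steps):
    """Integrate dx/dt = -x with the forward Euler method (illustrative only)."""
    dt = t_end / steps
    x = x0
    for _ in range(steps):
        x += dt * (-x)  # x_{k+1} = x_k + dt * f(x_k), with f(x) = -x
    return x

# Hypothetical initial conditions: one positive, one negative.
for x0 in (2.0, -2.0):
    exact = analytical_solution(x0, 5.0)
    approx = euler_trajectory(x0, 5.0, 100_000)
    print(f"x0 = {x0:+.1f}: exact x(5) = {exact:+.6f}, Euler x(5) = {approx:+.6f}")
```

In both cases the state keeps the sign of the initial condition and shrinks in magnitude, which is consistent with the trajectories approaching zero without reaching it in finite time.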

These trajectories are also illustrated in the figure below.

From this analysis, we conclude that the equilibrium point $x = 0$ is asymptotically stable.

Stability Analysis Without Solving the Equation – First Approach

Let us try to analyze the stability of the equilibrium point without actually solving the differential equation (1). Here, for clarity, we write the differential equation once more

(6) $\dot{x} = -x$

Let us assume that at a certain point in time, the state is positive. This means that $x > 0$. This implies that the right-hand side of the differential equation (6) is negative. This further implies that the derivative of the state with respect to time is negative. That is, the state decreases and approaches zero.

On the other hand, let us assume that at a certain point in time, the state is negative. This means that $x < 0$. This further implies that the right-hand side of (6), and hence the first derivative, is positive. This means that the state increases and approaches zero. This is illustrated in the figure below.

By using this simple analysis, we conclude that the equilibrium point is asymptotically stable.
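The sign argument above can be illustrated with a few sample states. The following is a minimal sketch, assuming the right-hand side of the system is $f(x) = -x$. The helper `moves_toward_origin` and the sample values are hypothetical and introduced only for illustration. The product $x f(x)$ is negative exactly when the derivative points toward the origin.

```python
def f(x):
    """Right-hand side of the assumed system dx/dt = -x."""
    return -x

def moves_toward_origin(x):
    """True if, at a non-zero state x, the derivative has the opposite sign of x."""
    return x * f(x) < 0

# Hypothetical sample states on both sides of the equilibrium.
samples = [-3.0, -0.5, 0.25, 4.0]
for x in samples:
    print(f"x = {x:+.2f}, dx/dt = {f(x):+.2f}, toward origin: {moves_toward_origin(x)}")
```

A finite set of samples is only a sanity check. The conclusion for all states follows from the sign argument in the text, not from the code.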

Stability Analysis by Using Lyapunov’s Stability Theorem

Let us recall the Lyapunov stability theorem. We introduced this theorem in our previous tutorial, which can be found here. For completeness, in the sequel, we restate the Lyapunov stability theorem.

Lyapunov (local) Stability Theorem: Consider a dynamical system

(7) $\dot{x} = f(x)$

where $x \in \mathbb{R}^{n}$ is a state vector, and $f: \mathbb{R}^{n} \rightarrow \mathbb{R}^{n}$ is a (nonlinear) vector function. Let $x^{*}$ be an equilibrium point of (7). Let us assume that we are able to find a continuously differentiable scalar function of the state vector argument $V(x)$, defined on a neighborhood set $D$ of $x^{*}$, that satisfies the following two conditions:

- The function $V(x)$ is (locally) positive definite in $D$. That is, $V(x^{*}) = 0$ and $V(x) > 0$ for all $x \neq x^{*}$ in $D$.
- The time derivative of $V(x)$ along state trajectories of the system is (locally) negative semi-definite in $D$. That is, $\dot{V}(x) \leq 0$ for all $x$ in $D$.

Then, $x^{*}$ is stable.

In addition, if the first time derivative of $V(x)$ is (locally) negative definite in $D$ along any state trajectory of the system, that is, $\dot{V}(x) < 0$ in $D \setminus \{x^{*}\}$, then $x^{*}$ is asymptotically stable.
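The two conditions of the theorem can be spot-checked on sample points for a scalar system. The following is a minimal sketch, assuming a one-dimensional state and an equilibrium at zero. The function `check_lyapunov_conditions` and its arguments are hypothetical and do not come from any library. A check on finitely many samples is evidence only and does not replace a proof.

```python
def check_lyapunov_conditions(f, V, grad_V, samples, x_eq=0.0):
    """Sample-based sanity check of the theorem's conditions for a scalar system.

    Returns (positive_definite, negative_semidefinite, negative_definite),
    evaluated only on the given sample points.
    """
    others = [x for x in samples if x != x_eq]
    positive_definite = V(x_eq) == 0 and all(V(x) > 0 for x in others)
    # Time derivative along trajectories: dV/dt = (dV/dx) * f(x).
    v_dots = [grad_V(x) * f(x) for x in others]
    negative_semidefinite = all(v <= 0 for v in v_dots)
    negative_definite = all(v < 0 for v in v_dots)
    return positive_definite, negative_semidefinite, negative_definite

# Sample points in the neighborhood [-2, 2] (an illustrative choice).
samples = [k / 10.0 for k in range(-20, 21)]

# Assumed stable example: dx/dt = -x with V(x) = x**2.
stable = check_lyapunov_conditions(lambda x: -x, lambda x: x * x, lambda x: 2.0 * x, samples)

# Contrasting example: dx/dt = +x with the same V fails the derivative conditions.
unstable = check_lyapunov_conditions(lambda x: x, lambda x: x * x, lambda x: 2.0 * x, samples)

print("dx/dt = -x:", stable)
print("dx/dt = +x:", unstable)
```

Failing the check for one candidate $V$ does not by itself show instability, since the theorem only gives a sufficient condition.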

Let us select the following Lyapunov function candidate for our one-dimensional system:

(8) $V(x) = x^{2}$

This function is positive definite everywhere, and $V(0) = 0$. The first condition is satisfied. Let us compute the time derivative of this function along the trajectories of the system. We have

(9) $\dot{V} = \frac{dV}{dt} = \frac{dV}{dx} \frac{dx}{dt} = 2 x \dot{x}$

Here, while computing the first derivative of $V$, we used the chain rule. That is, we assumed that $x = x(t)$, and consequently $V = V(x(t))$. That is, $V$ is a composite function since $x$ depends on time. Since we are computing the first derivative along the trajectories of the system, we need to substitute the system dynamics in the last equation. By substituting (1) in the last equation, we obtain

(10) $\dot{V} = 2 x (-x) = -2 x^{2}$

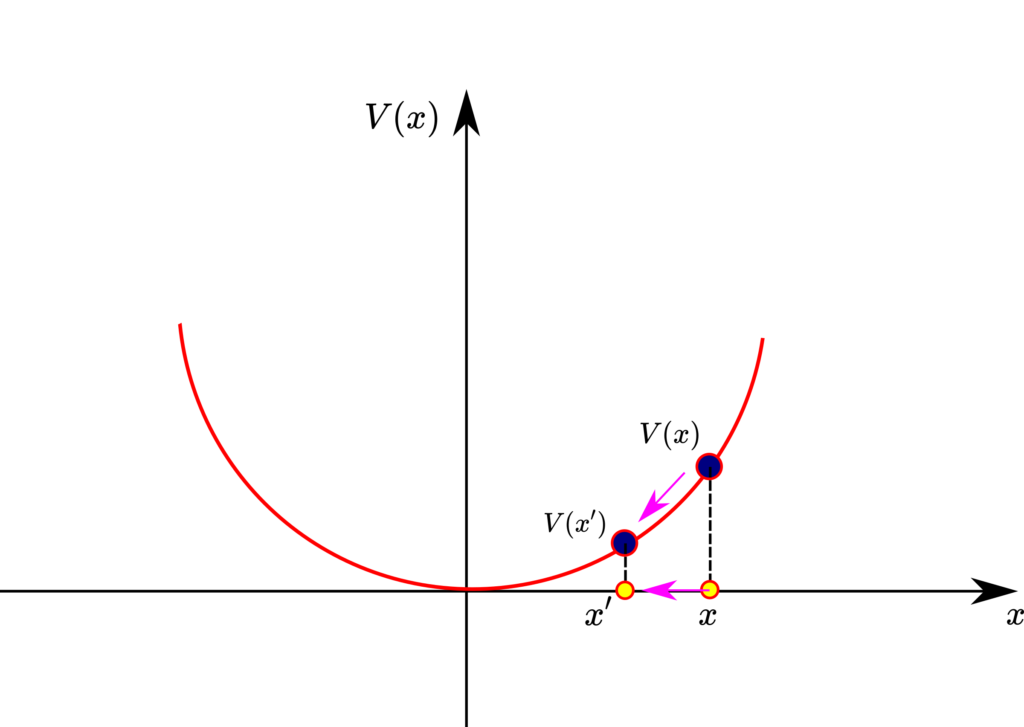

The function in (10) is negative definite everywhere. Consequently, we conclude that the function $V(x)$ is a Lyapunov function and that the equilibrium point is asymptotically stable. However, can we give a physical interpretation and intuitive explanation of this stability result? The answer is yes. For that purpose, consider the figure shown below.

Let us assume that at the time instant $t_1$, the system was at state $x_1$. Then, since the system is asymptotically stable, at some other time instant $t_2 > t_1$, the system will be in state $x_2$ that is closer to the origin. In fact, the system is exponentially stable (this can be seen from the solution (5)). Consider the figure shown above. The state trajectory of the system along the x-axis can be seen as the projection of the trajectory of a ball that rolls up or down the Lyapunov function. If the time derivative of $V$ is negative, this means that the ball has to roll down. This in turn means that the state trajectory of the system has to approach the zero equilibrium point. That is, $|x(t)|$ should decrease over time. This is a very rough explanation of why the negative definiteness of the first derivative of the Lyapunov function ensures that the equilibrium point is asymptotically stable.

On the other hand, let us assume that the system is asymptotically stable. This means that $\lim_{t \to \infty} x(t) = 0$. By using this fact, we obtain:

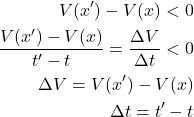

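(11) $\lim_{t \to \infty} V(x(t)) = V\left(\lim_{t \to \infty} x(t)\right) = V(0) = 0$

Here, we used the continuity of $V$. From the last equation, we have that

(12) $V(x(t)) \rightarrow 0 \text{ as } t \rightarrow \infty$

That is, we have shown that from the asymptotic stability, we obtain that $\lim_{t \to \infty} V(x(t)) = 0$.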
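The chain-rule computation and the limit argument can be checked numerically along one trajectory. The following is a minimal sketch, assuming the system $\dot{x} = -x$, the solution $x(t) = x_0 e^{-t}$, and the candidate $V(x) = x^{2}$. The initial condition, the time grid, and the step `h` are hypothetical choices. The sketch compares a finite-difference estimate of $dV/dt$ with the formula $\dot{V} = -2x^{2}$ and evaluates $V$ at increasing times.

```python
import math

def V(x):
    """Assumed Lyapunov function candidate V(x) = x**2."""
    return x * x

def V_dot(x):
    """Chain rule along trajectories of dx/dt = -x: dV/dx * dx/dt = 2x * (-x)."""
    return 2.0 * x * (-x)

def x_of_t(x0, t):
    """Assumed closed-form trajectory x(t) = x0 * exp(-t)."""
    return x0 * math.exp(-t)

x0 = -1.5  # hypothetical initial condition
times = [0.0, 0.5, 1.0, 2.0, 4.0, 8.0]
values = [V(x_of_t(x0, t)) for t in times]

# Central finite difference of t -> V(x(t)) at t = 1, compared with the chain-rule formula.
h = 1e-6
finite_diff = (V(x_of_t(x0, 1.0 + h)) - V(x_of_t(x0, 1.0 - h))) / (2.0 * h)
chain_rule = V_dot(x_of_t(x0, 1.0))

for t, v in zip(times, values):
    print(f"t = {t:4.1f}  V(x(t)) = {v:.3e}")
print(f"finite difference: {finite_diff:.6f}, chain rule: {chain_rule:.6f}")
```

The values of $V$ decrease monotonically toward zero, which matches the picture of the ball rolling down the Lyapunov function.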