In this robotics tutorial, we explain how to install and use Gym-Aloha, a Python library for simulating and visualizing the motion of robots. The library is used for developing and testing reinforcement learning algorithms. It belongs to the so-called gym (or Gymnasium) family of libraries for training reinforcement learning algorithms. As the word gym indicates, these libraries simulate the motion of robots, apply reinforcement learning actions, and return an observation and a reward for every action.

The YouTube tutorial is given below.

Installation Instructions

First of all, make sure that you have installed Anaconda on your system. To install Anaconda, follow the tutorial given here. Next, open a Linux Ubuntu terminal and type

sudo apt update && sudo apt upgrade

Then, install Git:

sudo apt install git-all

Then, clone the remote repository:

cd ~

git clone https://github.com/huggingface/gym-aloha

cd gym-aloha

Then, create and activate an Anaconda virtual environment:

conda create -y -n aloha python=3.10 && conda activate aloha

Finally, install the necessary library:

pip install gym-aloha

Next, write and execute the test code:

# example.py

import imageio

import gymnasium as gym

import numpy as np

import gym_aloha

env = gym.make("gym_aloha/AlohaInsertion-v0")

#env = gym.make("gym_aloha/AlohaTransferCube-v0")

observation, info = env.reset()

frames = []

for i in range(1000):

action = env.action_space.sample()

observation, reward, terminated, truncated, info = env.step(action)

image = env.render()

frames.append(image)

if (i % 50==0):

observation, info = env.reset()

if terminated or truncated:

observation, info = env.reset()

env.close()

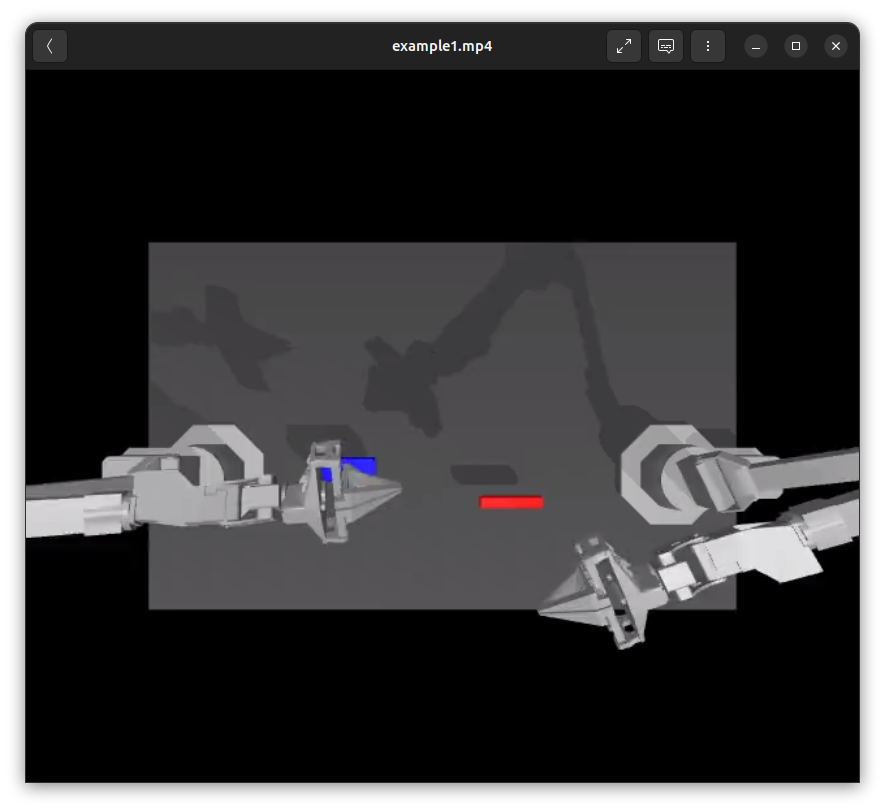

imageio.mimsave("example1.mp4", np.stack(frames), fps=25)This code will simulate random actions applied to two Aloha robots. It will record the top of the table on which two Aloha robots are mounted, and it will save the movie in the file example1.mp4.
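If the script stops with an import error, it can help to confirm that the packages it imports are visible in the active Anaconda environment. The following is a minimal sketch of such a check. The helper name `missing_packages` is hypothetical, and the module names are simply the ones imported by the script above.

```python
import importlib.util

# Module names imported by the example script above.
REQUIRED_MODULES = ["imageio", "gymnasium", "numpy", "gym_aloha"]


def missing_packages(module_names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

If any module is reported as missing, the most likely cause is that the aloha environment was not activated before running pip install or before running the script.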

This library can simulate two problems:

- The problem of transferring a cube from one robot arm to another (environment specified by “gym_aloha/AlohaTransferCube-v0”).

- The problem of inserting one part into another (environment specified by “gym_aloha/AlohaInsertion-v0”).
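Switching between the two problems only requires changing the string passed to gym.make(). One possible way to organize this is sketched below. The task labels `insertion` and `transfer_cube` and the helper names `env_id_for` and `make_env` are hypothetical, while the environment IDs are the two listed above. The libraries are imported inside `make_env`, so the lookup itself can be tried without them.

```python
# Hypothetical short labels mapped to the two environment IDs listed above.
ENV_IDS = {
    "insertion": "gym_aloha/AlohaInsertion-v0",
    "transfer_cube": "gym_aloha/AlohaTransferCube-v0",
}


def env_id_for(task):
    """Look up the environment ID for a task label, with a readable error."""
    try:
        return ENV_IDS[task]
    except KeyError:
        raise ValueError(
            f"Unknown task {task!r}; expected one of {sorted(ENV_IDS)}"
        ) from None


def make_env(task):
    """Create the environment for a task label (needs gymnasium and gym_aloha)."""
    import gymnasium as gym
    import gym_aloha  # noqa: F401  (importing registers the environments)

    return gym.make(env_id_for(task))
```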

The reinforcement learning actions are represented by a vector with 14 entries: 6 control signals for the 6 motors of the first robot, 1 control signal for the gripper of the first robot, 6 control signals for the 6 motors of the second robot, and 1 control signal for the gripper of the second robot. Each gripper entry is a value normalized between 0 (closed) and 1 (open).
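To make the layout of the action vector concrete, the sketch below assembles a 14-entry action from per-robot values in the order described above. The helper name `build_action` is hypothetical, and clipping the gripper entries to the range from 0 to 1 is an assumption of this sketch, not documented library behavior.

```python
def build_action(first_arm, first_gripper, second_arm, second_gripper):
    """Assemble a 14-entry action: 6 arm values + 1 gripper value per robot."""
    if len(first_arm) != 6 or len(second_arm) != 6:
        raise ValueError("Each arm needs exactly 6 control values.")

    def clip01(value):
        # Assumption of this sketch: gripper commands are kept within [0, 1].
        return min(1.0, max(0.0, float(value)))

    return (
        [float(v) for v in first_arm]
        + [clip01(first_gripper)]
        + [float(v) for v in second_arm]
        + [clip01(second_gripper)]
    )


action = build_action([0.0] * 6, 1.0, [0.1] * 6, 0.0)
print(len(action))  # 14
```

With NumPy available, the resulting list can be converted with np.asarray(action) before it is passed to env.step().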

The observation space consists of

- qpos and qvel: Position and velocity data for the arms and grippers.

- images: Camera feeds from different angles.

- env_state: Additional environment state information, such as positions of the peg and sockets.
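A quick way to see what an observation actually contains is to walk through it and print the shape of each entry. The sketch below assumes that the observation is a dictionary, possibly nested (for example, several camera images stored under one key). The helper name `describe_observation` is hypothetical, and the sample dictionary with plain lists only stands in for a real observation.

```python
def describe_observation(observation, prefix=""):
    """Return {path: shape or type name} for every leaf of a nested dict."""
    summary = {}
    for key, value in observation.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            summary.update(describe_observation(value, prefix=f"{path}."))
        else:
            # NumPy arrays expose .shape; other values fall back to their type name.
            shape = getattr(value, "shape", None)
            summary[path] = tuple(shape) if shape is not None else type(value).__name__
    return summary


# Stand-in data only; a real observation comes from env.reset() or env.step().
sample = {"qpos": [0.0, 0.0], "images": {"top": [[0, 0, 0]]}}
for path, description in describe_observation(sample).items():
    print(path, description)
```

Calling the same function on the observation returned by env.reset() shows which of the entries listed above are present in the chosen configuration.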

The rewards for the insertion problem are

- 1 point for touching both the peg and a socket with the grippers.

- 2 points for grasping both without dropping them.

- 3 points if the peg is aligned with and touching the socket.

- 4 points for successful insertion of the peg into the socket.

The rewards for the cube transfer problem are

- 1 point for holding the box with the right gripper.

- 2 points if the box is lifted with the right gripper.

- 3 points for transferring the box to the left gripper.

- 4 points for a successful transfer without touching the table.

When the reward of 4 points is achieved, the environment reports success.
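Since both problems use the same staged rewards with a maximum of 4 points, the progress within an episode can be summarized by the highest reward reached. The following sketch assumes that the rewards are the integer stage values listed above and that a reward of 4 means success. The helper name `summarize_rewards` is hypothetical, and the reward sequence in the example is made up.

```python
MAX_REWARD = 4  # highest stage reward listed above for both problems


def summarize_rewards(rewards):
    """Return the best stage reached and whether the success reward was seen."""
    best = max(rewards, default=0)
    return {"best_reward": best, "success": best == MAX_REWARD}


# Example with made-up per-step rewards from one episode.
print(summarize_rewards([0, 0, 1, 2, 2, 1]))  # {'best_reward': 2, 'success': False}
```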

The code presented above will generate random control actions and apply them to the robots. This is achieved with the following two lines in the for loop:

action = env.action_space.sample()
observation, reward, terminated, truncated, info = env.step(action)

The function env.step() applies the randomly generated control actions and returns observation, reward, terminated (indicating success), truncated, and info. The observation contains a top image of the scene. The image returned by env.render() is appended to the list called “frames”, and this series of images is later used to generate the video.
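The same step loop can be wrapped in a function that works with any environment following the Gymnasium reset/step interface. The sketch below is one possible arrangement, not the library's own code. The names `run_random_steps` and `StubEnv` are hypothetical, and `StubEnv` is only a tiny stand-in so that the loop can be tried without the simulator.

```python
import random


class StubEnv:
    """Tiny stand-in that mimics the Gymnasium reset/step return values."""

    class _ActionSpace:
        def sample(self):
            return [random.uniform(-1.0, 1.0) for _ in range(14)]

    def __init__(self, episode_length=5):
        self.action_space = self._ActionSpace()
        self.episode_length = episode_length
        self._t = 0

    def reset(self):
        self._t = 0
        return {"step": 0}, {}

    def step(self, action):
        self._t += 1
        truncated = self._t >= self.episode_length
        return {"step": self._t}, 0, False, truncated, {}


def run_random_steps(env, num_steps):
    """Apply random actions for num_steps; reset whenever an episode ends."""
    observation, info = env.reset()
    total_reward, episodes_finished = 0, 0
    for _ in range(num_steps):
        action = env.action_space.sample()
        observation, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        if terminated or truncated:
            episodes_finished += 1
            observation, info = env.reset()
    return total_reward, episodes_finished


print(run_random_steps(StubEnv(episode_length=5), num_steps=12))  # (0, 2)
```

With the real library installed, the same function could be called with an environment created by gym.make("gym_aloha/AlohaInsertion-v0") in place of the stub.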