- In this tutorial, we explain how to download, install, and run the Mistral Small 3 large language model (LLM) on a local computer.

- For people new to LLMs: The Mistral series of models is published by the French company Mistral AI. Mistral AI has been described as being in fourth place in the global AI race and in first place outside of the San Francisco Bay Area (it is unclear whether this ranking takes DeepSeek into account).

- Mistral Small 3 is a relatively small model that is optimized for latency. The model has “only” 24B parameters, and its quantized version is only about 14 GB in size.

- Mistral Small 3 is comparable in terms of performance with larger models such as Llama 3.3 70B and Qwen 32B.

- It is released under the Apache 2.0 license, which is a free, permissive license that allows users to use, modify, and distribute the software.

- It is an excellent low-cost replacement for GPT-4o mini.

- The performance of Mistral Small 3 is similar to Llama 3.3 70B instruct while being more than 3x faster on the same hardware.
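The size figures above can be sanity-checked with simple arithmetic. The sketch below estimates the size of the weights alone from the parameter count and the number of bits stored per weight. The value of 4.5 bits per weight is our assumption for a typical 4-bit quantization (4 bits plus some scaling metadata); it is not a number published for this model, and the function name is hypothetical.

```python
def quantized_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 24e9  # 24B parameters, as stated above

# 16-bit weights: the unquantized model.
size_fp16 = quantized_size_gb(NUM_PARAMS, 16)

# Assumed ~4.5 bits per weight for a 4-bit quantized model.
size_q4 = quantized_size_gb(NUM_PARAMS, 4.5)

print(f"16-bit weights: about {size_fp16:.1f} GB")
print(f"4-bit quantized weights: about {size_q4:.1f} GB")
```

Under these assumptions, the quantized estimate lands close to the roughly 14 GB download size mentioned above, while the 16-bit weights would be far too large for a single consumer GPU.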

The YouTube tutorial explaining how to install and run Mistral Small 3 is given below.

When to Use Mistral Small 3

- On consumer-level hardware. It works well on our NVIDIA 3090 GPU.

- When quick and accurate responses are required. Because the model is relatively small, it responds with low latency.

- Low-latency function calling – ideal for RAG, Internet agents, math reasoning, etc.

- As a base for fine-tuning. The model can be “easily” fine-tuned to create subject matter experts in fields such as medical diagnostics, technical support, and troubleshooting.

We were able to successfully run Mistral Small 3 on a desktop computer with the following specifications:

- GPU: NVIDIA 3090

- 64 GB of regular RAM

- Intel i9 processor
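As a rough check of why this hardware is sufficient, the sketch below compares the model size against the GPU memory. The NVIDIA 3090 has 24 GB of video memory. The 2 GB overhead for the context cache and runtime buffers is an assumed placeholder, not a measured value, and the function name is hypothetical.

```python
def fits_in_vram(model_gb: float, vram_gb: float, overhead_gb: float = 2.0) -> bool:
    """Return True if the model plus an assumed overhead fits in GPU memory."""
    return model_gb + overhead_gb <= vram_gb


RTX_3090_VRAM_GB = 24.0  # video memory of the NVIDIA 3090
MODEL_SIZE_GB = 14.0     # approximate size of the quantized model

if fits_in_vram(MODEL_SIZE_GB, RTX_3090_VRAM_GB):
    print("The quantized model should fit entirely in GPU memory.")
else:
    print("The model will likely be split between GPU memory and regular RAM.")
```

If the model does not fit in GPU memory, inference is usually still possible but noticeably slower, which is why the quantized version matters on consumer-level hardware.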

Installation Instructions

To install Mistral Small 3, we are going to use Ollama. The first step is to download and install Ollama: go to the official Ollama website and click on the download button to download the installation file.

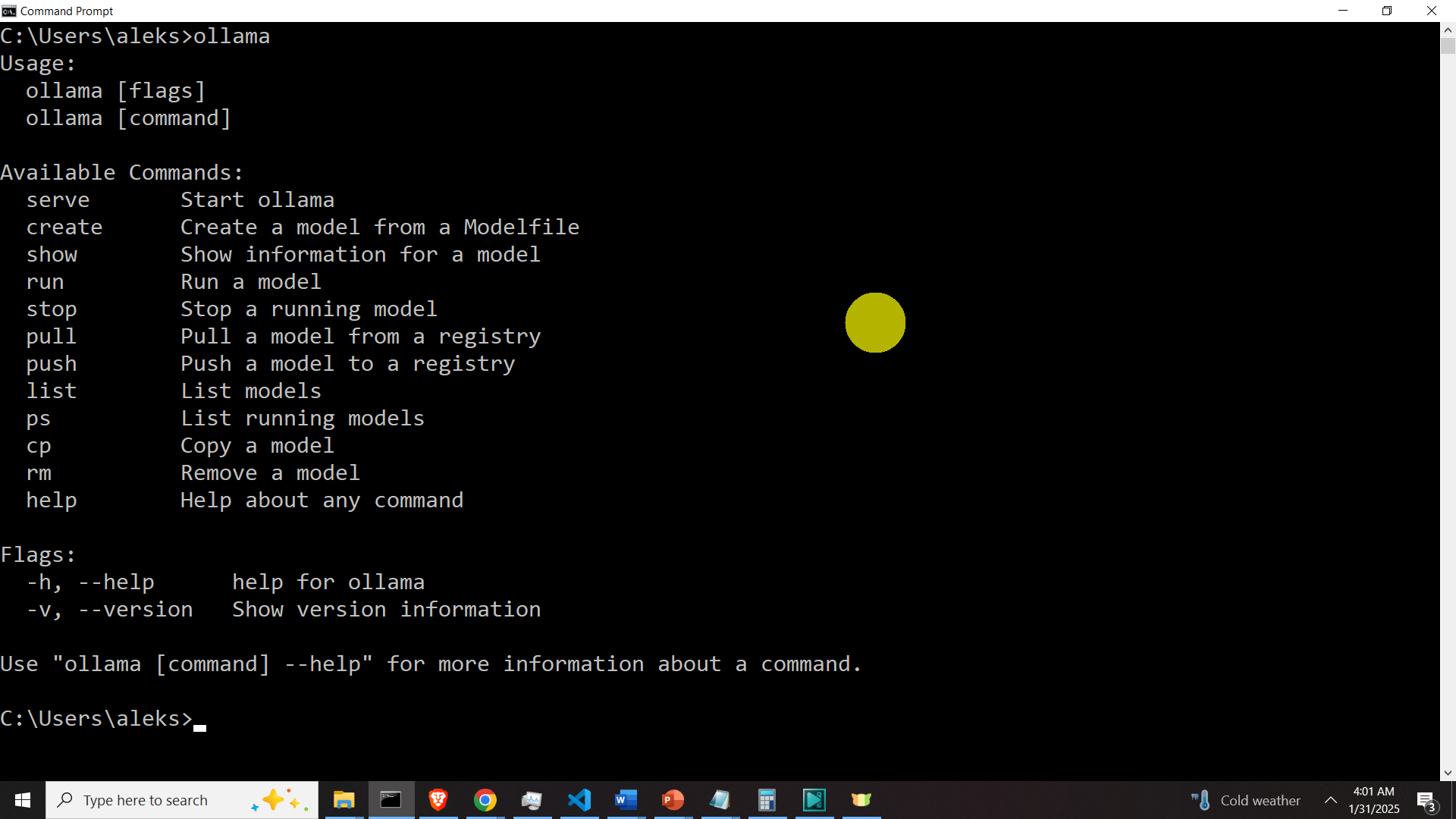

Once the file is downloaded, run it to install Ollama. After Ollama is installed, open a Command Prompt and type the following command

ollama

to verify the installation of Ollama. If Ollama is properly installed, the response should look like this

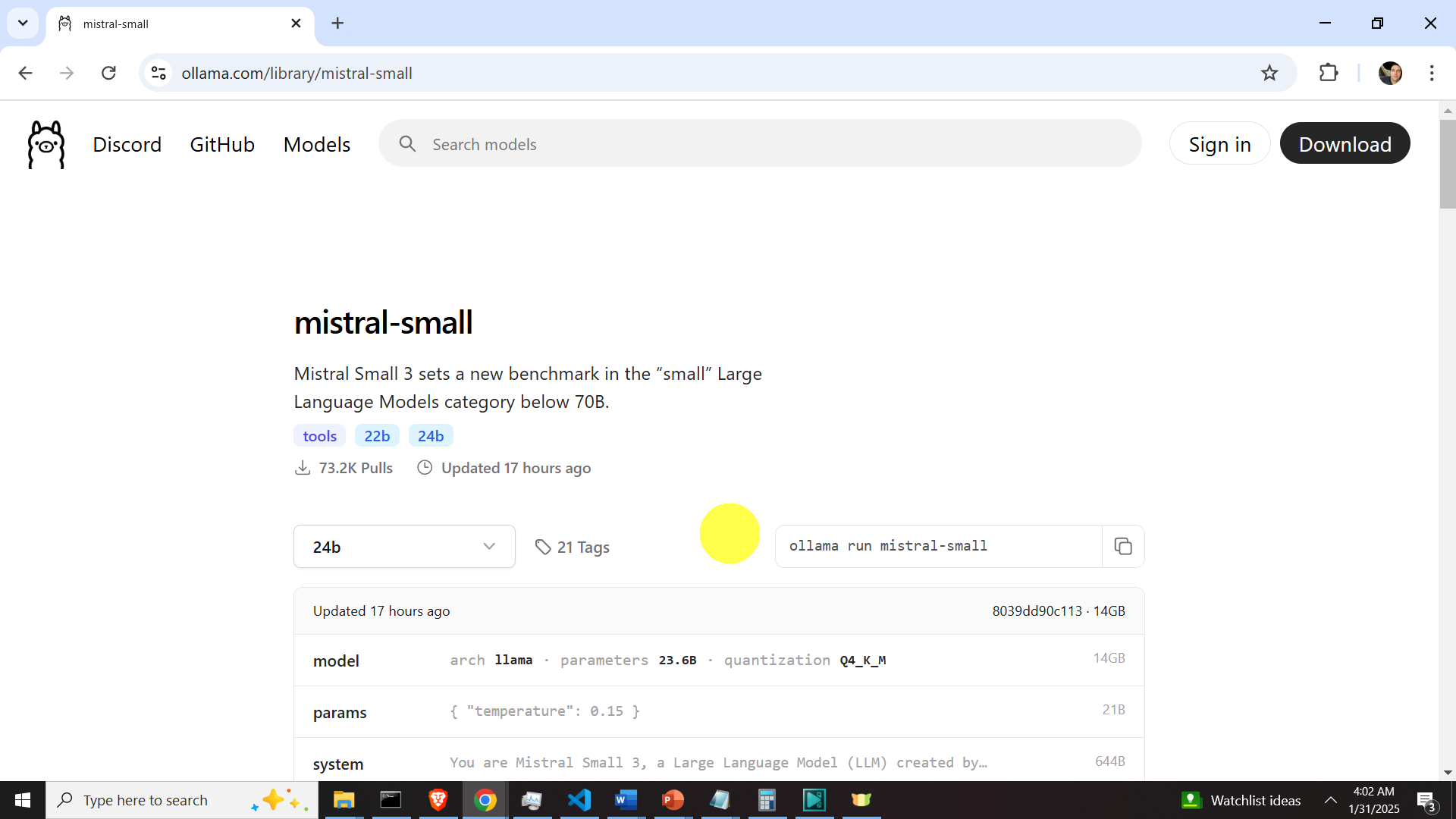
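The same check can also be scripted. The following is a minimal sketch, assuming Python is installed on the computer: it looks for the ollama executable on the system path and, if it is found, asks it for its version with the --version flag. The helper names are our own and are only for illustration.

```python
import shutil
import subprocess
from typing import Optional


def find_executable(name: str = "ollama") -> Optional[str]:
    """Return the full path of the executable, or None if it is not on the PATH."""
    return shutil.which(name)


def ollama_version(path: str) -> str:
    """Run '<path> --version' and return whatever it prints to standard output."""
    result = subprocess.run(
        [path, "--version"], capture_output=True, text=True, check=False
    )
    return result.stdout.strip()


if __name__ == "__main__":
    path = find_executable()
    if path is None:
        print("Ollama was not found on the PATH. Check the installation.")
    else:
        print(f"Found Ollama at {path}")
        print(ollama_version(path))
```

If the script reports that Ollama was not found right after installation, opening a new Command Prompt window usually helps, since the system path is read when the window is opened.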

The next step is to download and install the model. To download the model, go back to the Ollama website and search for “mistral-small” in the search menu. Then click on the model link, and the following webpage will appear

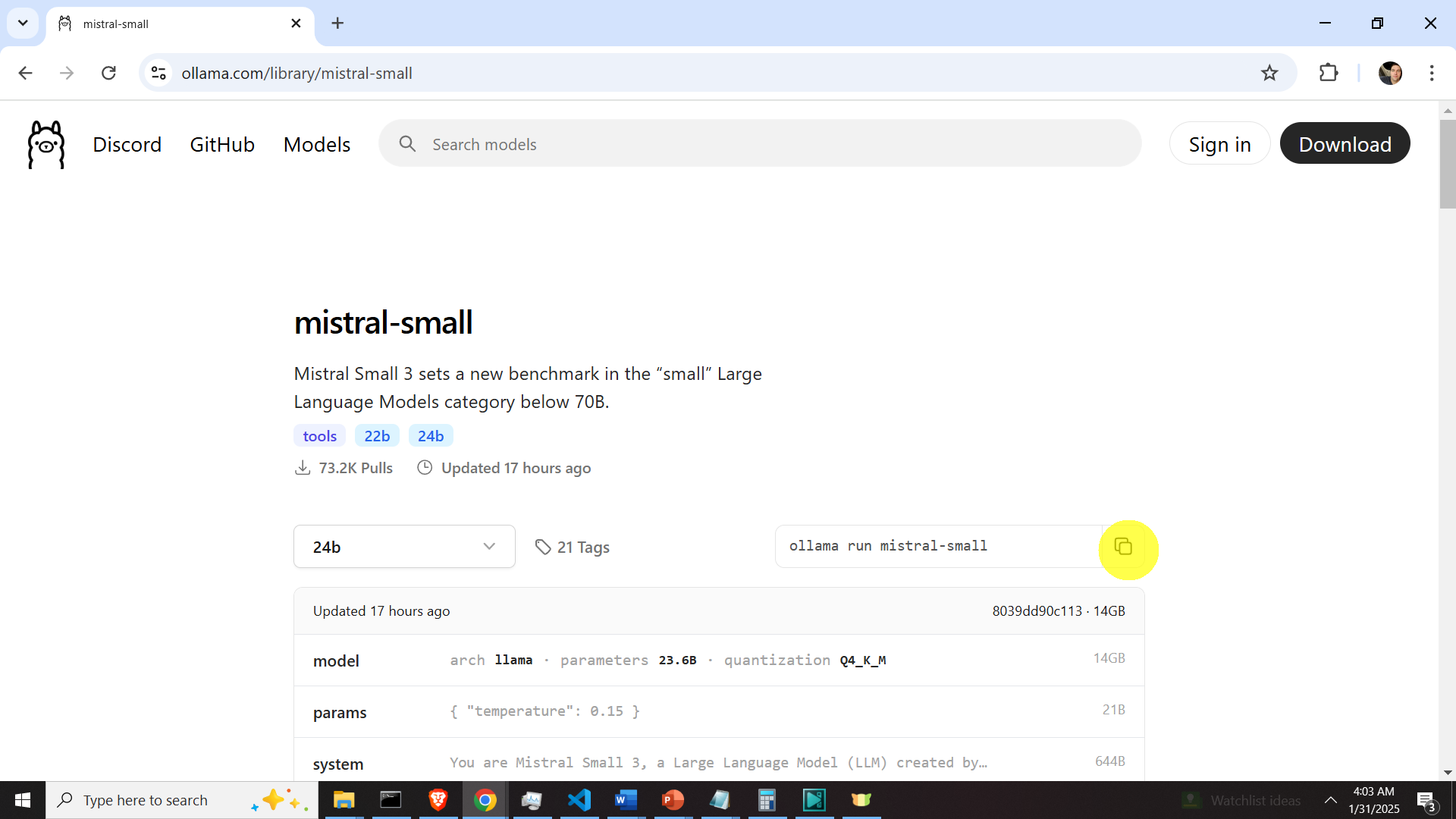

Then, copy the command for installing and running the Mistral Small 3 model as shown in the figure below.

Then, open the command prompt and execute the command

ollama run mistral-small

This command will download and install the model. After the model is installed, it will run automatically and you can start asking questions. To exit the model, press CTRL+d. If you want to run the model again, then type

ollama list

to list the exact model name. To run the model, you need to type “ollama run <model name>”, where <model name> should be replaced with the name of the model. The command for running the model should look like this

ollama run mistral-small

that is, the same command that was initially used to download the model. However, this time, since the model is already downloaded, it will only be executed.
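Besides the interactive prompt, a downloaded model can also be queried from a program. Ollama runs a local HTTP server, by default on port 11434, and exposes an /api/generate endpoint. The sketch below sends a single prompt to that endpoint using only the Python standard library. It assumes that Ollama is running with its default settings and that the model is named mistral-small, as in the commands above; the helper names and the example prompt are our own.

```python
import json
import urllib.request

# Default address of the local Ollama server (assumes default settings).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "mistral-small") -> urllib.request.Request:
    """Build a POST request asking the model for one non-streamed answer."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "mistral-small", timeout: float = 300.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    try:
        print(ask("Explain in one sentence what a large language model is."))
    except OSError as err:
        # Raised, for example, when the Ollama server is not running.
        print(f"Could not reach the Ollama server: {err}")
```

Setting "stream" to False makes the server return the whole answer in a single JSON object, which keeps the example short. For long answers, streaming the response is usually the more responsive choice.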