In this Ollama, Docker, and large language model tutorial, we explain:

- How to install Ollama by using Docker on Linux Ubuntu. That is, how to download the official Ollama Docker image and how to run Ollama as a Docker container.

- How to install NVIDIA CUDA GPU support for Ollama Docker containers.

- How to manage Ollama Docker images and containers.

- How to download and install different large language models in Ollama Docker containers.

The YouTube tutorial is given below.

How to Download and Run Ollama Docker Image and Container

The first step is to install Docker on Linux Ubuntu. To do that, follow the tutorial given here. Then, the second step is to install the NVIDIA Container Toolkit. To do that, execute these commands in the terminal:

sudo apt update && sudo apt upgrade

sudo apt install curl

curl --version

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

The following command enables the experimental packages in the repository list. This step is optional, and it needs sudo because the file is owned by root:

sudo sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list

Then, update the package list and install the toolkit:

sudo apt-get update

sudo apt-get install -y nvidia-container-toolkit

The next step is to configure Docker to use the NVIDIA runtime by using the nvidia-ctk command:

sudo nvidia-ctk runtime configure --runtime=docker

Then, restart the Docker engine so that the new runtime configuration takes effect:

sudo systemctl restart docker

The next step is to download the Ollama Docker image and start an Ollama Docker container:

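docker run -d --gpus=all -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

Here, the name of the container is “ollama”, and it is created from the official image “ollama/ollama”. The option -d runs the container in the background, --gpus=all gives it access to all GPUs, -v ollama:/root/.ollama stores the downloaded models in a Docker volume named “ollama”, and -p 11434:11434 publishes the Ollama port 11434 on the host.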
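If the container starts but models run slowly, it can help to confirm that Docker containers can reach the GPU at all. The following is a minimal sketch, not part of the original tutorial: the function name check_docker_gpu is hypothetical, and the generic ubuntu image is assumed as a throwaway test image in which the NVIDIA runtime makes nvidia-smi available.

```shell
# Sketch: check that Docker containers can reach the NVIDIA GPU.
# Assumption: "check_docker_gpu" is an illustrative name, and the plain
# "ubuntu" image is used only as a short-lived test container.

check_docker_gpu() {
  # --rm removes the test container as soon as nvidia-smi exits.
  if docker run --rm --gpus all ubuntu nvidia-smi; then
    echo "GPU is visible inside Docker containers"
  else
    echo "GPU is NOT visible; recheck the NVIDIA Container Toolkit setup" >&2
    return 1
  fi
}
```

Calling check_docker_gpu after restarting Docker should print the usual nvidia-smi table followed by the confirmation line. A failure usually points back to the nvidia-ctk configuration step or to the host driver.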

To verify that Ollama image is downloaded and that the Ollama container is running, open a new terminal and type this

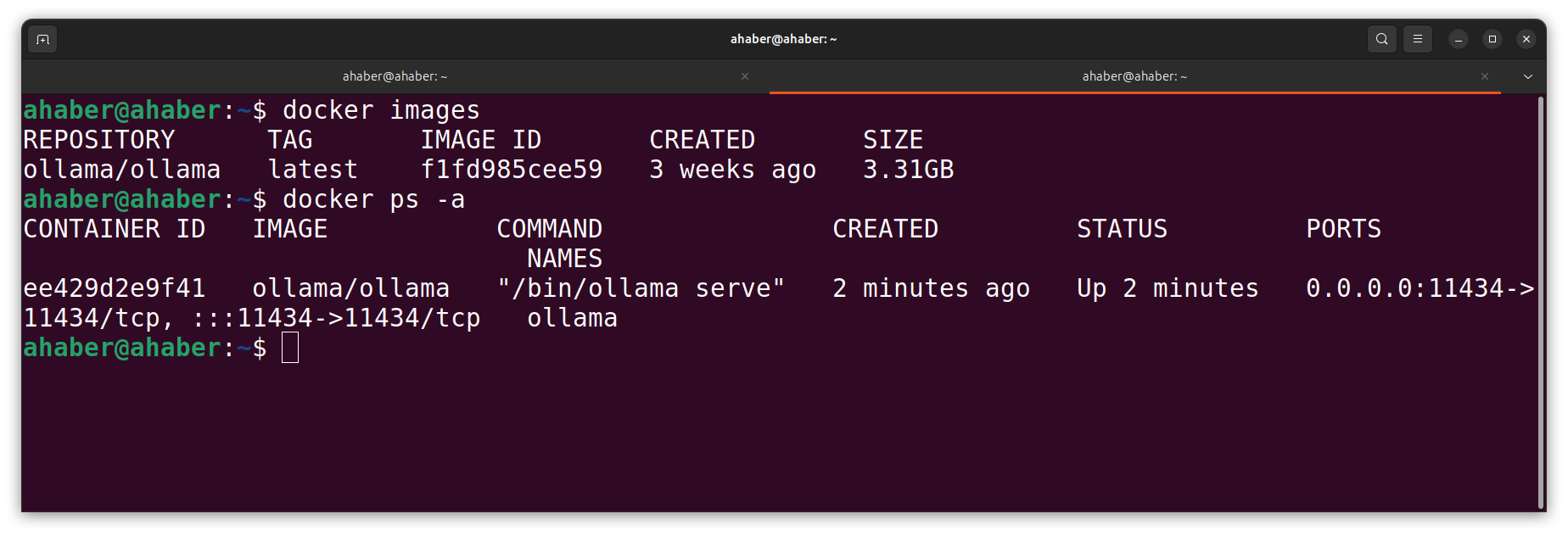

docker images

docker ps -a

If you see a response that looks similar to the response given in the figure above, this means that Ollama container is running in Docker. Next, you can verify that Ollama is running by opening a web browser and by going to the address 0.0.0.0:11434. If Ollama is running in a container the message given in the image below will appear
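The browser check can also be done from the terminal, which is convenient in scripts that must wait until the container is ready. Below is a minimal sketch under stated assumptions: the function name wait_for_ollama, the default URL, and the default retry count are illustrative choices and do not come from the tutorial.

```shell
# Sketch: poll the Ollama endpoint until it answers, or give up.
# Assumptions: "wait_for_ollama", the default URL and the default of
# 30 attempts are illustrative choices.

wait_for_ollama() {
  url="${1:-http://localhost:11434}"
  retries="${2:-30}"
  i=0
  while [ "$i" -lt "$retries" ]; do
    # -f makes curl fail on HTTP errors, -s silences the progress output.
    if curl -fs "$url" >/dev/null 2>&1; then
      echo "Ollama is reachable at $url"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "Ollama did not respond at $url after $retries attempts" >&2
  return 1
}
```

For example, wait_for_ollama http://localhost:11434 10 tries up to ten times, one second apart, and returns a non-zero status if the server never answers.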

To stop the container, you need to type this:

docker stop ollama

To start the container again, you need to type this:

docker start ollama

How to Run a Large Language Model by Using Ollama Docker Container

First of all, to execute an Ollama command inside the Docker container, we need to type this:

docker exec -it ollama <command name>

Let us explain this command. “docker exec -it” executes a command inside a running container with an interactive terminal attached. “ollama” is the name of the container, and the last argument is the command to run. For example, to list all downloaded LLMs, we need to type this:

docker exec -it ollama ollama list

Here, “ollama list” is the Ollama command used to list all downloaded models. Next, to download the Llama 3.2 model inside the Docker container, we need to type this:

docker exec -it ollama ollama pull llama3.2

Once the model is downloaded, to run it, we need to type this:

docker exec -it ollama ollama run llama3.2

And that is it! Simple as that. In the next tutorial, we will explain how to write a simple container that will install Ollama and run the large language model in a single container.
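For scripted use, the interactive session started by “ollama run” can be replaced by a one-shot prompt passed as an argument. The sketch below assumes the container is named “ollama” as in this tutorial. The function name ask_ollama is hypothetical and is not an Ollama or Docker command.

```shell
# Sketch: send a single prompt to a model in the "ollama" container.
# Assumption: "ask_ollama" is an illustrative helper name; the container
# name "ollama" matches the docker run command used in this tutorial.

ask_ollama() {
  if [ "$#" -lt 2 ]; then
    echo "usage: ask_ollama <model> <prompt>" >&2
    return 2
  fi
  model="$1"
  prompt="$2"
  # No -it here: the prompt is passed as an argument, so no interactive
  # terminal is needed and the answer is written to standard output.
  docker exec ollama ollama run "$model" "$prompt"
}
```

For example, ask_ollama llama3.2 "Explain what a Docker volume is in one sentence." prints the model's answer and returns, which makes the output easy to redirect to a file.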