In this tutorial, we explain how to install and run Microsoft’s Phi 4 LLM locally in Python. The YouTube tutorial is given below.

Why Phi 4? Fierce competition in the field of LLMs keeps producing better and better models. Phi 4 is Microsoft’s newest small LLM, specializing in complex reasoning. It “only” has 14B parameters, and as such, it can be run locally on “lower-end” hardware.

Note that in our previous tutorial given below, we explained how to run an unofficial release of Phi4 locally by using the Ollama framework.

Install and run Official Phi4 Locally in Python

The first step is to install Microsoft Visual Studio C++. Go to the website

https://visualstudio.microsoft.com/vs/features/cplusplus

and download and install it. Then, download and install the NVIDIA CUDA Toolkit:

https://developer.nvidia.com/cuda-toolkit

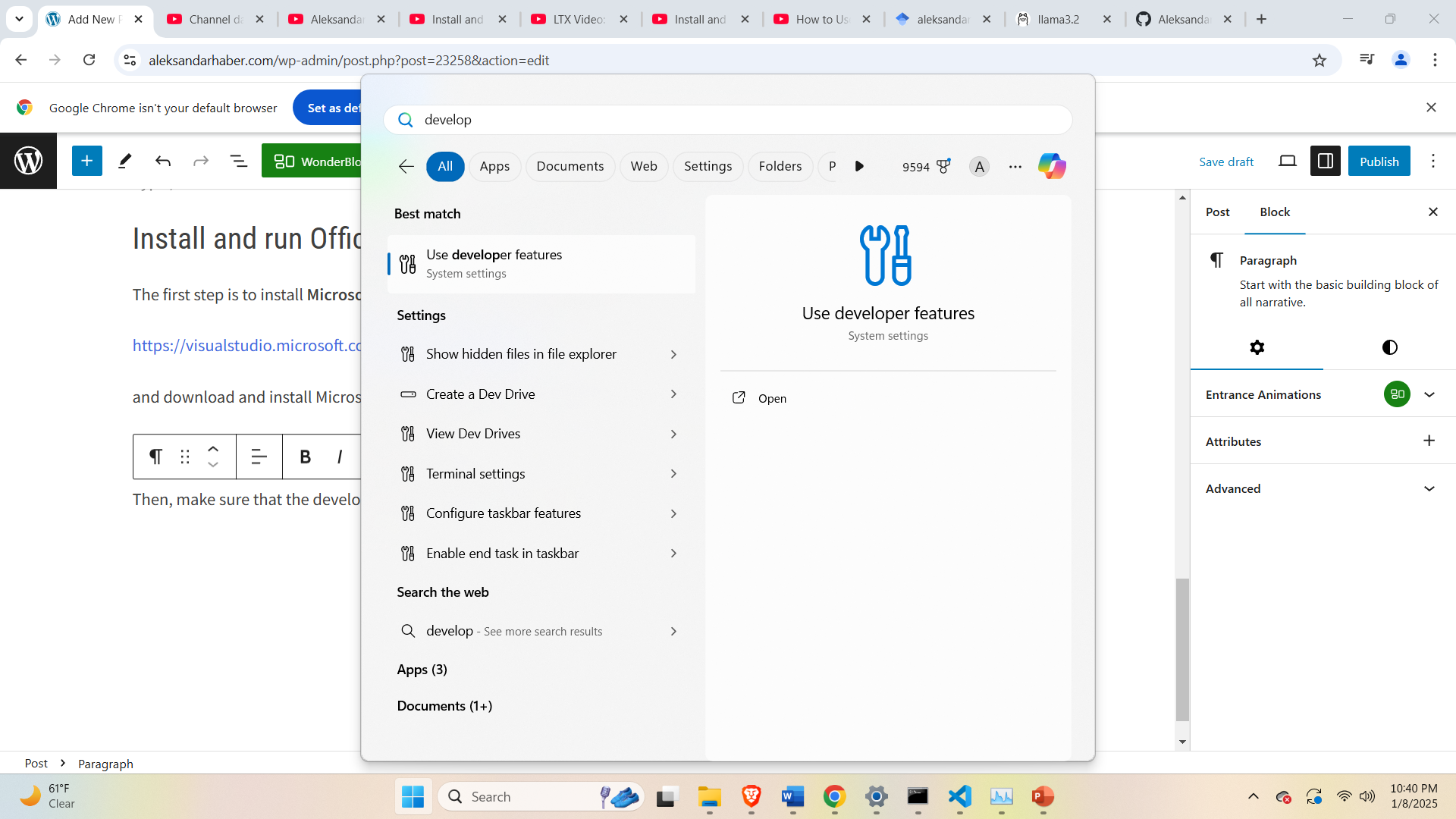

Then, make sure that the developer tools are activated in Windows 11. To do that, click on Start and search for “Use developer features”

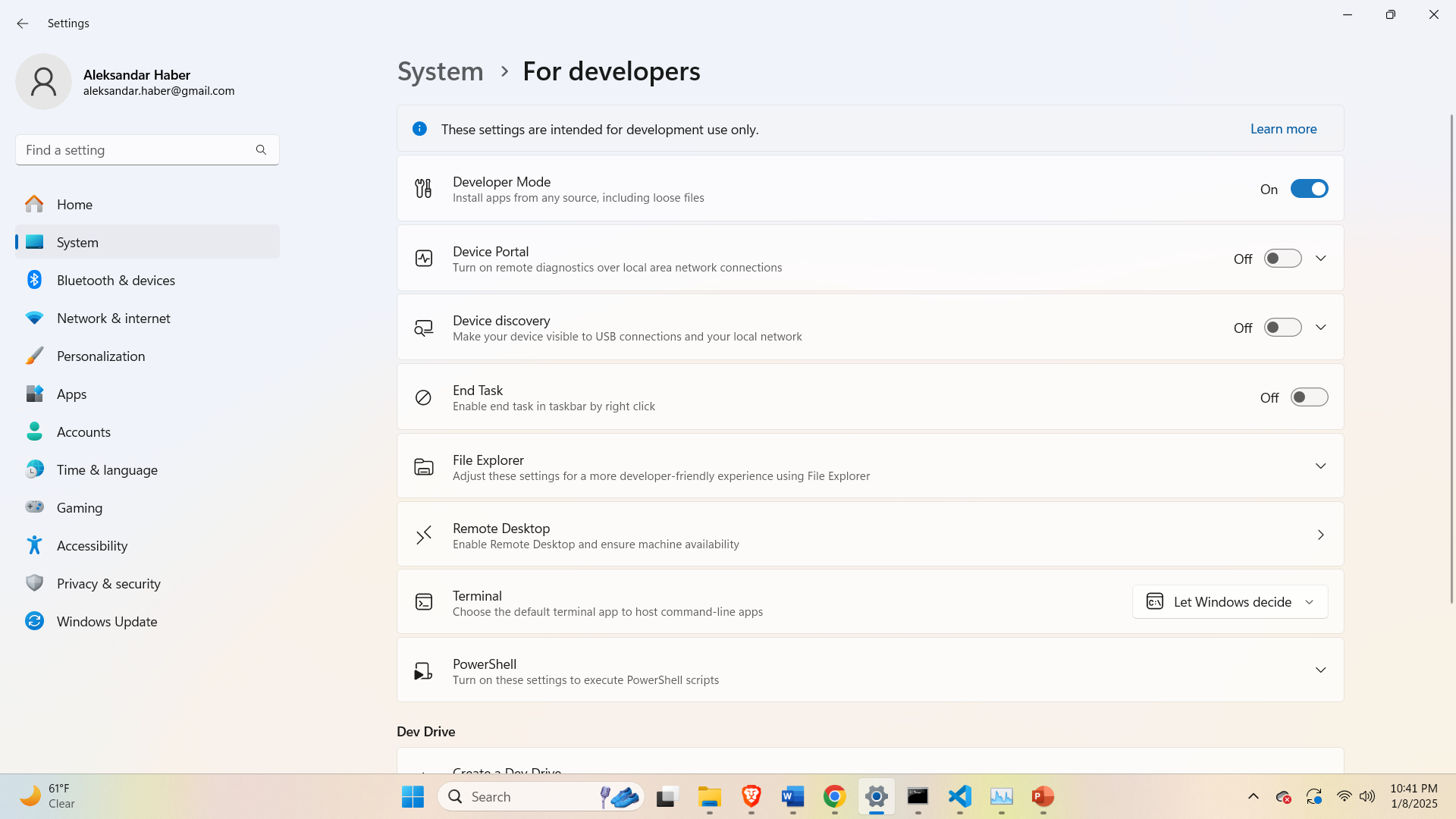

Then, activate the developer tools by clicking on “Developer Mode” as shown in the figure below.

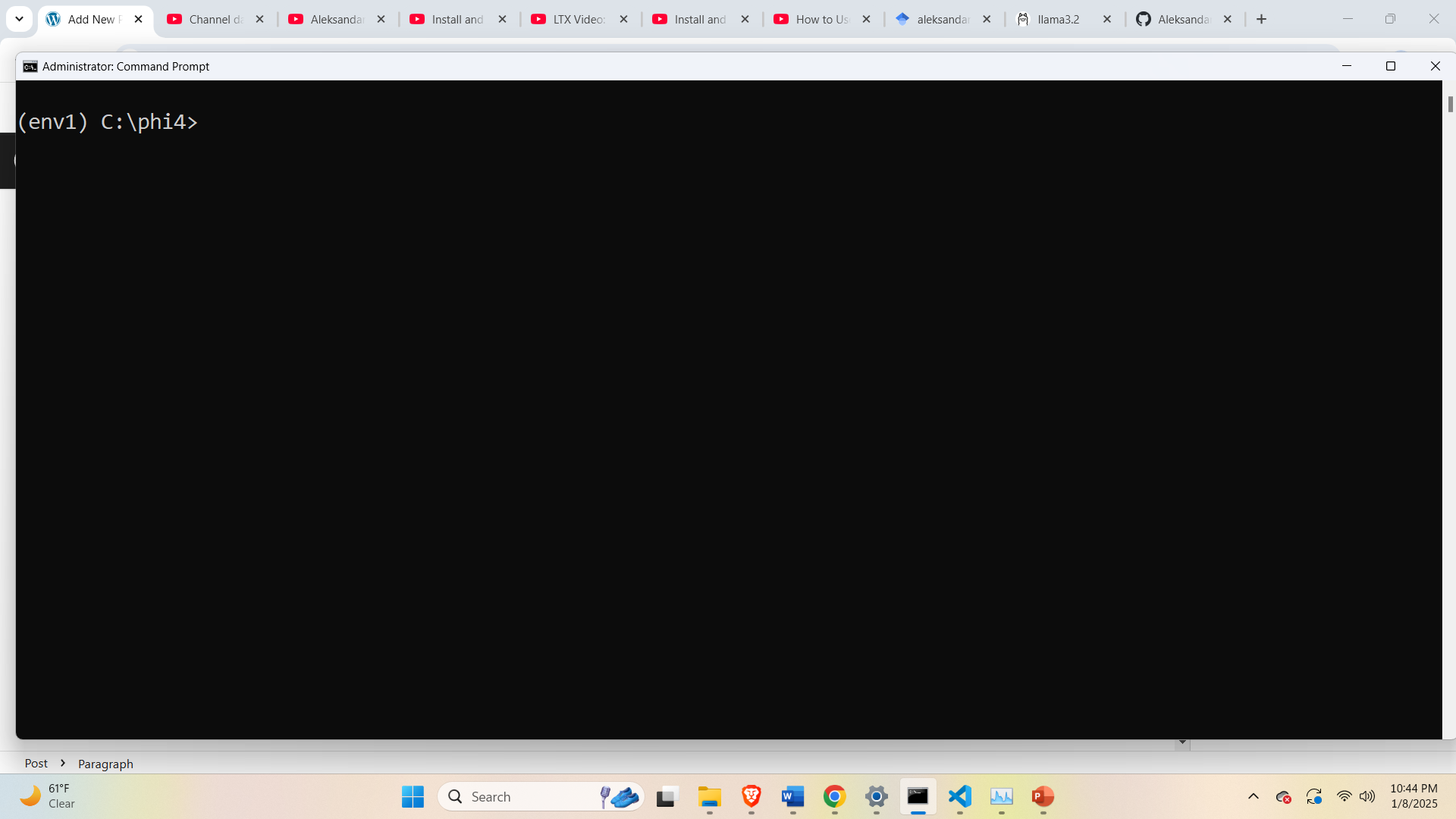

The next step is to create a workspace folder and a Python virtual environment. To do that, open a Command Prompt as administrator, and type

cd\

mkdir phi4

cd phi4

python -m venv env1

env1\Scripts\activate.bat

As a result, the Python virtual environment will be created and activated.
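To confirm that the environment is really active, one option is to ask the Python interpreter itself. The following is a minimal sketch; the helper name `in_virtualenv` is our own and is not part of any library.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment."""
    # Inside a venv, sys.prefix points at the environment folder,
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


# Show which interpreter is running and whether it lives in a virtual environment
print("Interpreter:", sys.executable)
print("Inside a virtual environment:", in_virtualenv())
```

If the environment is active, the interpreter path should point inside the env1 folder.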

Then, we need to install the necessary libraries:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

pip install huggingface-hub

pip install transformers

pip install accelerate
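Once the pip commands above have finished, a short script can confirm that the packages are visible from the virtual environment and whether PyTorch detects a CUDA-capable GPU. This is only a sketch: the helper names `installed_versions` and `cuda_status` are illustrative, and the package names are the ones used in the pip commands.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def cuda_status():
    """Describe whether PyTorch is installed and whether it can see a CUDA GPU."""
    try:
        import torch
    except ImportError:
        return "torch is not installed"
    if torch.cuda.is_available():
        return f"CUDA available: {torch.cuda.get_device_name(0)}"
    return "torch is installed, but CUDA is not available"


print(installed_versions(["torch", "huggingface-hub", "transformers", "accelerate"]))
print(cuda_status())
```

A value of None for a package, or a message that CUDA is not available, suggests that the corresponding installation step should be repeated inside the activated environment.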

Of the pip commands above, the first installs PyTorch, and the remaining three install the huggingface-hub, transformers, and accelerate packages. Note that the pip command for installing PyTorch is generated by going to the official PyTorch website:

https://pytorch.org/get-started/locally

and by using the selection table to generate the command. The next step is to download the model files from the official Hugging Face repository:

https://huggingface.co/microsoft/phi-4/tree/main

To do that, open your favorite Python editor, and type and execute the following code

from huggingface_hub import snapshot_download

snapshot_download(repo_id="microsoft/phi-4",
                  local_dir="C:\\phi4")

This code will download all the files and folders from the remote repository and store them in the local directory. When executing this Python file, make sure that it is run with the Python interpreter from the previously created virtual environment.
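After the download finishes, a quick look at the target folder can confirm that the model files actually arrived. The sketch below assumes that the repository contains a config.json file and stores its weights as .safetensors files in the top-level folder; the helper name `summarize_model_dir` is hypothetical.

```python
from pathlib import Path


def summarize_model_dir(model_dir):
    """Report whether a config file and weight files are present in model_dir."""
    root = Path(model_dir)
    # Assumption: the weights are stored as *.safetensors files in the top-level folder
    weight_files = sorted(root.glob("*.safetensors")) if root.is_dir() else []
    total_bytes = sum(f.stat().st_size for f in weight_files)
    return {
        "has_config": (root / "config.json").is_file(),
        "weight_files": len(weight_files),
        "weights_gib": round(total_bytes / 1024**3, 2),
    }


print(summarize_model_dir("C:\\phi4"))
```

If has_config is False or no weight files are found, the download was probably interrupted and can simply be run again.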

Once everything is downloaded, create a new Python script that will test the Phi4 model. The script is given below.

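import transformers

# Local folder that holds the downloaded model files
model_id = "C:\\phi4"

# Build a text-generation pipeline and place the model on the GPU
pipeline = transformers.pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": "auto"},
    device_map="cuda",
)

# Chat-style prompt: a system message followed by the user question
messages = [
    {"role": "system", "content": "You are a funny teacher trying to make lectures as interesting as possible and you give real-life examples"},
    {"role": "user", "content": "How to explain gravity to high-school students?"},
]

outputs = pipeline(messages, max_new_tokens=128)

# The last entry of the generated conversation is the assistant's reply
print(outputs[0]["generated_text"][-1])

This code creates a model pipeline, loads the model, defines the system prompt and the question, runs the model, and prints the output. The answer is given below. Note that it stops mid-sentence because max_new_tokens=128 caps the length of the reply.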

{'role': 'assistant', 'content': 'Alright, class, gather around! Today, we\'re diving into the mysterious and mind-bending world of gravity. Now, I know what you\'re thinking: "Gravity? Isn\'t that just why we don\'t float away into space?" Well, yes, but there\'s so much more to it! Let\'s break it down with some real-life examples that\'ll make your heads spin—figuratively, of course, because gravity keeps them attached to your bodies!\n\n### 1. **Gravity: The Cosmic Glue**\n\nImagine you\'re at a party, and there\'s this one person who\'s so magnetic that everyone just gravitates'}
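The printed result is a dictionary with a role and a content field. To work with the reply text only, the content field can be extracted from the last message. The sketch below uses a small stand-in for the pipeline output, with the same shape as the result above, so that it runs without loading the model; the helper name `last_assistant_text` is our own.

```python
def last_assistant_text(outputs):
    """Return the text of the final message in the first generated conversation."""
    # outputs[0]["generated_text"] is a list of {"role": ..., "content": ...} dictionaries
    conversation = outputs[0]["generated_text"]
    final_message = conversation[-1]
    return final_message["content"]


# Stand-in data with the same structure as the pipeline output
sample_outputs = [
    {
        "generated_text": [
            {"role": "user", "content": "How to explain gravity to high-school students?"},
            {"role": "assistant", "content": "Alright, class, gather around!"},
        ]
    }
]

print(last_assistant_text(sample_outputs))
```

With the real pipeline, the same helper can be called directly on the outputs variable from the test script.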