In this tutorial, we explain how to install and run the QwQ-32B Large Language Model (LLM) locally on Ubuntu Linux. QwQ-32B is an LLM published by Alibaba. Its reported performance is similar to that of DeepSeek 671B. However, QwQ-32B has far fewer parameters (32 billion) than DeepSeek 671B, so it can be run on a local computer. We were able to run QwQ-32B on a computer with 64 GB of RAM and an NVIDIA 3090 GPU. The YouTube tutorial explaining the installation steps is given below.

Install QwQ-32B on a Local Computer

The first step is to install curl so we can install Ollama. To do that, open a terminal and type

sudo apt update && sudo apt upgrade

sudo apt install curl

curl --version

To install Ollama, open a terminal and type

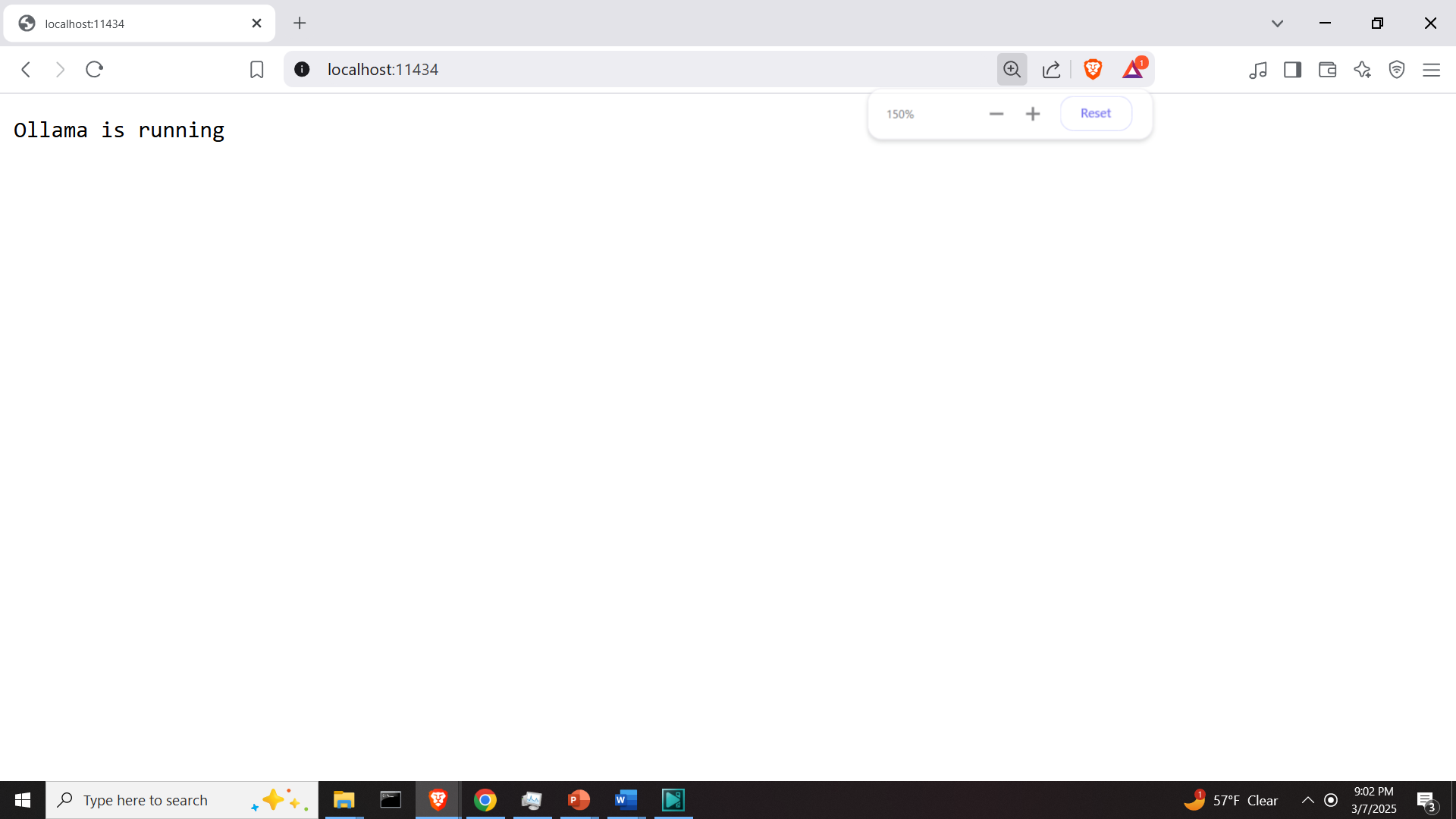

curl -fsSL https://ollama.com/install.sh | sh

After this command finishes, open a web browser and enter the following local address:

http://localhost:11434/

If Ollama is properly installed, you should see the message “Ollama is running”.
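The same check can be done from the terminal instead of the browser. The following is a minimal sketch, assuming Ollama listens on the default address shown above; the names `OLLAMA_URL`, `has_ollama_banner`, and `ollama_is_up` are chosen for this example and are not part of Ollama.

```shell
#!/usr/bin/env bash
# Check whether the Ollama server answers on its local address.
# OLLAMA_URL, has_ollama_banner and ollama_is_up are illustrative names.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434/}"

# Succeeds only if the given text contains the expected banner.
has_ollama_banner() {
    case "$1" in
        *"Ollama is running"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Fetches the root page and checks it for the banner.
ollama_is_up() {
    local body
    # --max-time keeps the check from hanging if nothing is listening
    body="$(curl -fsS --max-time 5 "$OLLAMA_URL" 2>/dev/null)" || return 1
    has_ollama_banner "$body"
}

if ollama_is_up; then
    echo "Ollama is running at $OLLAMA_URL"
else
    echo "Ollama did not respond at $OLLAMA_URL"
fi
```

If the second message appears, rerunning the install command or checking that the Ollama service is started is a reasonable first step.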

The next step is to download the model. In the terminal, type

ollama pull qwq

This will download the model. Next, we need to install Open WebUI, which provides a GUI for interacting with the model in a user-friendly manner.
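Before moving on to Open WebUI, it can help to confirm that the download succeeded and that the model answers a prompt. The sketch below uses the `ollama list` and `ollama run` commands; the helper `model_in_list` and the test prompt are assumptions made for this example.

```shell
#!/usr/bin/env bash
# Confirm that the qwq model is present locally, then send one test prompt.
# MODEL and model_in_list are illustrative names.
MODEL="qwq"

# Succeeds if model name $1 starts a line of the `ollama list` output in $2.
model_in_list() {
    printf '%s\n' "$2" | grep -Eq "^$1(:|[[:space:]])"
}

if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama command not found"
elif model_in_list "$MODEL" "$(ollama list 2>/dev/null)"; then
    echo "$MODEL is available locally"
    # One-shot prompt; the first run loads the model and can take a while
    ollama run "$MODEL" "Reply in one short sentence: what is 2 + 2?"
else
    echo "$MODEL not found; run: ollama pull $MODEL"
fi
```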

Open a terminal and type

cd ~

mkdir testWebUI

cd testWebUI

These commands create the workspace folder for the Open WebUI interface. Next, create and activate the Python virtual environment:

sudo apt install python3.12-venv

python3 -m venv env1

source env1/bin/activate

The next step is to install Open WebUI and run it:

pip install open-webui
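To confirm that the package landed inside the env1 virtual environment rather than the system Python, the following sketch compares the install location reported by `pip show` with the `VIRTUAL_ENV` variable that the activate script sets. The helper name `path_is_inside` is an assumption made for this example.

```shell
#!/usr/bin/env bash
# Confirm that open-webui was installed into the active virtual environment.
# path_is_inside is an illustrative helper name.

# Succeeds if path $1 lies under directory $2.
path_is_inside() {
    case "$1" in
        "$2"/*) return 0 ;;
        *) return 1 ;;
    esac
}

if [ -z "${VIRTUAL_ENV:-}" ]; then
    echo "No virtual environment is active; run: source env1/bin/activate"
else
    # `pip show` prints package metadata, including a "Location:" line
    location="$(pip show open-webui 2>/dev/null | sed -n 's/^Location: //p')" || location=""
    if [ -z "$location" ]; then
        echo "open-webui is not installed in this environment"
    elif path_is_inside "$location" "$VIRTUAL_ENV"; then
        echo "open-webui is installed in $VIRTUAL_ENV"
    else
        echo "open-webui was found outside the venv: $location"
    fi
fi
```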

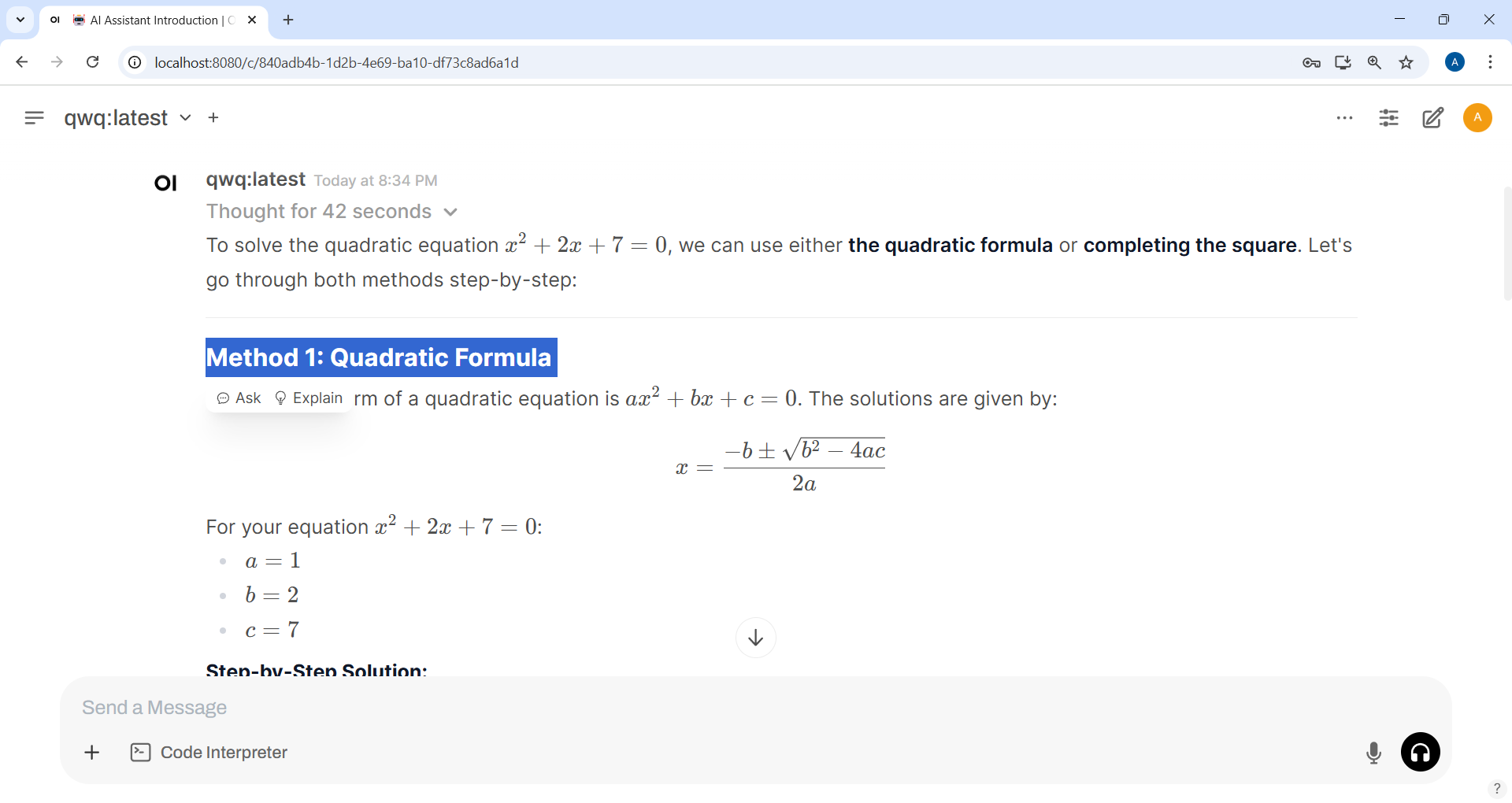

open-webui serve

To access Open WebUI, open a web browser and enter the following address

http://localhost:8080

This should open the interface for communicating with the QwQ-32B model. The model should be recognized automatically by Open WebUI. If it is not, select it from the model menu in the upper-left corner of Open WebUI.
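If the model does not appear in that menu either, one way to narrow the problem down is to ask the Ollama server which models it reports. The sketch below queries Ollama's `/api/tags` endpoint, assuming Ollama runs on its default address and that Open WebUI is pointed at it; `TAGS_URL` and `tags_has_model` are names chosen for this example.

```shell
#!/usr/bin/env bash
# Ask the local Ollama server which models it reports and look for qwq.
# TAGS_URL and tags_has_model are illustrative names.
TAGS_URL="${TAGS_URL:-http://localhost:11434/api/tags}"

# Succeeds if JSON text $1 lists a model named $2 or "$2:<tag>".
tags_has_model() {
    printf '%s\n' "$1" | grep -Eq "\"name\"[[:space:]]*:[[:space:]]*\"$2(:|\")"
}

# --max-time keeps the request from hanging if nothing is listening
tags_json="$(curl -fsS --max-time 5 "$TAGS_URL" 2>/dev/null)" || tags_json=""

if [ -z "$tags_json" ]; then
    echo "No response from $TAGS_URL; is Ollama running?"
elif tags_has_model "$tags_json" "qwq"; then
    echo "Ollama reports qwq; select it from the model menu in Open WebUI"
else
    echo "Ollama is running but does not list qwq; run: ollama pull qwq"
fi
```

The pattern match is a deliberately simple text search rather than a full JSON parse, which keeps the sketch free of extra dependencies.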