In our previous tutorial on DeepSeek-R1, we explained how to install and run DeepSeek-R1 locally by using Ollama and a terminal window. Running DeepSeek and similar Large Language Models (LLMs) with Ollama in a terminal is a first step toward building an AI application. You first test the model to see whether it runs on your hardware, and then, in the next step, you embed the model in your application. For that purpose, you would most likely use Python or a similar language that enables rapid prototyping.

However, some users would just like to run DeepSeek-R1 locally with a graphical user interface (GUI). There are several approaches for running distilled versions of DeepSeek in a GUI. The first approach, which we covered in the previous tutorial, is to write your own GUI by using Streamlit. This approach is meant for more advanced users who want to build their own AI application. There is also a simpler approach for users who only want to use the model directly and do not need to embed it in another application: Open WebUI, which lets you run models securely and locally. In this tutorial, we explain a step-by-step procedure for installing and running distilled versions of DeepSeek by using Open WebUI on Windows. Open WebUI is a relatively simple framework and GUI for running models locally. The YouTube tutorial is given below.

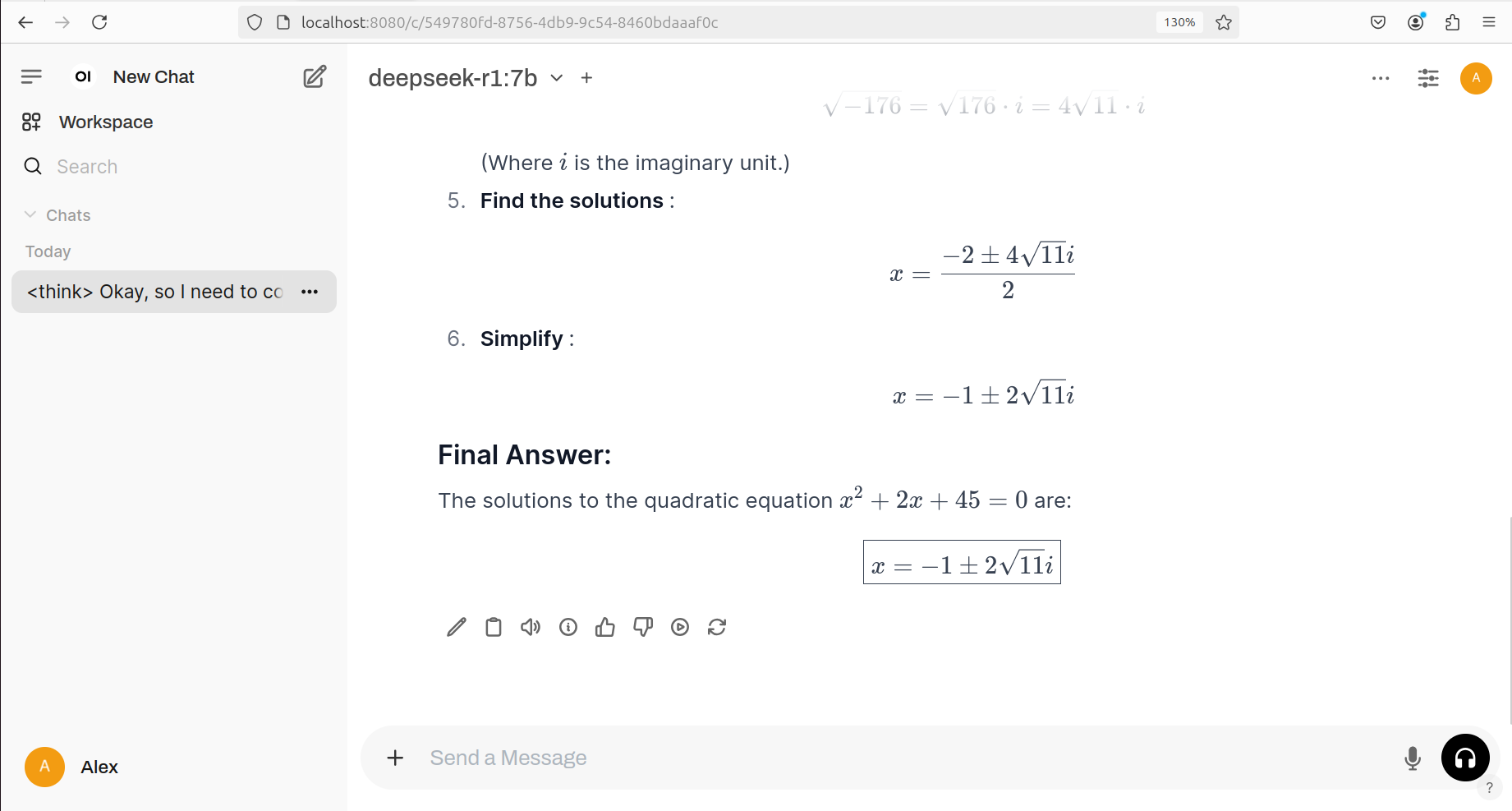

The Open WebUI GUI running the DeepSeek-R1 7B model is shown in the figure below.

Installation Procedure

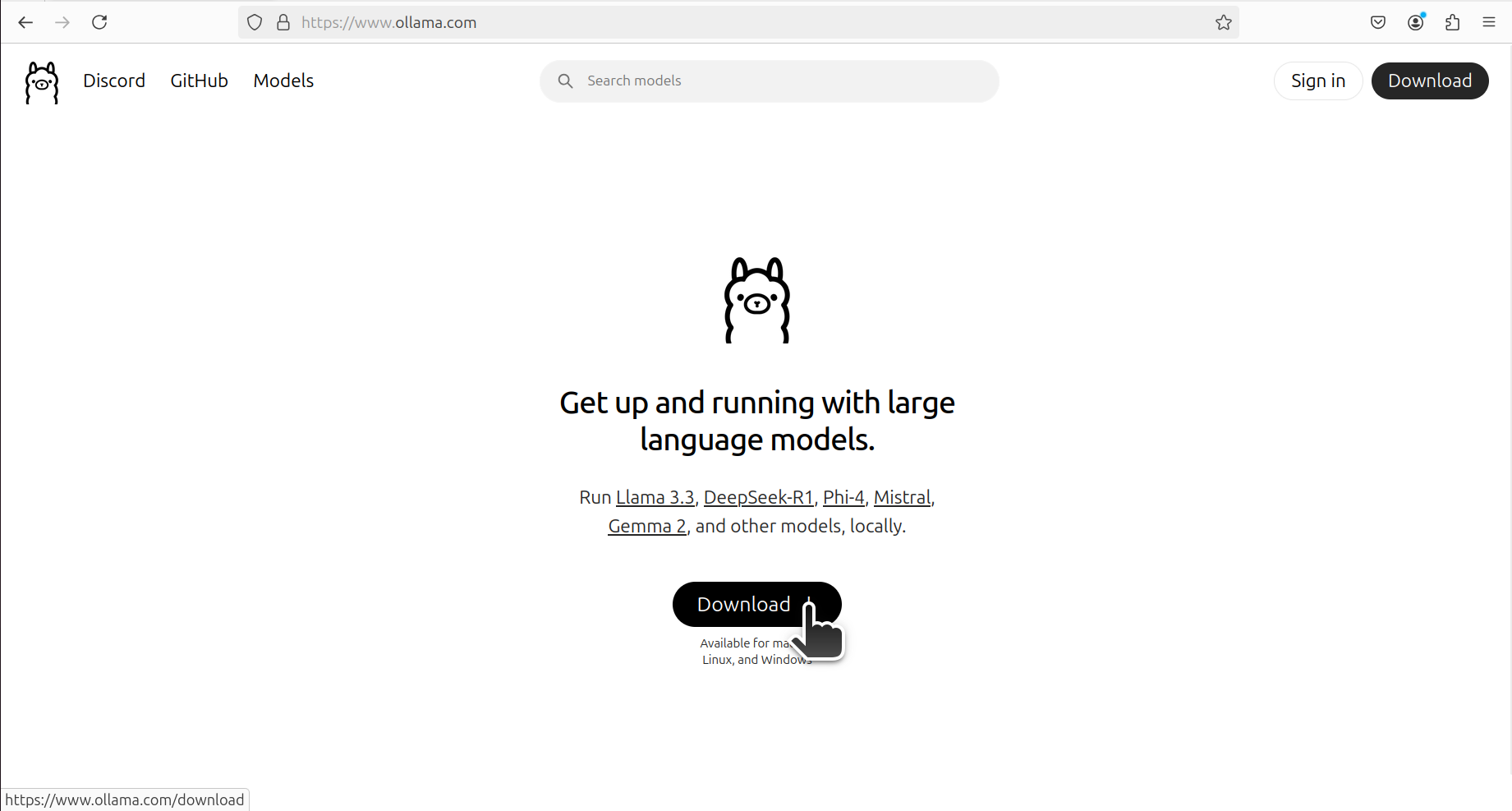

The first step is to install Ollama. To do that, go to the Ollama website (https://ollama.com).

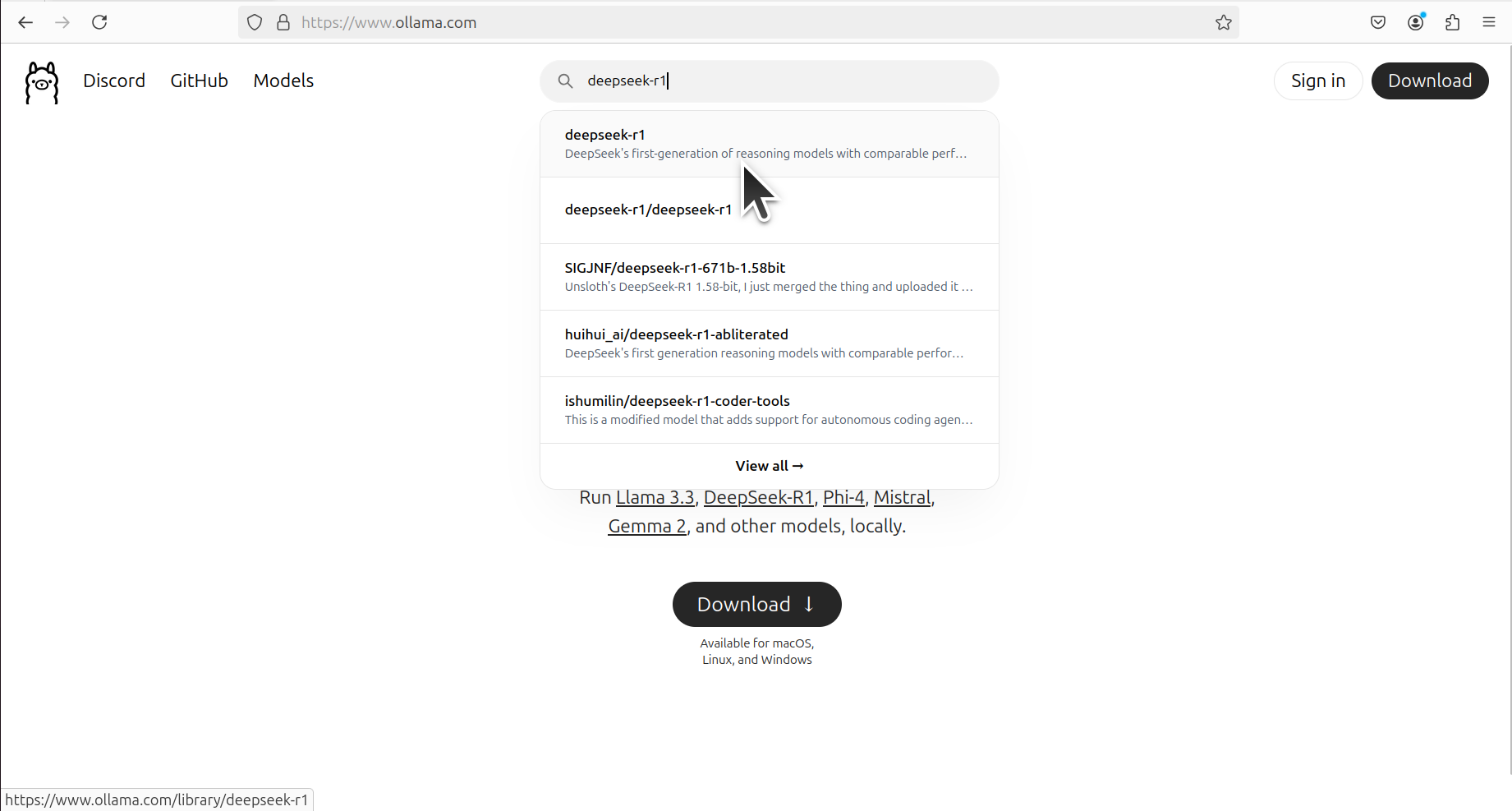

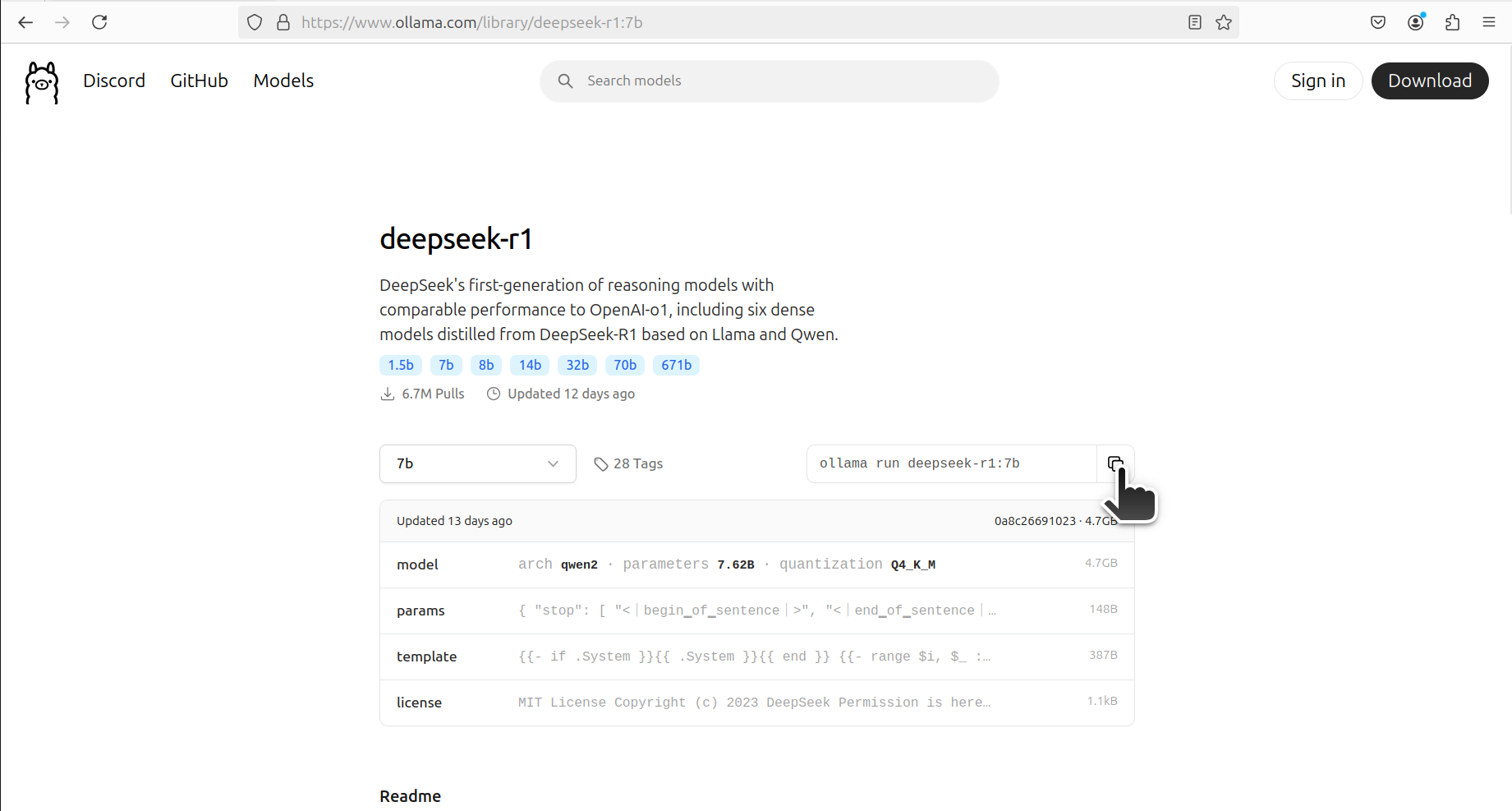

Click Download and download the installation file. Run the installation file to install Ollama. Once Ollama is installed, we need to install the model. To do that, search for the deepseek-r1 model on the Ollama website.
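To confirm that the installer made the `ollama` command available, you can type `ollama --version` in a new terminal. The same check can be scripted in Python if you later automate the setup. The sketch below assumes only that the installer put `ollama` on your PATH. The function name `ollama_version` is our own hypothetical helper and is not part of any library.

```python
# Minimal sketch: check from Python that the Ollama CLI is installed and on PATH.
# Assumption: the installer added `ollama` to PATH; `ollama_version` is a hypothetical helper name.
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Return the text printed by `ollama --version`, or None if `ollama` is not on PATH."""
    exe = shutil.which("ollama")  # full path to the executable, or None if not found
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, timeout=30)
    # Combine both streams so the check does not depend on where the CLI prints its output.
    return (result.stdout + result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version is not None else "ollama was not found on PATH")
```

If the script reports that `ollama` was not found right after installation, open a new terminal so that it picks up the updated PATH.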

Click on the model, select the 7B model from the drop-down menu, and copy the command. The command is

ollama run deepseek-r1:7b

Change this command to

ollama pull deepseek-r1:7b

Execute this command to download the model. Once the model is downloaded, you can test it by typing

ollama run deepseek-r1:7b

To exit the model, press CTRL+D.
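The end goal is often to embed the model in an application. For that, it helps to know that Ollama also exposes a local REST API, by default at `http://localhost:11434`. The following minimal sketch uses only the Python standard library and assumes the default port and the `deepseek-r1:7b` model pulled above. It lists the installed models with `GET /api/tags` and sends a single prompt with `POST /api/generate`, with streaming disabled. The helper names (`installed_models`, `build_generate_payload`, `list_models`, `generate`) are hypothetical.

```python
# Minimal sketch: talk to the local Ollama server from Python using only the standard library.
# Assumptions: Ollama listens on its default address http://localhost:11434 and
# `deepseek-r1:7b` has been pulled. Helper names below are hypothetical.
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address
MODEL = "deepseek-r1:7b"


def installed_models(tags_response: dict) -> List[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def build_generate_payload(model: str, prompt: str) -> bytes:
    """Build the JSON body for POST /api/generate, asking for a single non-streamed reply."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def list_models(base_url: str = OLLAMA_URL) -> List[str]:
    """Return the names of the models installed on the Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return installed_models(json.load(resp))


def generate(prompt: str, model: str = MODEL, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt and return the model's full text reply."""
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=build_generate_payload(model, prompt),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    # A 7B model on modest hardware can take a while, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        models = list_models()
    except OSError as exc:  # URLError (e.g. connection refused) is a subclass of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
    else:
        print("Installed models:", models)
        if MODEL in models:
            print(generate("In one sentence, what is a distilled model?"))
```

The Ollama server must be running for this to work. The Windows app usually keeps it running in the background; otherwise, `ollama serve` starts it.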

Next, we create a workspace folder and a Python virtual environment, install the library, and run the Open WebUI GUI. Note that to properly install Open WebUI, you need either Python 3.11 or Python 3.12. With that in place, type the following commands:

cd\

mkdir testWebUI

cd testWebUI

python -m venv env1

env1\Scripts\activate.bat

pip install open-webui
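The command `python -m venv env1` builds the environment from whichever interpreter `python` refers to, so the virtual environment is only on Python 3.11 or 3.12 if that interpreter is. One quick check, once `env1` is activated, is the small script below. The file name `check_python.py` and the function `is_supported` are hypothetical names used only for illustration.

```python
# check_python.py (hypothetical file name): confirm the interpreter version
# before installing Open WebUI. This tutorial uses Python 3.11 or 3.12.
import sys
from typing import Optional, Sequence

SUPPORTED = {(3, 11), (3, 12)}  # versions named in this tutorial


def is_supported(version_info: Optional[Sequence[int]] = None) -> bool:
    """Return True if the (major, minor) part of the version is in SUPPORTED."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) in SUPPORTED


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    print(f"Python {major}.{minor} at {sys.executable}")
    if not is_supported():
        print("Warning: this tutorial installs open-webui with Python 3.11 or 3.12.")
```

Run it with `python check_python.py` inside the activated environment. If it prints the warning, recreate `env1` with a 3.11 or 3.12 interpreter. On Windows, the `py` launcher (for example, `py -3.12 -m venv env1`) is one way to select the interpreter. Once the version is confirmed, start the server with `open-webui serve`.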

open-webui serve

This command starts the Open WebUI server. To open the GUI, enter the following address in your web browser:

http://localhost:8080

For more details on how to start the model, see the YouTube tutorial. The GUI is shown in the figure below.
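If the browser cannot connect right away, the server may still be starting. The sketch below polls the address until it responds. It assumes the default port 8080 used above, and `is_up` and `wait_until_up` are hypothetical helper names. Run it from a second terminal while `open-webui serve` is running.

```python
# Minimal sketch: wait until the Open WebUI server answers on http://localhost:8080.
# Assumptions: `open-webui serve` runs with its default port; helper names are hypothetical.
import time
import urllib.error
import urllib.request


def is_up(url: str, timeout: float = 2.0) -> bool:
    """Return True if an HTTP request to `url` gets any response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, even if with an error status
    except OSError:
        return False  # connection refused, timeout, name resolution failure, ...


def wait_until_up(url: str, attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll `url` up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if is_up(url):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


if __name__ == "__main__":
    url = "http://localhost:8080"
    if wait_until_up(url, attempts=3, delay=1.0):
        print(f"Open WebUI is reachable; open {url} in your browser.")
    else:
        print(f"No response from {url} yet; check the terminal running open-webui serve.")
```

The sketch treats any HTTP response as "up," including an error status, because any response proves that something is listening on the port.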