In this machine learning and large language model (LLM) tutorial, we explain how to install and run Google’s Gemma 3 model on a local computer. The steps apply to the Gemma 3 1B, 4B, 12B, and 27B models.

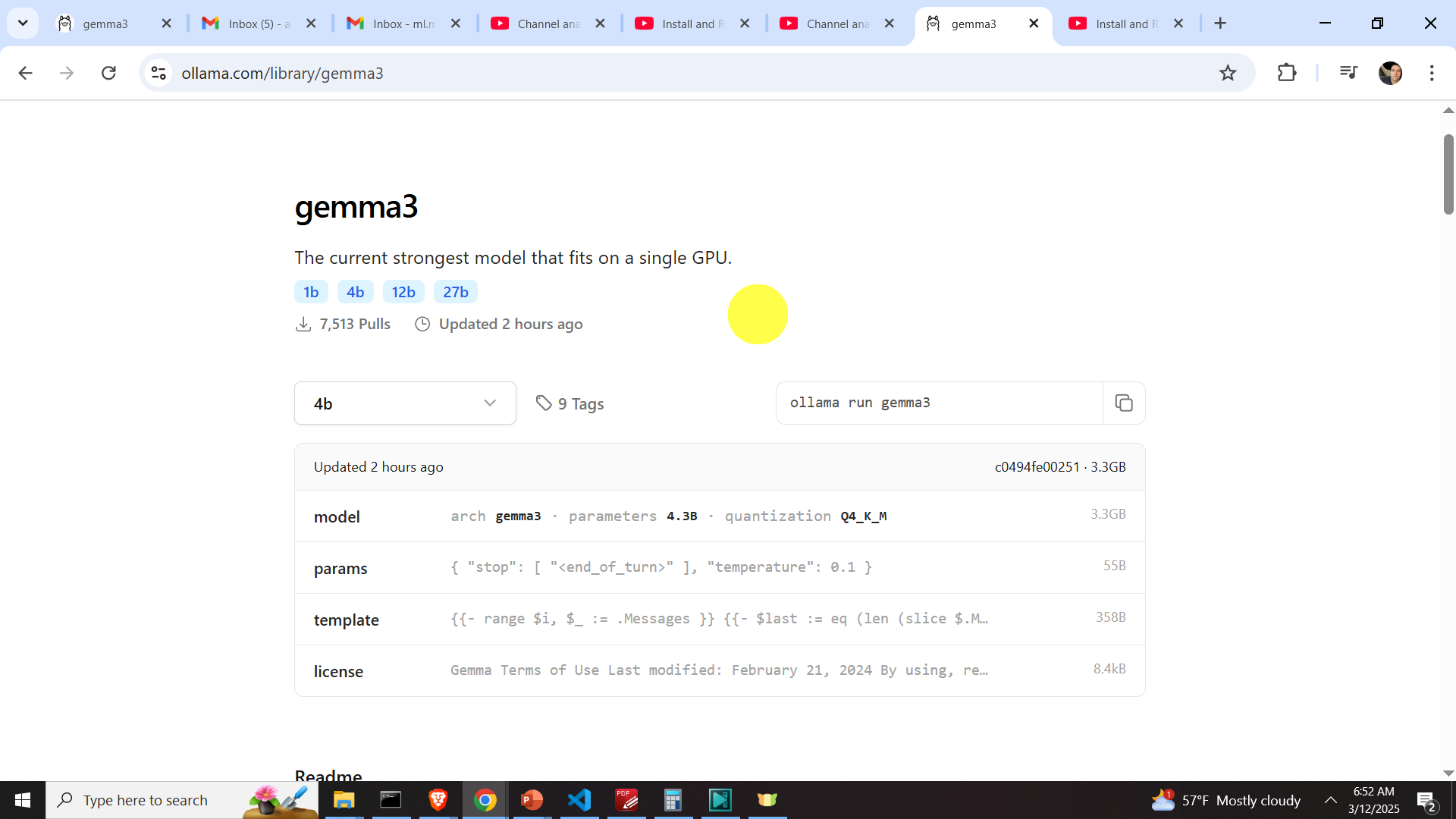

The marketing tagline for this model is “The current strongest model that fits on a single GPU.” The AI community will have to investigate whether this is really true.

We can confirm that the Gemma 27B model runs smoothly on an NVIDIA 3090 GPU. On the other hand, it is advertised that this model’s performance is slightly weaker than that of DeepSeek R1 671B. However, the advantage of Gemma 27B is that it has “only” 27B parameters, compared to the 671B parameters of DeepSeek R1.
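A rough back-of-the-envelope calculation helps explain why the parameter count matters for fitting a model on a single GPU. The sketch below only estimates the memory needed to store the weights. The bits-per-parameter values are illustrative assumptions, not a statement about how any particular Gemma 3 build is quantized, and the function name `weight_memory_gb` is made up for this example. Activations, the context cache, and runtime overhead are ignored, so real usage will be higher.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: every parameter is stored with the same number of bits.
# Activations, context cache, and runtime overhead are NOT included.

GPU_MEMORY_GB = 24  # memory of an NVIDIA RTX 3090


def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Return the approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


models = {"Gemma 3 27B": 27e9, "DeepSeek R1 671B": 671e9}

for name, params in models.items():
    for bits in (16, 8, 4):  # illustrative precisions, not measured values
        size = weight_memory_gb(params, bits)
        verdict = "fits" if size < GPU_MEMORY_GB else "does not fit"
        print(f"{name} at {bits}-bit: ~{size:.1f} GB ({verdict} in {GPU_MEMORY_GB} GB)")
```

Under these assumptions, only a heavily compressed 27B model stays below the 24 GB limit, which is consistent with the observation that it runs on a single 3090.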

The YouTube tutorial with the installation instructions is given below.

Install Google Gemma 3 on a local computer

The first step is to install Ollama. To do that, go to the Ollama website and click on download.

After the file is downloaded, open the installation file and install Ollama. After Ollama is installed, go to the Ollama website, search for Gemma 3 in the search menu, and open the Ollama Gemma 3 repository.

Then, select the model you want to download. In this tutorial, we will download the Gemma 3 12B and 27B models. To download them, open a command prompt and type

ollama pull gemma3:12b

to download the Gemma 12B model, and type this

ollama pull gemma3:27b

to download the Gemma 27B model.
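Once the downloads finish, it can be useful to confirm from a script that both models are actually available. The sketch below is one possible way to do that in Python, assuming Ollama is running in the background on its default local address (`http://localhost:11434`) and using its `/api/tags` endpoint, which lists local models. The helper names (`installed_models`, `fetch_installed_models`, `missing_models`) are made up for this example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def installed_models(tags_response: dict) -> list:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def fetch_installed_models(base_url: str = OLLAMA_URL) -> list:
    """Ask a running Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return installed_models(json.load(resp))


def missing_models(wanted: list, available: list) -> list:
    """Return the wanted model names that are not in the available list."""
    return [name for name in wanted if name not in available]
```

With Ollama running, `missing_models(["gemma3:12b", "gemma3:27b"], fetch_installed_models())` should return an empty list when both downloads succeeded.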

To run the Gemma 12B model, type this

ollama run gemma3:12b

On the other hand, to run the Gemma 27B model, type this

ollama run gemma3:27b

Next, we explain how to set up a graphical user interface for running the Gemma 3.0 model by using Open WebUI.
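Before moving on to the graphical interface, note that the installed models can also be queried from a script instead of the interactive prompt. The following is a minimal sketch, assuming Ollama is running on its default local address and using its `/api/generate` endpoint with streaming turned off. The function names `build_generate_request` and `ask` are made up for this example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL):
    """Build (but do not send) a POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    # Large models can take a while to load, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["response"]
```

For example, `ask("gemma3:12b", "Explain what a GPU is in one sentence.")` would return the model's answer as a string, provided the model has been downloaded and Ollama is running.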

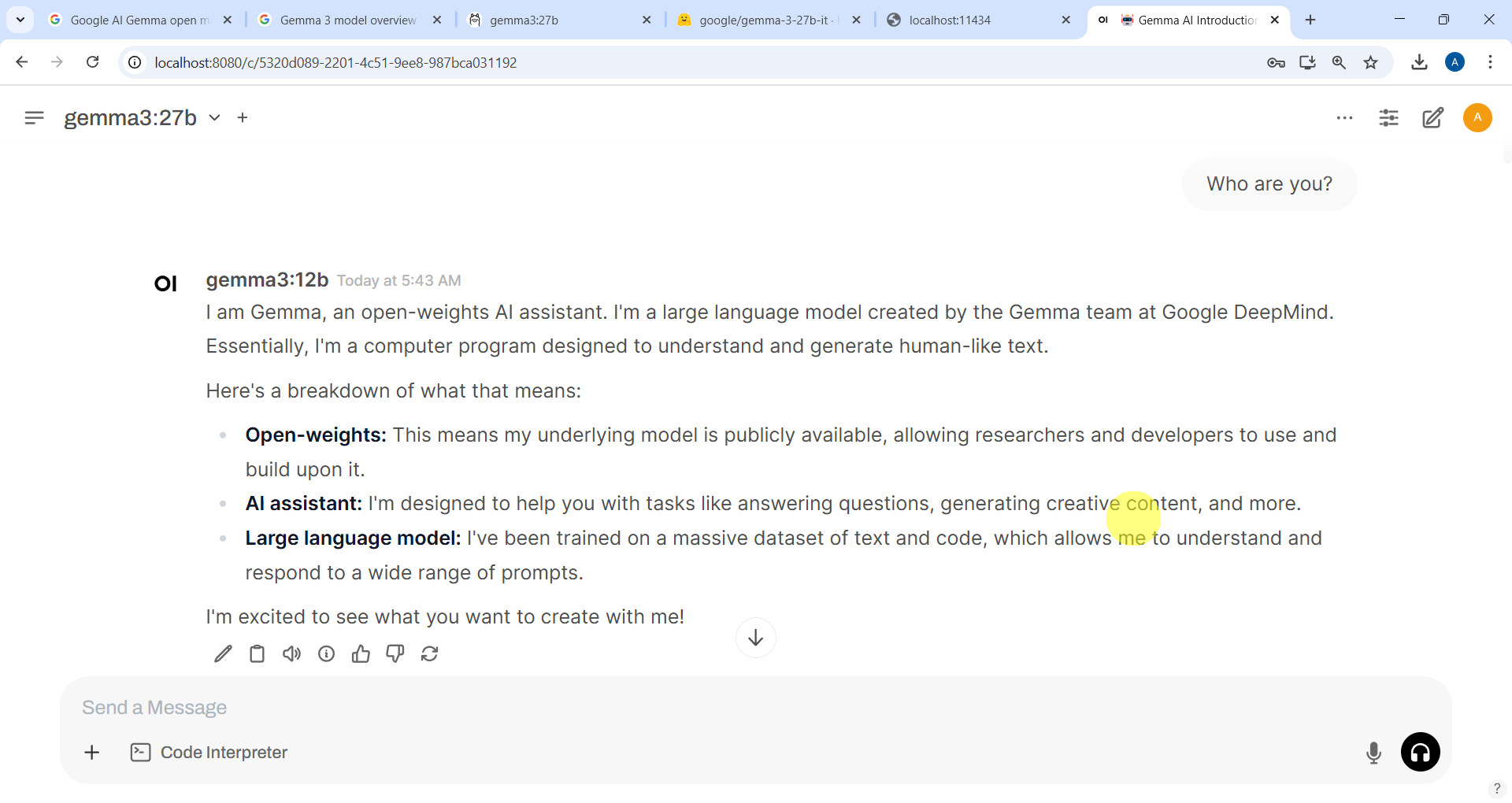

Run Gemma 3.0 Using Open WebUI

Open WebUI is a self-hosted interface for running local LLMs. The installation of Open WebUI is very simple. Open a Windows command prompt and type

cd\

mkdir testModel

cd testModel

Then, verify that you have Python installed on your system

python --version

Then, to install Open WebUI, first create a Python virtual environment, and then activate it

python -m venv env1

env1\Scripts\activate.bat
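At this point it can help to confirm that the activated interpreter is the one inside the virtual environment and that its version is suitable. The sketch below is a small Python check that could be saved to a file and run from the same command prompt. The target version (3.11) is an assumption based on the Open WebUI installation notes and should be checked against the project's current documentation. The helper names are made up for this example.

```python
import sys

ASSUMED_VERSION = (3, 11)  # assumption: check the Open WebUI docs for the current requirement


def in_virtualenv(prefix: str = sys.prefix, base_prefix: str = sys.base_prefix) -> bool:
    """Inside a venv, sys.prefix points to the environment, not the base install."""
    return prefix != base_prefix


def version_matches(version_info=sys.version_info, wanted=ASSUMED_VERSION) -> bool:
    """Compare only the major and minor version numbers."""
    return tuple(version_info[:2]) == wanted


print("Python version:", ".".join(str(part) for part in sys.version_info[:3]))
print("Running inside a virtual environment:", in_virtualenv())
print("Matches the assumed version:", version_matches())
```

If the second line prints `False`, the environment was probably not activated in the current command prompt.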

Then, install the Open WebUI Python library by typing

pip install open-webui

Installing all the Python packages necessary to run Open WebUI might take 2-5 minutes, so be patient. The next step is to run Open WebUI. To do that, type the following

open-webui serve

After the program is loaded, you will be able to access the graphical user interface in your web browser by typing this address

http://localhost:8080

The graphical user interface of Open WebUI will open, and you will be able to run the installed Gemma 3 models after you set up your administrator account.
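If the browser page does not load right away, the server may still be starting. The sketch below is one way to wait until something is listening on port 8080 before opening the browser. It only checks that the port accepts connections, not that Open WebUI itself is healthy, and the function names `port_is_open` and `wait_for_server` are made up for this example.

```python
import socket
import time


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_server(host: str = "localhost", port: int = 8080,
                    attempts: int = 30, delay: float = 2.0) -> bool:
    """Poll the port until it opens or the attempts run out."""
    for _ in range(attempts):
        if port_is_open(host, port):
            return True
        time.sleep(delay)
    return False
```

Calling `wait_for_server()` in a second command prompt returns `True` as soon as the port is reachable, or `False` after roughly a minute with the default settings.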

Note that the Open WebUI interface automatically recognizes that the Gemma models are installed through Ollama. This is another advantage of using Open WebUI. That is it! Enjoy!