- In this machine learning and AI tutorial, we explain how to install the DeepSeek-R1 model locally on Windows and how to write Python code that runs DeepSeek-R1.

- DeepSeek-R1 belongs to the class of reasoning models. Reasoning models perform better than classical large language models on complex reasoning problems, such as those that arise in mathematics, science, and coding.

- According to DeepSeek's GitHub page, the performance of DeepSeek-R1 is comparable to that of OpenAI-o1. In addition, DeepSeek-R1 is released under the MIT license, which means that you can also use the model in a commercial setting.

In this tutorial, we explain how to install and run distilled models of DeepSeek-R1, so it is worth first explaining what distilled models are.

- To run the full DeepSeek-R1 model locally, you need more than 400 GB of disk space and substantial CPU, GPU, and RAM resources. This can be prohibitive on consumer-level hardware.

- However, DeepSeek has shown that the size of the original DeepSeek-R1 model can be greatly reduced while preserving much of its performance (though, of course, not all of it). This is done through distillation: smaller models are trained on reasoning data generated by DeepSeek-R1. DeepSeek has released a number of these distilled models, ranging from 1.5B to 70B parameters. Installing them requires roughly 1 to 40 GB of disk space.
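As a rough back-of-the-envelope check of these disk figures, a model file's size is approximately the number of parameters multiplied by the number of bits stored per weight. The sketch below assumes 4 bits per weight, which is a common quantization level for locally run models. That value and the helper name `estimate_disk_gb` are our own assumptions, not DeepSeek or Ollama specifications. The full DeepSeek-R1 model has 671B parameters. Real files are typically somewhat larger than these estimates, because quantized formats store extra scaling data and some tensors are kept at higher precision.

```python
# Rough rule of thumb (an assumption, not an official DeepSeek or Ollama formula):
# on-disk size ~ number of parameters * bits stored per weight / 8.
def estimate_disk_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate model file size in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Smallest and largest distilled models, the 14B model used in this
    # tutorial, and the full 671B-parameter DeepSeek-R1.
    for billions in (1.5, 14, 70, 671):
        # 4 bits per weight is an assumed, typical quantization level.
        size = estimate_disk_gb(billions * 1e9, 4)
        print(f"{billions:>6}B parameters at 4 bits/weight -> about {size:.1f} GB")
```

Under this assumption, the 14B model comes out at about 7 GB and the full model at roughly 336 GB. Both estimates are in the same range as the figures quoted above, once format overhead is taken into account.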

We installed DeepSeek-R1 on a computer with the following specifications:

- NVIDIA GeForce RTX 3090 GPU
- 64 GB RAM
- Intel Core i9-10850K processor (10 cores, 20 logical processors)
- Windows 11 operating system

The installation procedure is explained in the YouTube tutorial given below.

Installation Instructions

The first step is to install Ollama. Go to the official Ollama website (ollama.com) and click Download to download the installer. Then run the installation file to install Ollama.

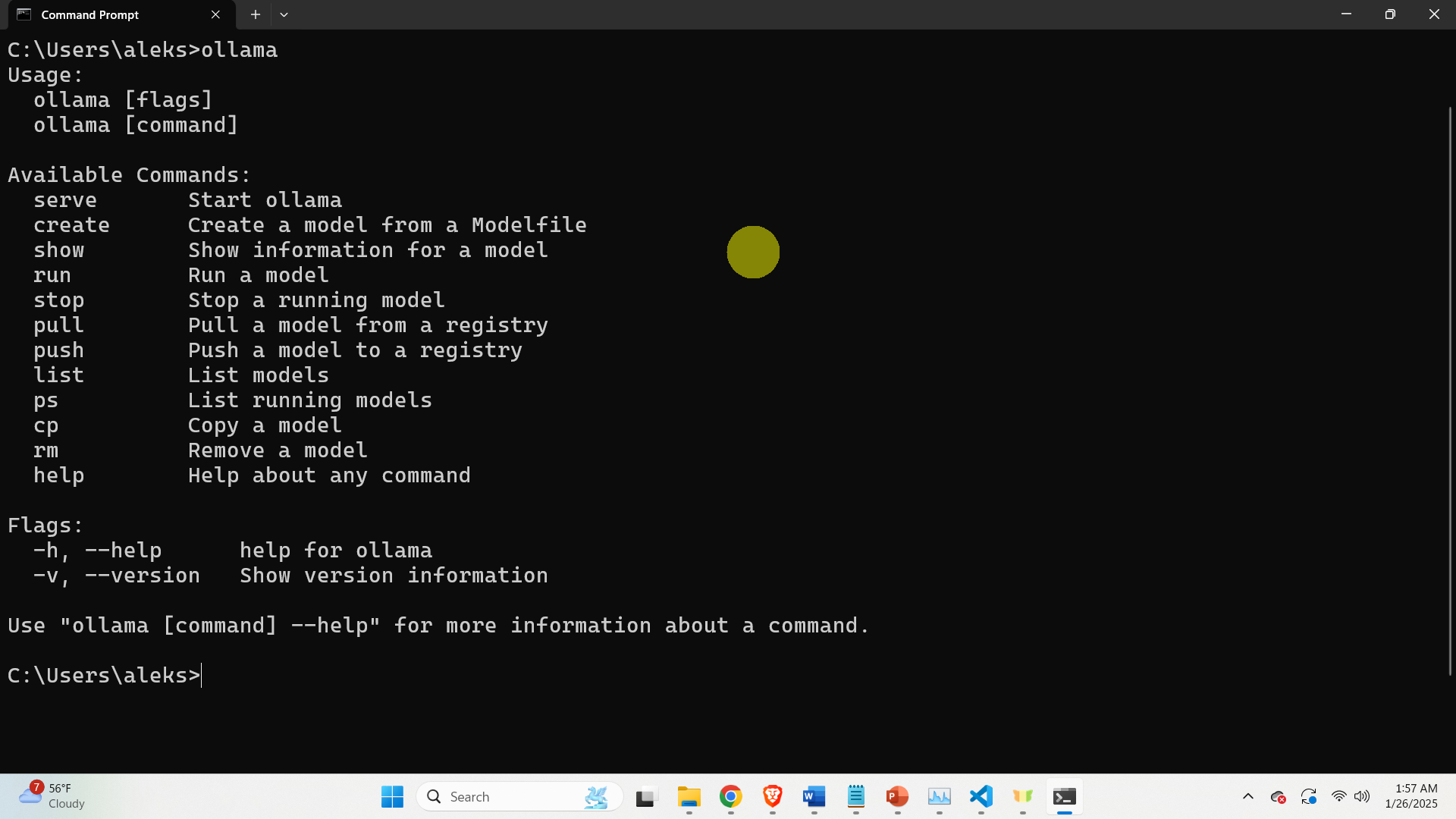

After Ollama is installed, verify the installation by opening a command prompt and executing the command

```
ollama
```

The output is shown in the figures below.

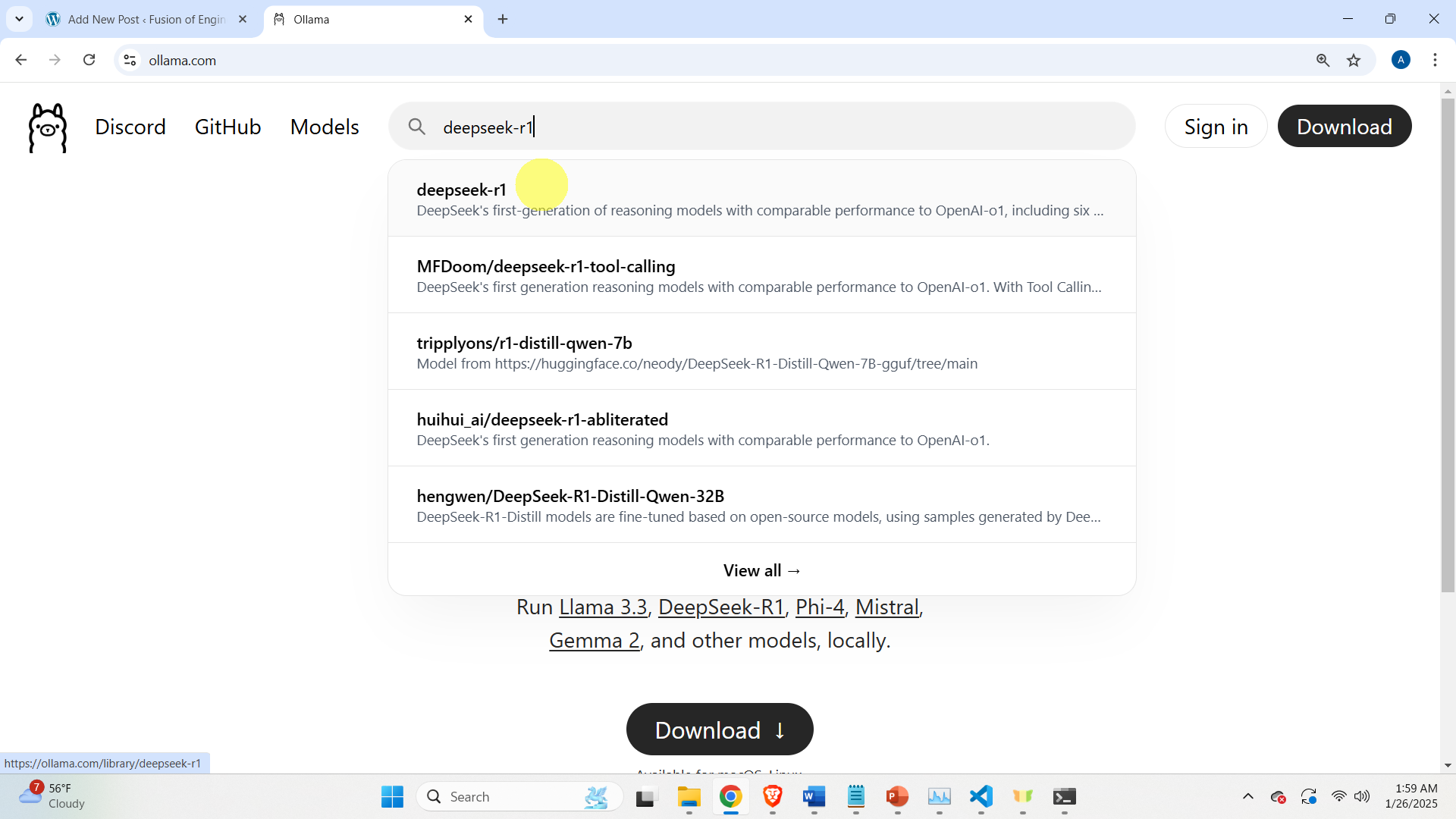

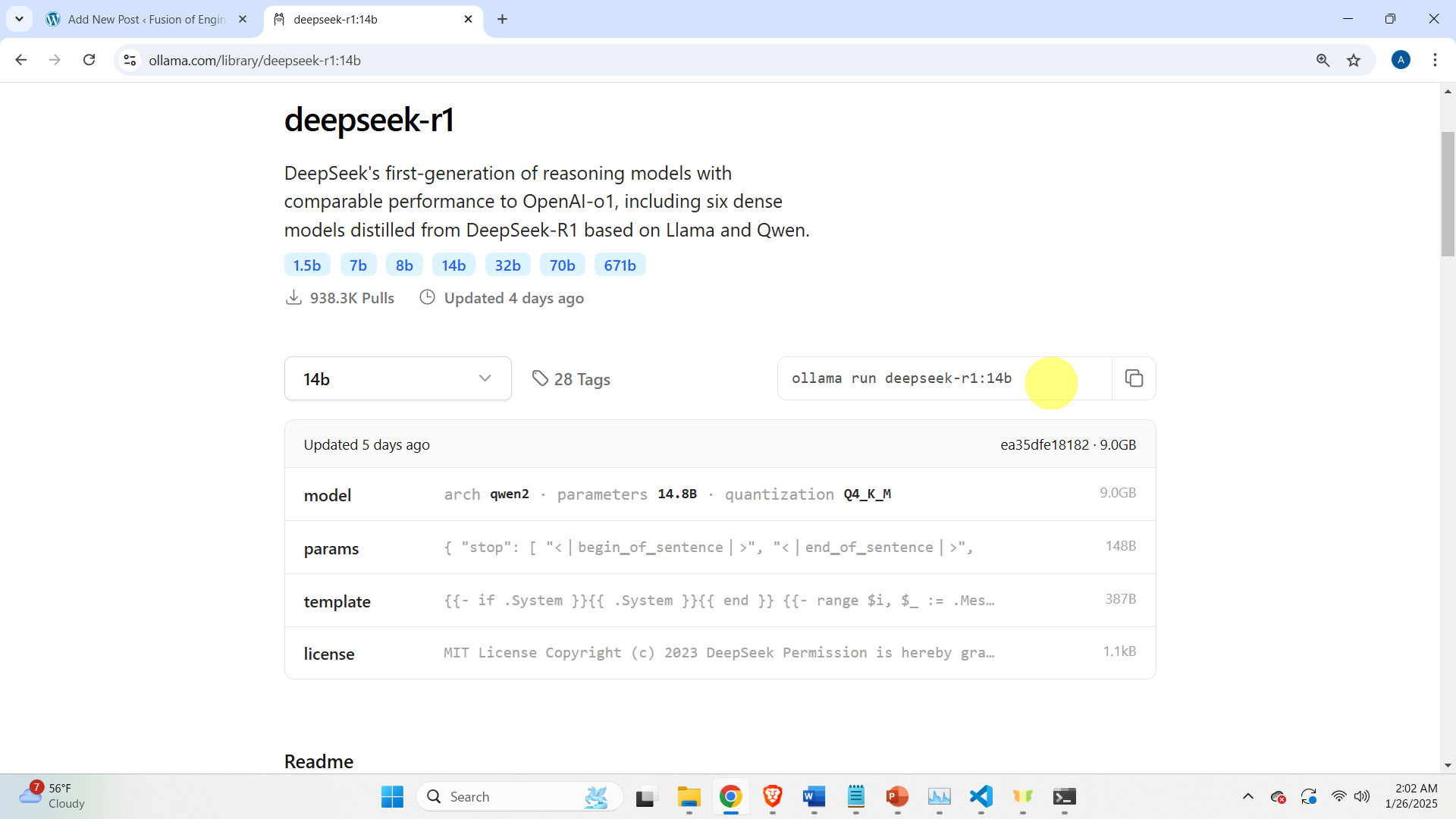

If you see output like this, Ollama is installed correctly. The second step is to download the model. To do that, go back to the Ollama website and search for deepseek-r1.

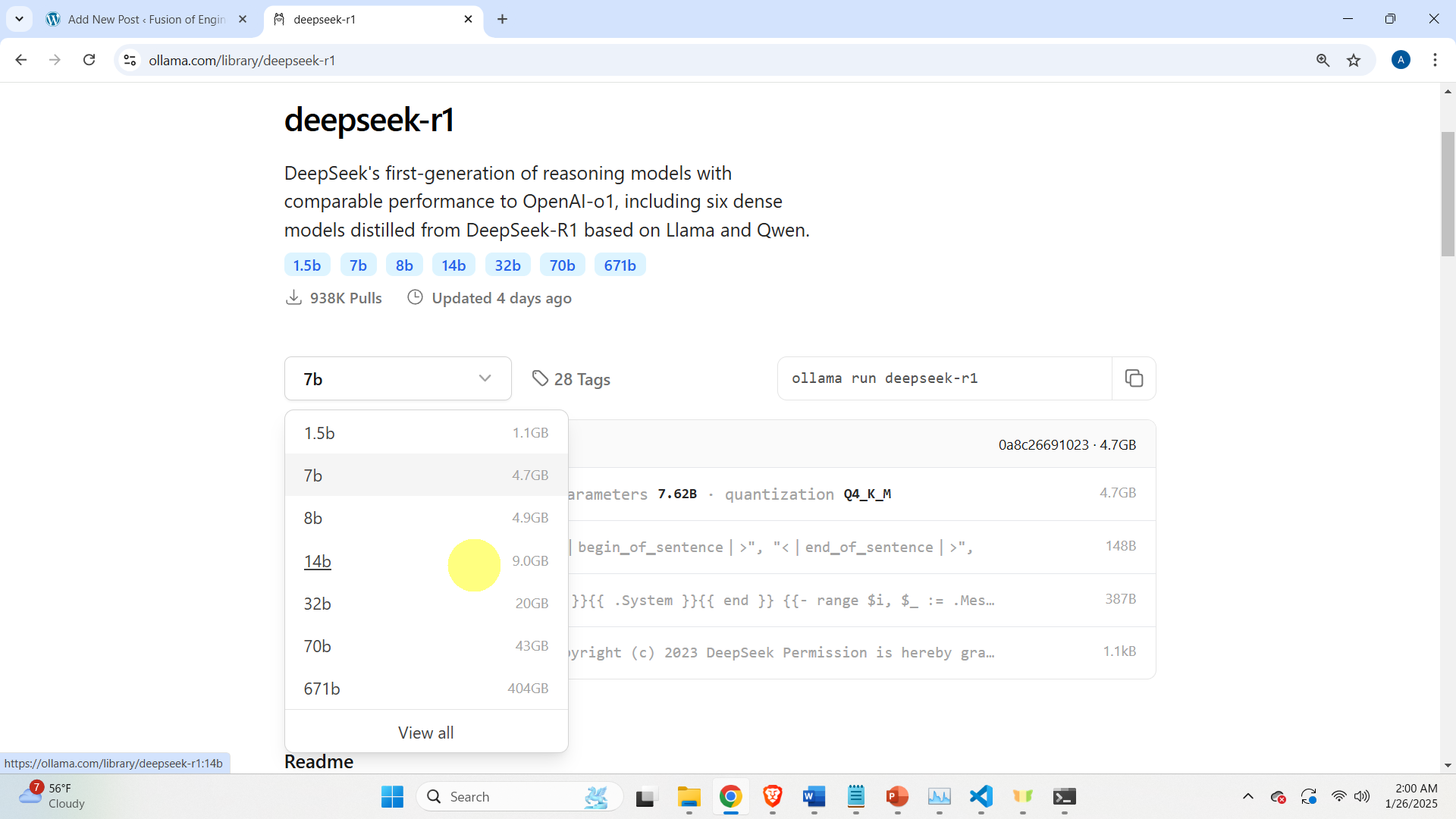

Click the model and select the model size that you want to download.

In our case, we will install a distilled Deepseek-R1 model with 14B parameters. After clicking on this model, a command for downloading and installing the model will be generated:

Change the generated command to:

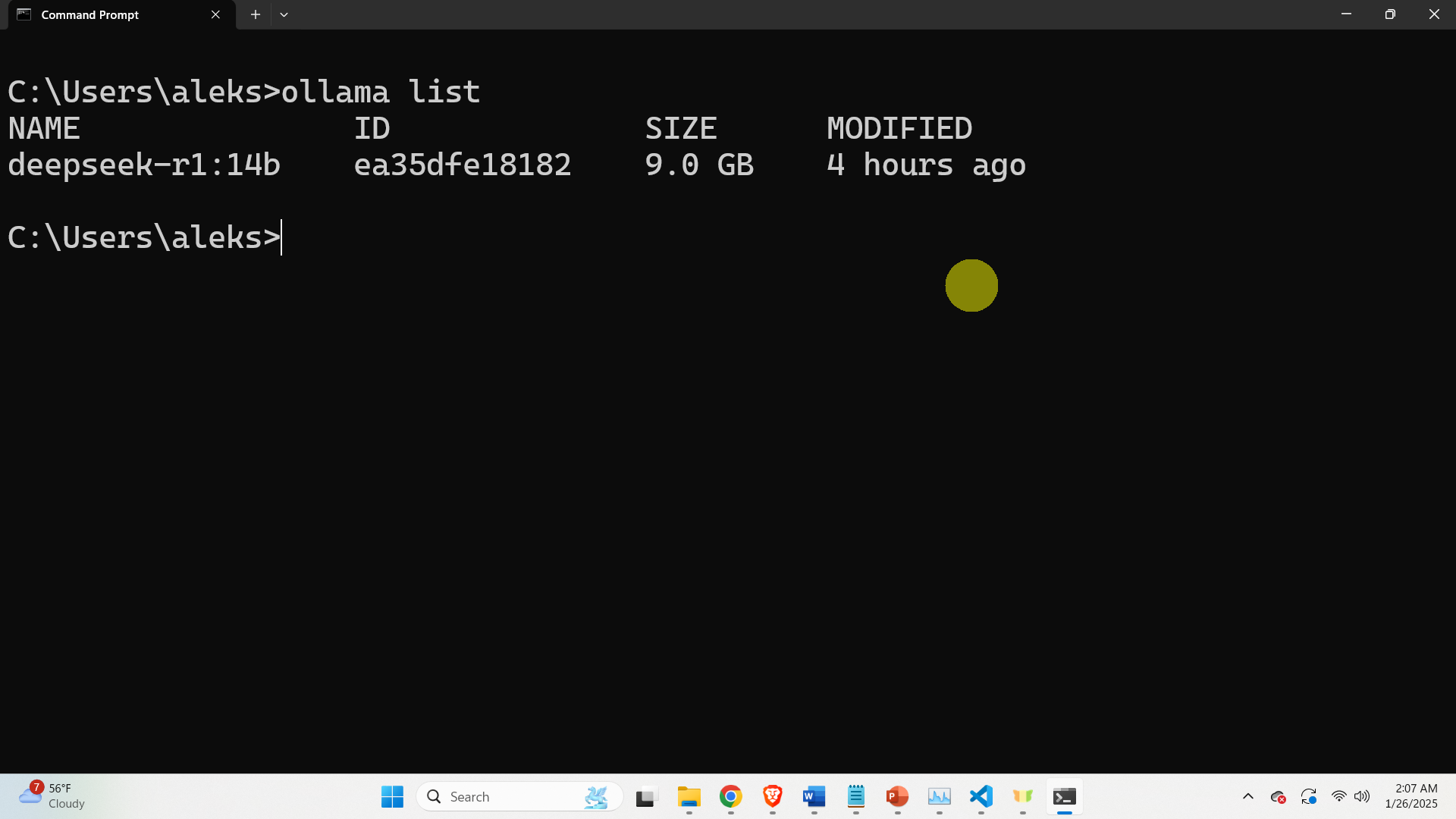

ollama pull deepseek-r1:14bExecute this command in the command prompt. This command will download the model. After the model is downloaded, type the following command in the command prompt

```
ollama list
```

The output should list the downloaded model. To test the model, run:

```
ollama run deepseek-r1:14b
```

This starts the model in an interactive session, where you can type a question to test it. To exit, press Ctrl+D.
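While Ollama is running, it also serves a local HTTP API, by default at `http://localhost:11434`. You can query this API directly from Python. The following is a minimal sketch that assumes this default address. It retrieves the same list that `ollama list` prints by calling the `/api/tags` endpoint, using only the Python standard library. The helper names `parse_model_names` and `list_local_models` are hypothetical and are not part of Ollama.

```python
import json
import urllib.request

# Default address of the local Ollama server (assumed unchanged after installation).
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from the JSON returned by the /api/tags endpoint."""
    return [entry["name"] for entry in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the running Ollama server which models are installed locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(json.load(resp))


if __name__ == "__main__":
    try:
        print(list_local_models())
    except OSError as err:
        # urllib.error.URLError (a subclass of OSError) is raised
        # when the Ollama server is not reachable.
        print("Could not reach the Ollama server:", err)
```

If the model was downloaded successfully, the printed list should contain `deepseek-r1:14b`.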

The next steps are to create a Python virtual environment, install the Ollama Python library, and write Python code that runs the model.

First, verify that Python is installed on your computer. Open a command prompt and type

```
python --version
```

This command shows the Python version installed on your system. Next, create a workspace folder and navigate to it. The first command below switches to the root of the current drive:

```
cd\
mkdir testDeep
cd testDeep
```

To create and activate the Python virtual environment, type:

```
python -m venv env1
env1\Scripts\activate.bat
```
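To confirm that the virtual environment is really active, you can run a short check with the environment's Python. This sketch relies on standard Python behavior: inside a venv, `sys.prefix` points to the environment folder, while `sys.base_prefix` still points to the base installation. The function name `in_virtualenv` is our own.

```python
import sys


def in_virtualenv() -> bool:
    # A venv changes sys.prefix to the environment folder, while
    # sys.base_prefix keeps pointing at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    print("Running inside a virtual environment:", in_virtualenv())
```

When you run it with `env1` activated, the second line should report `True`.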

Next, install the Ollama Python library:

```
pip install ollama
```

Then create a Python file and type the code given below.
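Before writing the main script, you can confirm that `pip` installed the library into the active environment. The following is a minimal sketch using the standard `importlib.metadata` module. The helper name `installed_version` is hypothetical. It looks up the pip distribution name, which for this library is `ollama`.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if it is missing."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version("ollama")
    print("ollama library:", found if found else "not installed in this environment")
```

If this reports that the library is not installed, the virtual environment was probably not active when `pip install ollama` was run.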

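```python
import ollama

# Name of the locally installed model (must match a name shown by "ollama list")
desiredModel = 'deepseek-r1:14b'

questionToAsk = 'How to solve a quadratic equation x^2+5*x+6=0'

# Send the question to the model as a single user message
response = ollama.chat(model=desiredModel, messages=[
    {
        'role': 'user',
        'content': questionToAsk,
    },
])

# Extract the text of the model's reply
OllamaResponse = response['message']['content']

print(OllamaResponse)

# Save the reply to a UTF-8 text file in the current folder
with open("OutputOllama.txt", "w", encoding="utf-8") as text_file:
    text_file.write(OllamaResponse)
```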

This code imports the Ollama Python library, defines the question to ask, sends it to the model, prints the response on the screen, and saves the response to a text file called “OutputOllama.txt”.
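DeepSeek-R1 typically writes out its chain of thought between `<think>` and `</think>` tags before giving the final answer. You may prefer to keep the two parts separate, for example to save only the answer. The following is a minimal sketch that assumes this tag format. If no tags are found, the whole text is treated as the answer. The function name `split_reasoning` is hypothetical.

```python
import re

# Assumed format: the reasoning is wrapped in <think>...</think>, followed by the answer.
# re.DOTALL lets "." match newlines, since the reasoning usually spans many lines.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from a DeepSeek-R1 response."""
    match = THINK_PATTERN.search(text)
    if match is None:
        # No reasoning block found: treat everything as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Remove the reasoning block and keep whatever text surrounds it.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    sample = "<think>Factor: (x+2)(x+3).</think>\nThe roots are x = -2 and x = -3."
    reasoning, answer = split_reasoning(sample)
    print("Reasoning:", reasoning)
    print("Answer:", answer)
```

For example, you could add `reasoning, answer = split_reasoning(OllamaResponse)` to the code above and write only `answer` to “OutputOllama.txt”.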