- In this machine learning and AI tutorial, we explain how to create a simple DeepSeek-R1 application with a graphical user interface (GUI), and how to run this application locally on a Windows computer. The application is developed in Python using DeepSeek-R1, Ollama, and Streamlit.

- DeepSeek-R1 belongs to the class of reasoning models. Reasoning models perform better than classical large language models on complex reasoning tasks, such as the problems that appear in mathematics, science, and coding.

- According to the information given on the GitHub page of DeepSeek, the performance of DeepSeek-R1 is comparable to the performance of OpenAI-o1. However, DeepSeek-R1 is released under the MIT license, which means that you can also use this model in a commercial setting. This motivates us to use DeepSeek-R1 over using ChatGPT and similar commercial products.


- In this tutorial, we present a proof-of-concept for building a desktop app that is based on DeepSeek-R1. The application is deliberately kept as simple as possible in order not to blur the main ideas with too many complex programming details. You can use this tutorial as the basis of the development of your own applications that integrate AI, graphics, sound, animation, etc.

- We are using Streamlit to develop the GUI and to embed the AI model.

Streamlit is an open-source Python framework for quickly creating interactive data applications. It is designed for data scientists and machine learning engineers, is quick to learn, and does not require front-end development experience.

- The solution presented in this tutorial is a “quick and dirty” solution. In future video tutorials, we will delve deeper into AI app development.

Before we start with the installation procedure, we need to explain what distilled models are. To run the full DeepSeek-R1 model locally, you need more than 400 GB of disk space and a significant amount of CPU, GPU, and RAM resources. This can be prohibitive on consumer-level hardware. However, DeepSeek has shown that it is possible to reduce the size of the original DeepSeek-R1 model while preserving much (though not all) of its performance. Consequently, DeepSeek has released a number of compressed (distilled) models whose sizes range from 1.5B to 70B parameters. To install these models, you will need from 1 to 40 GB of disk space. In this tutorial, we explain how to install and run the distilled models of DeepSeek-R1.
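To get a feeling for where disk-space figures like these come from, here is a rough back-of-the-envelope sketch. It assumes that a model file is dominated by its weights and that each weight is stored with about 4.5 bits, which is our own approximation of a 4-bit quantized build and not a number published by DeepSeek or Ollama. The function name `approx_model_size_gb` and the list of parameter counts are chosen for illustration only.

```python
def approx_model_size_gb(params_billion: float, bits_per_weight: float = 4.5) -> float:
    """Rough on-disk size in GB: parameters * bits per weight / 8 bits per byte.

    bits_per_weight=4.5 is an ASSUMED average for a 4-bit quantized model;
    real files also contain metadata and may use other quantization schemes.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Parameter counts (in billions) used for illustration
for size in (1.5, 7, 8, 14, 32, 70):
    print(f"{size:>4}B parameters -> about {approx_model_size_gb(size):.1f} GB")
```

Under these assumptions, the estimates fall roughly in the 1 to 40 GB range mentioned above. The actual download sizes will differ somewhat.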

Our computer has the following specifications:

- NVIDIA 3090 GPU

- 64 GB RAM

- Intel Core i9-10850K processor (10 cores and 20 logical processors)

- We are running the model on Windows 11 (you can also use Windows 10)

Installation Instructions

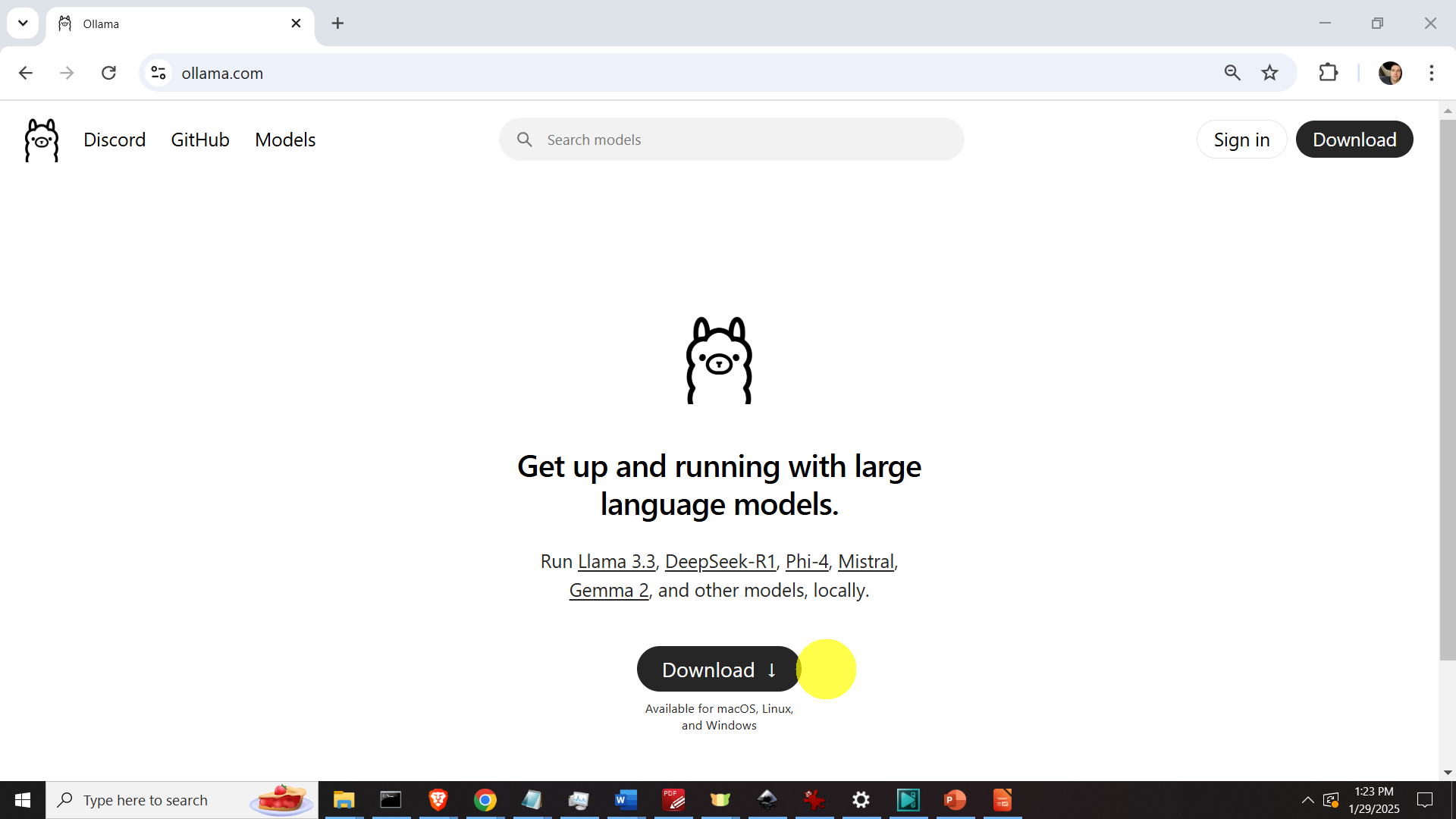

The first step is to download and install the Ollama framework for running large language models. For that purpose, go to the official Ollama website.

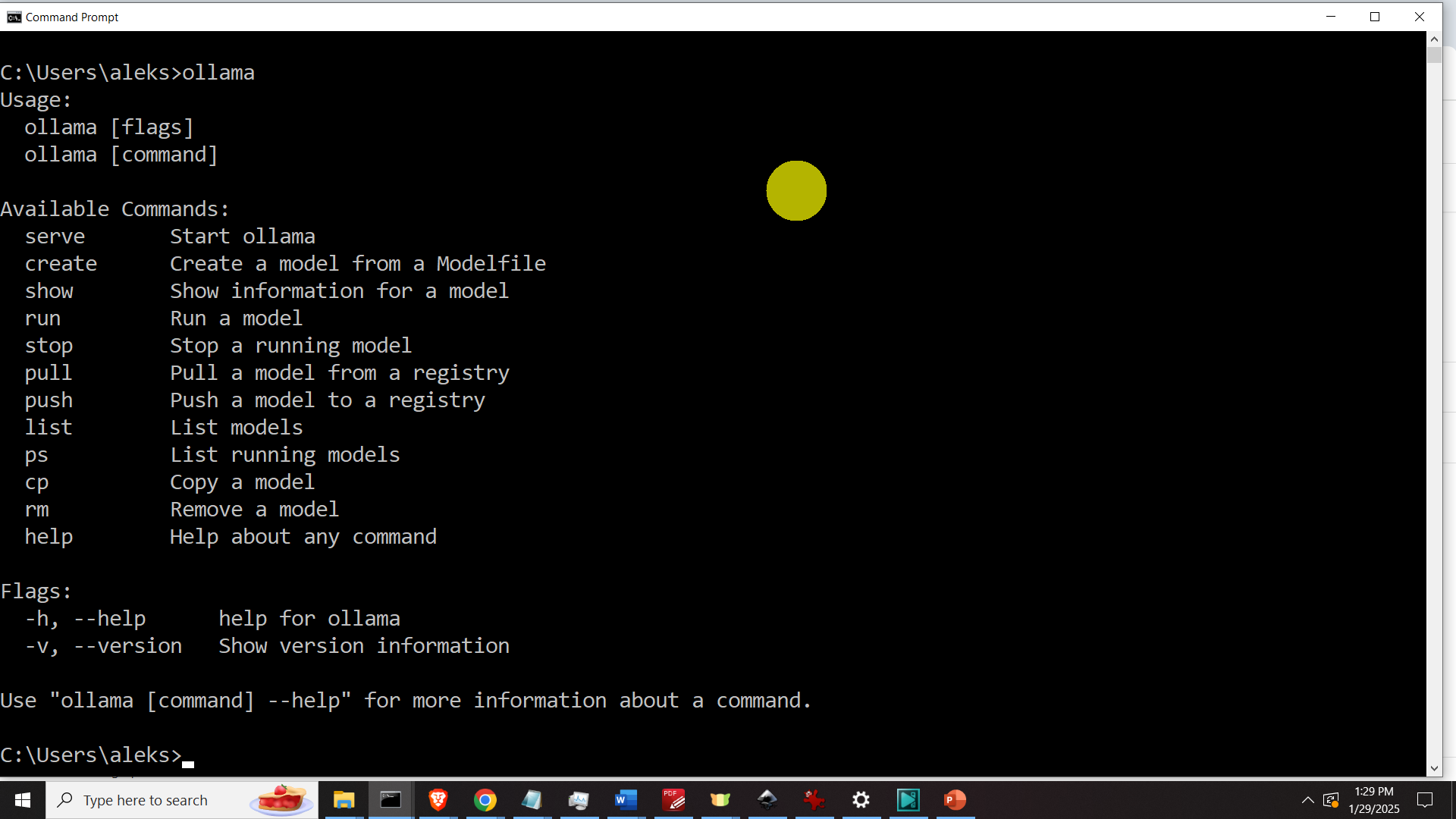

As shown in the figure above, click Download to download the Ollama installation file, and then run that file to install Ollama. To verify that Ollama is properly installed, open a Command Prompt and type

ollama

If Ollama is properly installed, you should get an output similar to the output shown in the figure above.
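The same check can also be done from Python, which is handy if you want your own application to fail early with a clear message. The sketch below uses only the standard library. The helper names `ollama_on_path` and `ollama_version` are our own, and we assume that the Ollama command-line tool accepts the `--version` flag.

```python
import shutil
import subprocess
from typing import Optional


def ollama_on_path() -> bool:
    """Return True if an 'ollama' executable can be found on the PATH."""
    return shutil.which("ollama") is not None


def ollama_version() -> Optional[str]:
    """Return the text printed by 'ollama --version', or None if Ollama is not found."""
    exe = shutil.which("ollama")
    if exe is None:
        return None
    result = subprocess.run(
        [exe, "--version"], capture_output=True, text=True, timeout=30
    )
    # Some tools print version information to stderr, so check both streams
    return result.stdout.strip() or result.stderr.strip() or None


print("Ollama found on PATH:", ollama_on_path())
print("Reported version:", ollama_version())
```

If the first line prints `False`, close and reopen the Command Prompt (so that the updated PATH is loaded) or reinstall Ollama.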

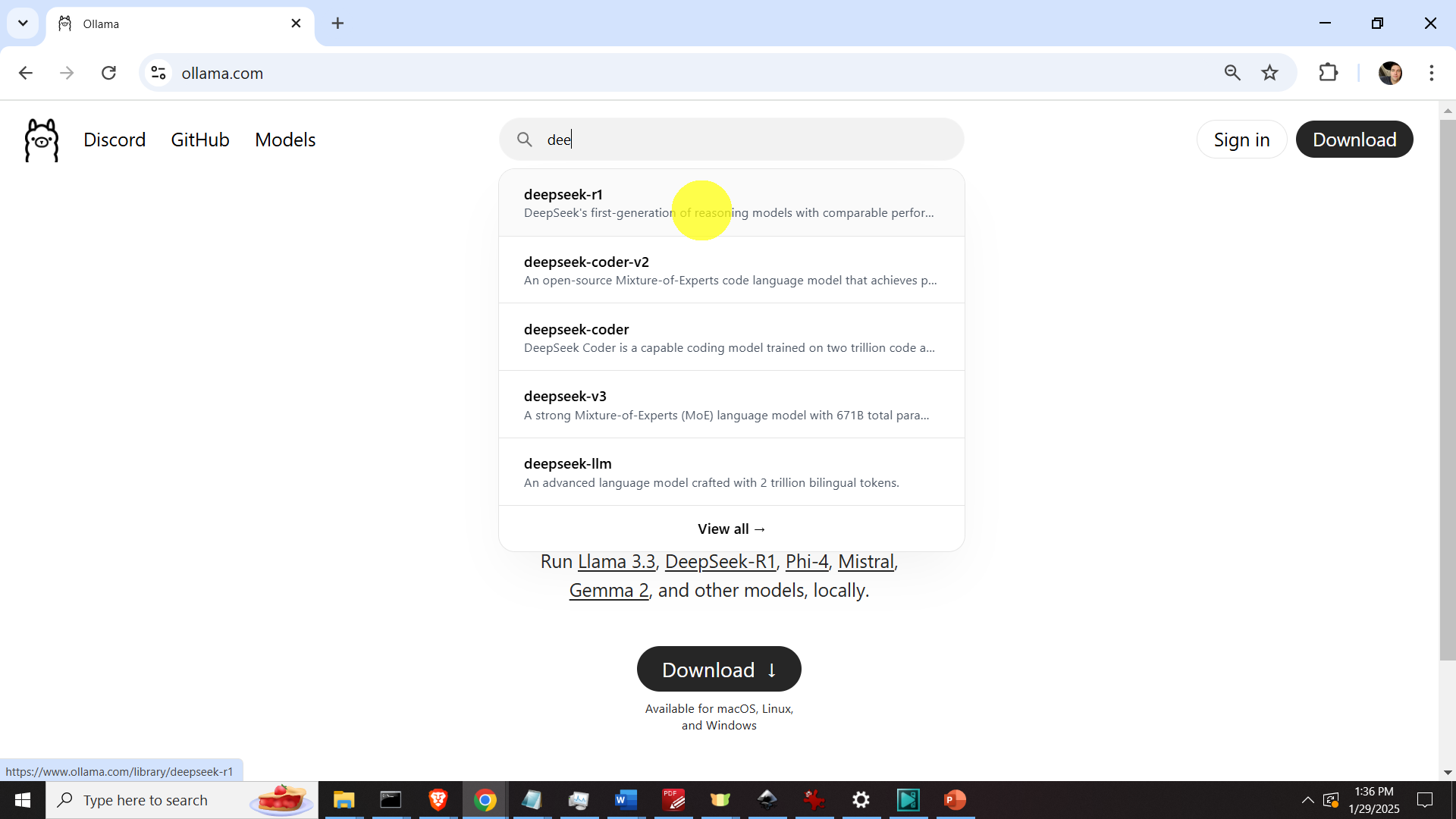

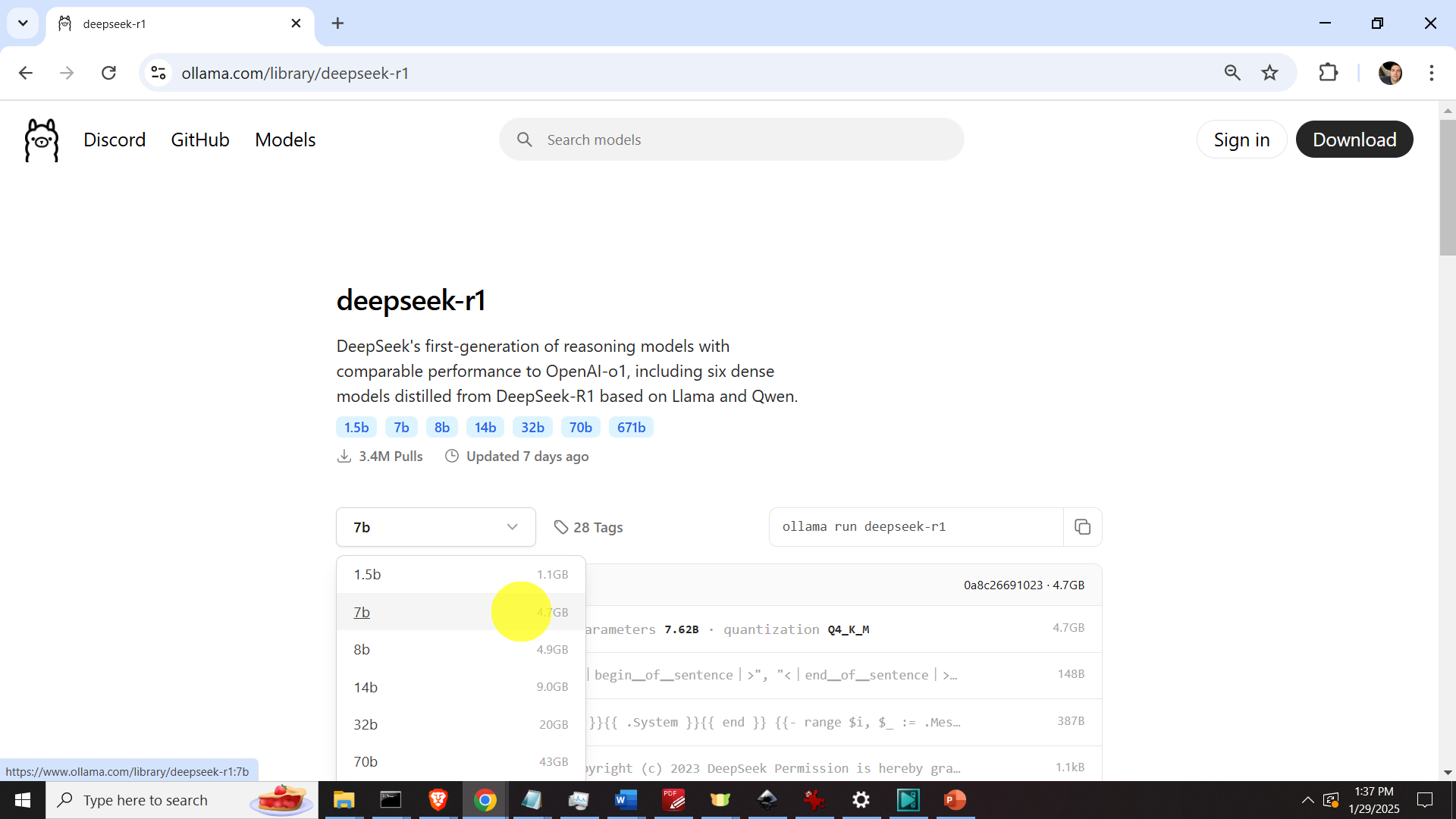

The next step is to download the model. In this tutorial, we will download and use a distilled DeepSeek model denoted by deepseek-r1:7b. You can also experiment with other models to find the one that offers the best balance between execution speed and model performance. Generally speaking, smaller models run faster, but their performance is worse than that of the larger models. To download the model, go to the Ollama website, search for deepseek-r1 in the search menu, and then open the drop-down menu and find the model (this is shown in the two figures above).
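Once you have downloaded a few models, one simple way to compare their speed is to compute the number of generated tokens per second. The sketch below assumes that the response returned by Ollama contains the fields `eval_count` (number of generated tokens) and `eval_duration` (generation time in nanoseconds), as described in the Ollama API documentation to the best of our knowledge. The function name `tokens_per_second` and the example numbers are hypothetical and are not measurements.

```python
def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Convert a token count and a duration in nanoseconds into tokens per second."""
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration_ns must be positive")
    return eval_count / (eval_duration_ns / 1e9)


# Hypothetical values for illustration only (NOT measured on our machine)
example_response = {"eval_count": 256, "eval_duration": 4_000_000_000}

speed = tokens_per_second(
    example_response["eval_count"], example_response["eval_duration"]
)
print(f"Generation speed: {speed:.1f} tokens/s")
```

Running the same prompt through several distilled models and comparing this number, together with the quality of the answers, gives a rough basis for choosing a model for your hardware.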

After clicking on the 7B model, the following installation command will be generated:

ollama run deepseek-r1:7b

However, modify this command like this

ollama pull deepseek-r1:7b

and execute it in the command prompt. The reason for the modification is that we only want to download the model by using ollama pull, and not to run it afterwards. Once the model is downloaded, you can test it by typing in the command prompt:

ollama run deepseek-r1:7b

This will run the model. The next step is to create a workspace folder and the Python virtual environment. To create the workspace folder, open a command prompt and type

cd\

mkdir testApp

cd testApp

Next, we need to create and activate the Python virtual environment

python -m venv env1

env1\Scripts\activate.bat

Next, we need to install the necessary libraries: Ollama and Streamlit. To do that, once the Python virtual environment is active, type this

pip install ollama

pip install streamlit

Then, open your favorite Python editor and type the following code. Save the code in the file test.py

# -*- coding: utf-8 -*-
"""
Demonstration on how to create a Large Language Model (LLM) Web Application Using
Streamlit, Ollama, DeepSeek-R1 and Python
Author: Aleksandar Haber
"""

import streamlit as st
import ollama

# set the model
desiredModel='deepseek-r1:7b'

st.title("LLM Application Based on DeepSeek-R1 (running locally)")

def generate_response(questionToAsk):
    # send the question to the local model through the Ollama Python API
    response = ollama.chat(model=desiredModel, messages=[
        {
            'role': 'user',
            'content': questionToAsk,
        },
    ])
    # display the model's answer in the GUI
    st.info(response['message']['content'])

# form with a text area for the question and a Submit button
with st.form("my_form"):
    text = st.text_area(
        "Enter text:",
        "Over here, ask a question and press the Submit button. ",
    )
    submitted = st.form_submit_button("Submit")
    # call the model only after the Submit button is pressed
    if submitted:
        generate_response(text)

The code is self-explanatory. We use the Ollama Python API to call the model, and the Streamlit library to create the graphical user interface. To run this code, type in the command prompt (inside of the activated Python virtual environment)

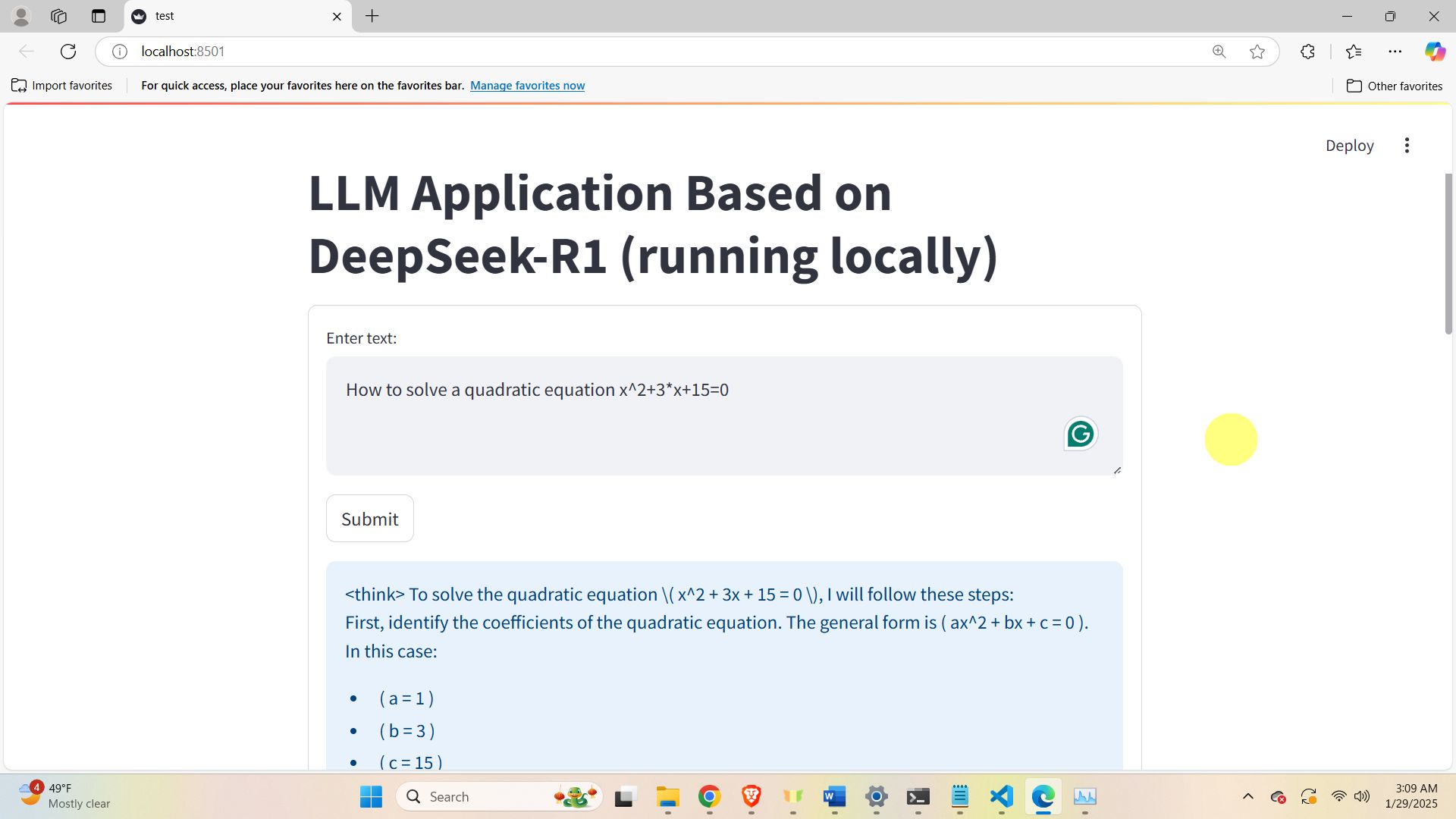

streamlit run c:/testApp/test.py

A web browser will open, and the application will start as shown in the figure above.
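When you test the application, you will probably notice that the answer of DeepSeek-R1 starts with the model's reasoning, wrapped in `<think>` and `</think>` tags. If you want to show only the final answer (or show the reasoning separately), you can split the raw text before displaying it. The sketch below is one possible approach. It assumes that the reasoning appears in a single `<think>...</think>` block, and the function name `split_reasoning` is our own and is not a part of Ollama or Streamlit.

```python
import re
from typing import Tuple

# Matches the first <think>...</think> block, including line breaks inside it
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    ASSUMPTION: the reasoning is enclosed in one <think>...</think> block.
    If no such block is found, the reasoning is returned as an empty string.
    """
    match = THINK_PATTERN.search(raw)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    # Keep everything outside the <think> block as the answer
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer


# Made-up model output used only to demonstrate the function
sample = "<think>\n2 + 2 equals 4.\n</think>\n\nThe answer is 4."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

In the application, `generate_response` could call this function on `response['message']['content']`, pass the answer to `st.info`, and place the reasoning inside `st.expander` so that it is hidden by default.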