In this tutorial, we explain how to install and run Alibaba's QwQ-32B Large Language Model (LLM) locally. This model is interesting because its performance is comparable to that of the DeepSeek-R1 671B model, even though DeepSeek-R1 has more than 20 times as many parameters as QwQ-32B (671 billion versus 32 billion). Furthermore, QwQ-32B can run locally on a desktop computer: we were able to run it on a Windows computer with 64 GB of RAM and an NVIDIA GeForce RTX 3090 GPU. The YouTube tutorial is given below.
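To make the size comparison and the hardware claim more concrete, here is a rough back-of-the-envelope calculation in Python. It takes the parameter counts (671B and 32B) from the text and assumes every weight is stored at the same bit width. It ignores the KV cache, activations, and runtime overhead, so actual memory use will be higher than these figures.

```python
# Rough estimates only: they ignore the KV cache, activations, and runtime
# overhead, and assume all weights are stored at the same bit width.

DEEPSEEK_PARAMS_B = 671  # DeepSeek-R1 total parameters, in billions
QWQ_PARAMS_B = 32        # QwQ-32B parameters, in billions


def parameter_ratio(larger_b: float, smaller_b: float) -> float:
    """How many times more parameters the larger model has."""
    return larger_b / smaller_b


def weight_memory_gb(params_b: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GB (1 GB = 10**9 bytes)."""
    # (params_b * 1e9 weights) * bits / 8 bits-per-byte / 1e9 bytes-per-GB
    return params_b * bits_per_weight / 8


if __name__ == "__main__":
    ratio = parameter_ratio(DEEPSEEK_PARAMS_B, QWQ_PARAMS_B)
    print(f"DeepSeek-R1 / QwQ-32B parameter ratio: {ratio:.1f}x")
    for bits in (16, 8, 4):
        size = weight_memory_gb(QWQ_PARAMS_B, bits)
        print(f"QwQ-32B weights at {bits}-bit: ~{size:.0f} GB")
```

At 4 bits per weight, the QwQ-32B weights come to roughly 16 GB, which fits in the 24 GB of VRAM on an RTX 3090, while 16-bit weights (about 64 GB) would not. We assume here that Ollama's default `qwq` download is a 4-bit quantization; check the model's page on the Ollama website for the exact variant.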

Install QwQ-32B LLM Locally

We will install QwQ-32B by first installing Ollama and then downloading the QwQ-32B model through Ollama. Finally, we will use Open WebUI to provide a graphical user interface (GUI) for running the model locally.

First, go to the Ollama website (https://ollama.com).

Then, click Download to download the installer. In our case, we are using Windows. After the download finishes, run the installation file to install Ollama. Once Ollama is installed, open a terminal and type

ollama pull qwq

This will download the model. Depending on the speed of your internet connection, the download might take 5-10 minutes. Once the model is downloaded, open a new terminal and type

cd\

mkdir testWebUI

cd testWebUI

python -m venv env1

env1\Scripts\activate.bat

These commands create a workspace folder (testWebUI) and a Python virtual environment (env1) for installing Open WebUI. The last command activates the virtual environment.
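To confirm that the virtual environment is really active before installing Open WebUI, you can run a small Python check like the one below. This is a minimal sketch: the file name `check_env.py` is hypothetical, and the check relies on the standard `venv` behavior where `sys.prefix` points at the environment folder while `sys.base_prefix` still points at the base Python installation. As far as we know, the Open WebUI documentation recommends Python 3.11 for `pip install open-webui`; treat the exact supported version range as an assumption and check the current docs.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when running inside a venv-created virtual environment."""
    # Inside a venv, sys.prefix is the environment folder (e.g. ...\env1),
    # while sys.base_prefix is the underlying Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Python executable:", sys.executable)
    print("Python version:", sys.version.split()[0])
    print("Inside a virtual environment:", in_virtualenv())
```

After activating env1, running `python check_env.py` should report `True`, and the executable path should lie inside the env1 folder.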

Then, to install and run Open WebUI, type this

pip install open-webui

open-webui serve

After Open WebUI has started, enter the following address in your web browser:

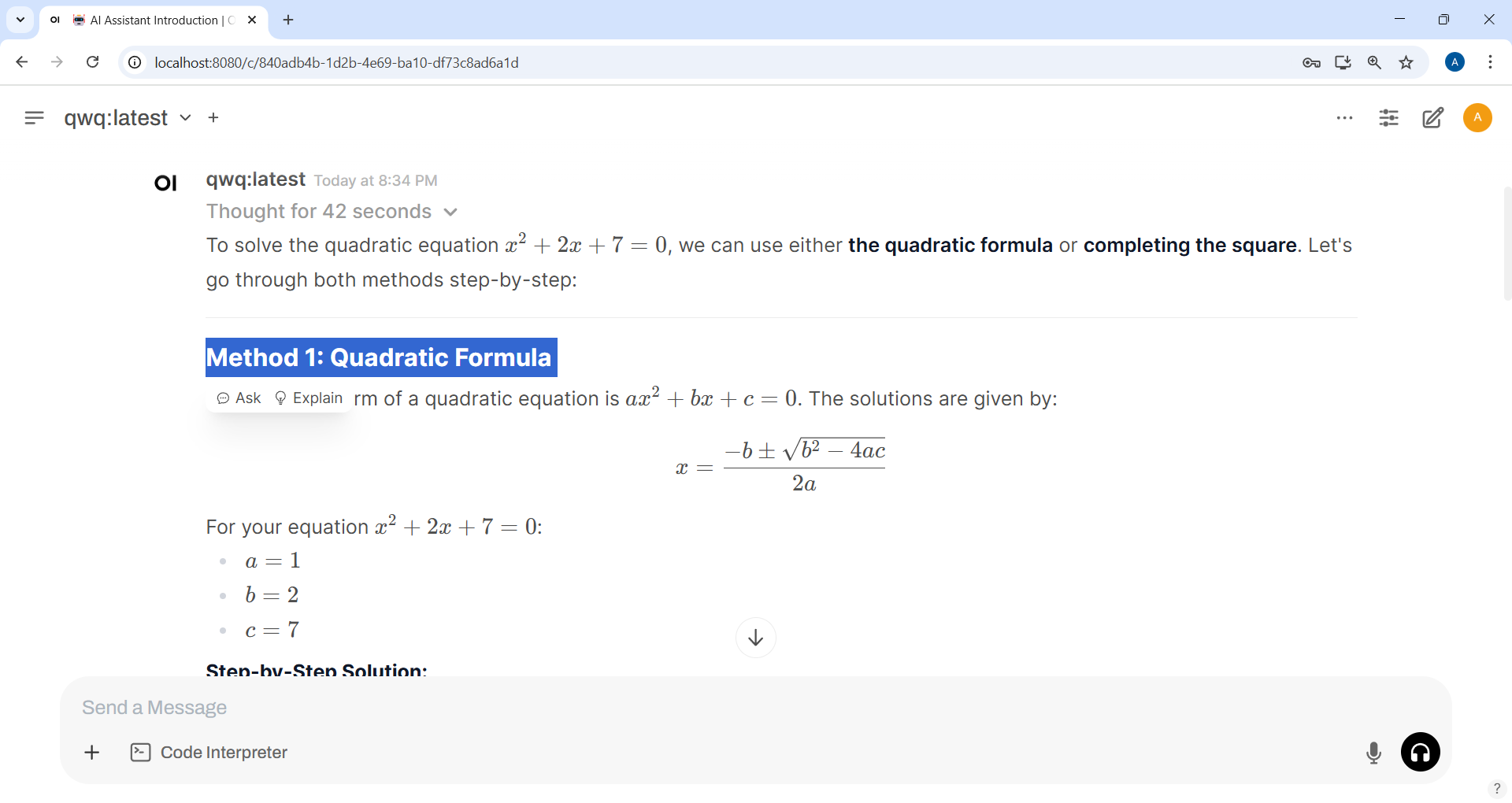

http://localhost:8080

After you register and create an administrator account, you will be able to use the model through the graphical user interface shown in the figure below.
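If the page does not load or the model does not answer, a quick script can help narrow down which component is failing. The sketch below uses only the Python standard library and assumes Ollama's REST API is listening on its default address, http://localhost:11434 (the API Open WebUI talks to), and Open WebUI on http://localhost:8080 as above. It lists the downloaded models via Ollama's `/api/tags` endpoint and sends one non-streaming prompt to `/api/generate`. The file name `check_setup.py` and the test prompt are hypothetical; `ollama run qwq` in a terminal is the simpler command-line alternative for chatting with the model directly.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"     # Ollama's default API address (assumed)
OPEN_WEBUI_URL = "http://localhost:8080"  # address used in this tutorial
MODEL = "qwq"


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, even though it returned an error status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, name resolution failure, etc.
        return False


def list_local_models() -> list[str]:
    """Names of the models Ollama has downloaded, e.g. 'qwq:latest'."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=5) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


def build_generate_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    print("Open WebUI reachable:", is_reachable(OPEN_WEBUI_URL))
    if not is_reachable(OLLAMA_URL):
        print("Ollama is not reachable; is it running?")
    else:
        models = list_local_models()
        print("Local models:", models)
        if any(name.split(":")[0] == MODEL for name in models):
            req = build_generate_request("In one sentence, what is 17 * 23?")
            # A 32B reasoning model can take a while to answer.
            with urllib.request.urlopen(req, timeout=600) as resp:
                print(json.load(resp)["response"])
        else:
            print(f"Model '{MODEL}' not found; run: ollama pull {MODEL}")
```

Keeping the reachability check separate from the generation call makes it easier to tell whether the problem is Open WebUI, the Ollama server, or a missing model download.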