- In this machine learning and AI tutorial, we explain how to install the DeepSeek-R1 model locally on an Ubuntu Linux computer and how to write a Python script that runs DeepSeek-R1.

- DeepSeek-R1 belongs to the class of reasoning models. Compared with classical large language models, reasoning models perform better on complex reasoning tasks, such as those that arise in mathematics, science, and coding.

- According to the information given on the DeepSeek GitHub page, the performance of DeepSeek-R1 is comparable to that of OpenAI-o1. However, DeepSeek-R1 is released under the MIT license, which means that you can also use this model in a commercial setting.


In this tutorial, we explain how to install a distilled version of DeepSeek-R1. Consequently, it is instructive to first explain what distilled models are.

Distilled Models:

- To run the full DeepSeek-R1 model locally, you need more than 400 GB of disk space and a significant amount of CPU, GPU, and RAM resources. This might be prohibitive on consumer-level hardware.

- However, DeepSeek has shown that it is possible to reduce the size of the original DeepSeek-R1 model while preserving much of its performance (though, of course, not all of it). Consequently, DeepSeek has released a number of compressed (distilled) models whose sizes range from 1.5B to 70B parameters. To install these models, you will need from 1 to 40 GB of disk space.

- In this tutorial, we will explain how to install and run distilled models of DeepSeek-R1.
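As a rough sanity check on the disk-space figures above, the size of a model file can be estimated from its parameter count and the number of bits stored per weight. The sketch below assumes about 4.5 bits per weight, an illustrative value chosen to approximate 4-bit quantization; the function name `estimate_size_gb` is hypothetical, and actual download sizes depend on the quantization scheme and will differ.

```python
def estimate_size_gb(params_billion: float, bits_per_weight: float = 4.5) -> float:
    """Rough model file size in GB (1 GB = 10**9 bytes).

    bits_per_weight is an assumption, not a value taken from DeepSeek or Ollama.
    """
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Smallest distilled model, the one used in this tutorial, and the largest
    for size in (1.5, 14, 70):
        print(f"{size}B parameters: roughly {estimate_size_gb(size):.1f} GB")
```

The estimate only covers the weights on disk. Running a model also needs RAM or GPU memory for the weights plus working buffers.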

Installation Instructions

The first step is to install Ollama, a framework for running large language models locally. Before that, we need to install curl and open the port that Ollama uses. To install curl, open a Linux terminal and execute these commands:

sudo apt update && sudo apt upgrade

sudo apt install curl

curl --version

Then, before installing Ollama, we need to allow inbound connections on port 11434, the default port that Ollama listens on. To do that, execute this command:

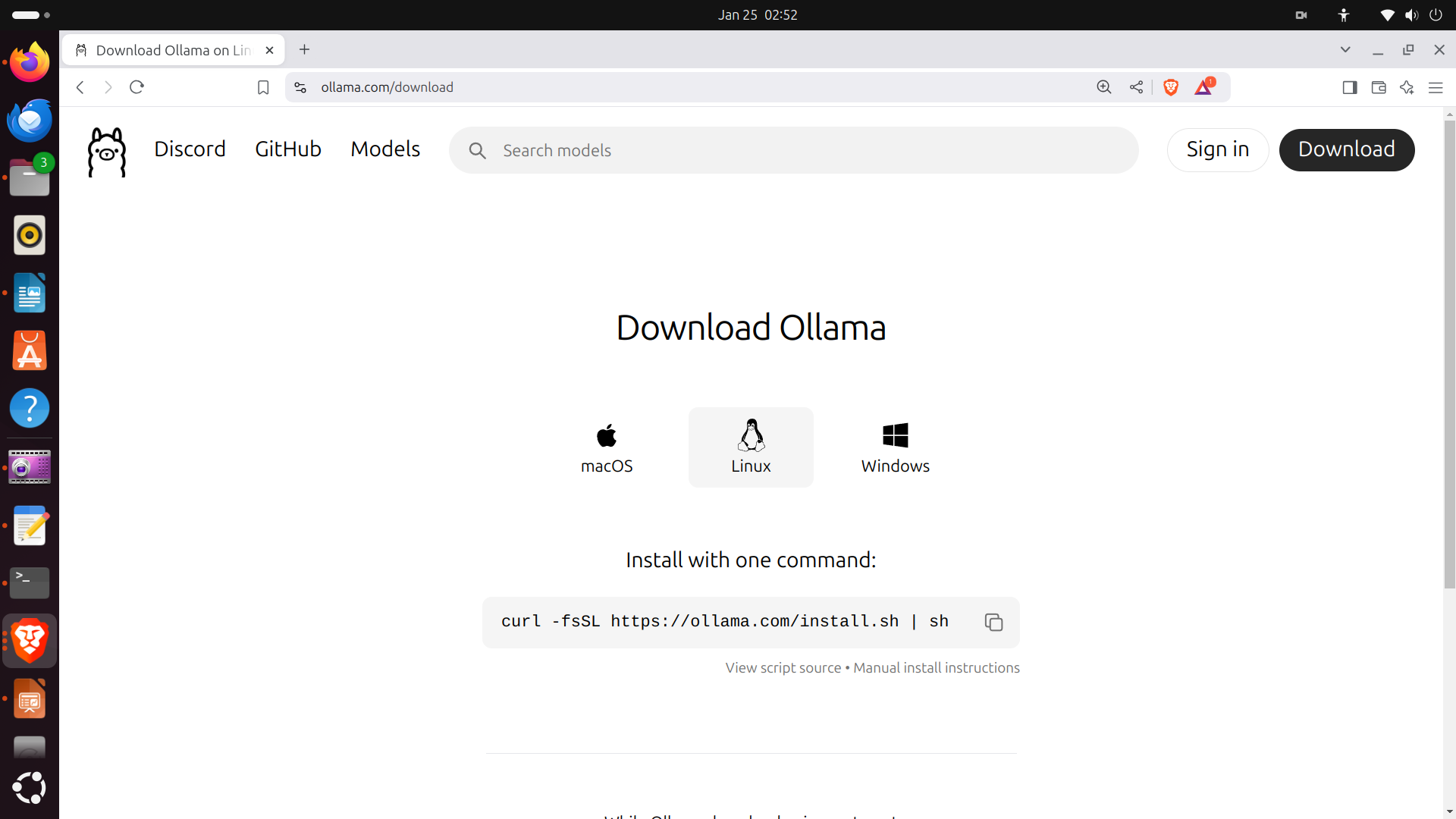

sudo ufw allow 11434/tcp

Next, we need to install Ollama. To do that, go to the Ollama website.

Then, click on Download, and the download screen will appear.

Copy the installation command shown there, and execute it in the terminal window:

curl -fsSL https://ollama.com/install.sh | sh

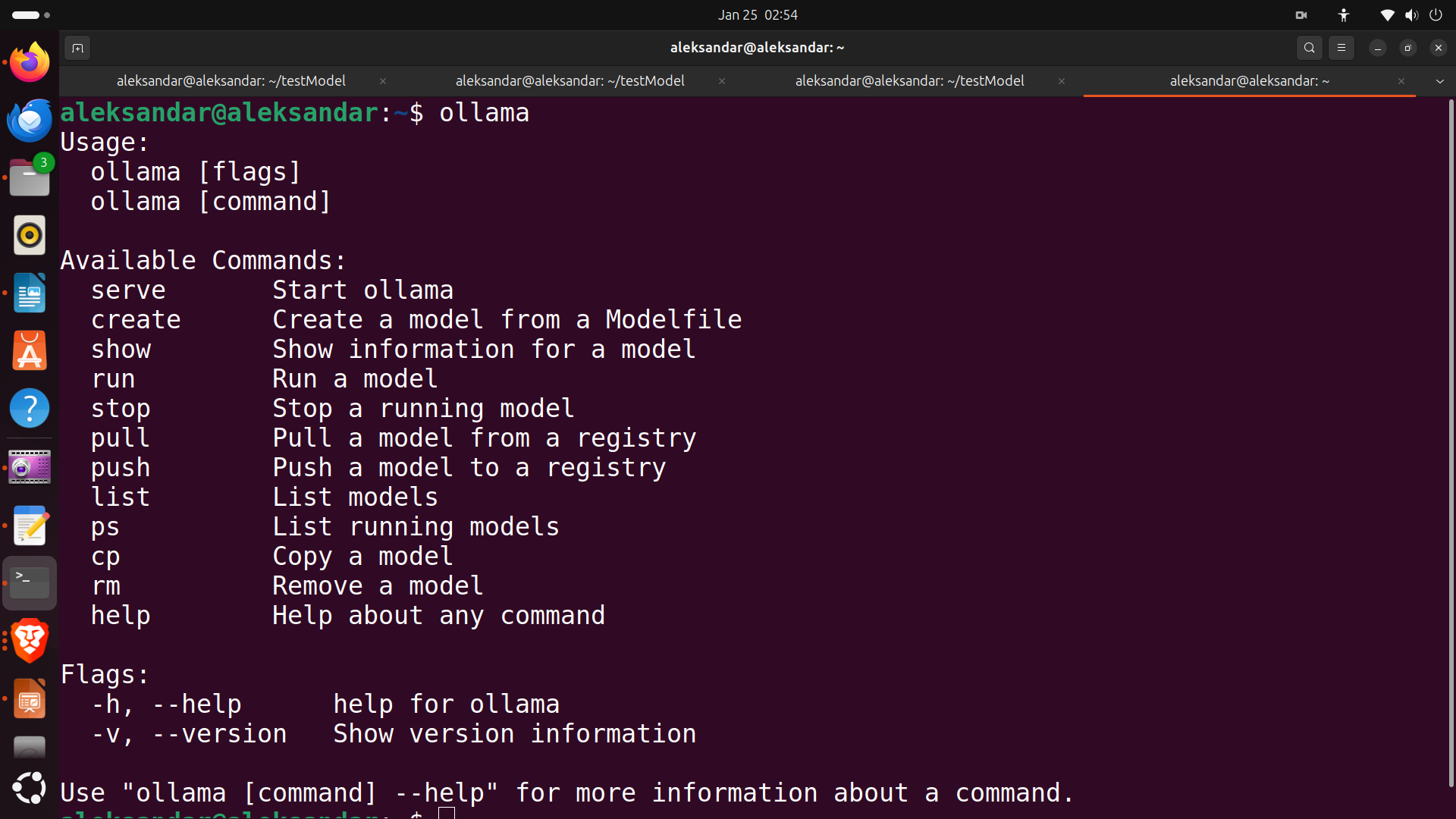

This will install Ollama. Once the installation finishes, execute this in the terminal:

ollama

If Ollama is installed, the response shown in the figure below will appear.

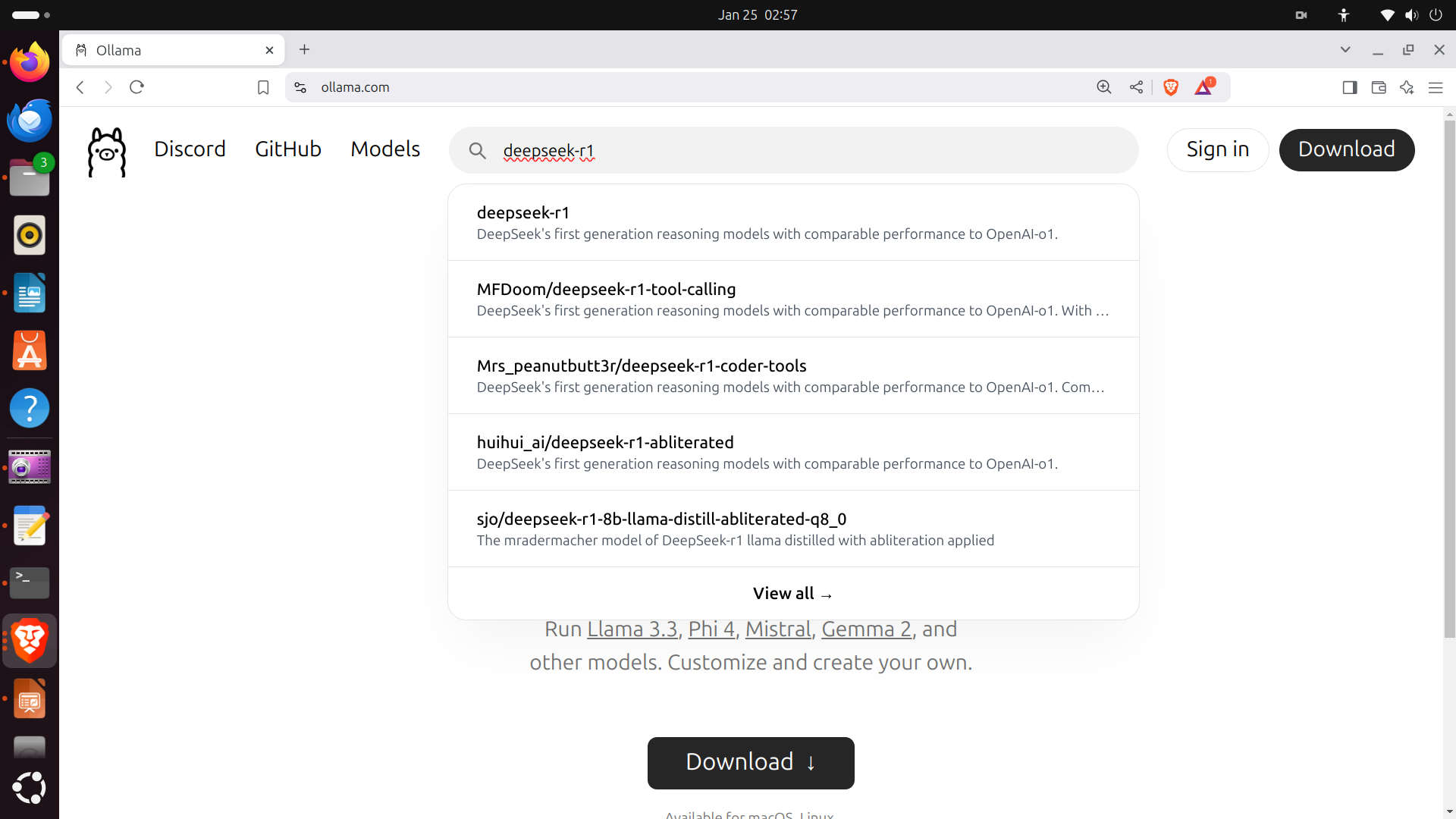

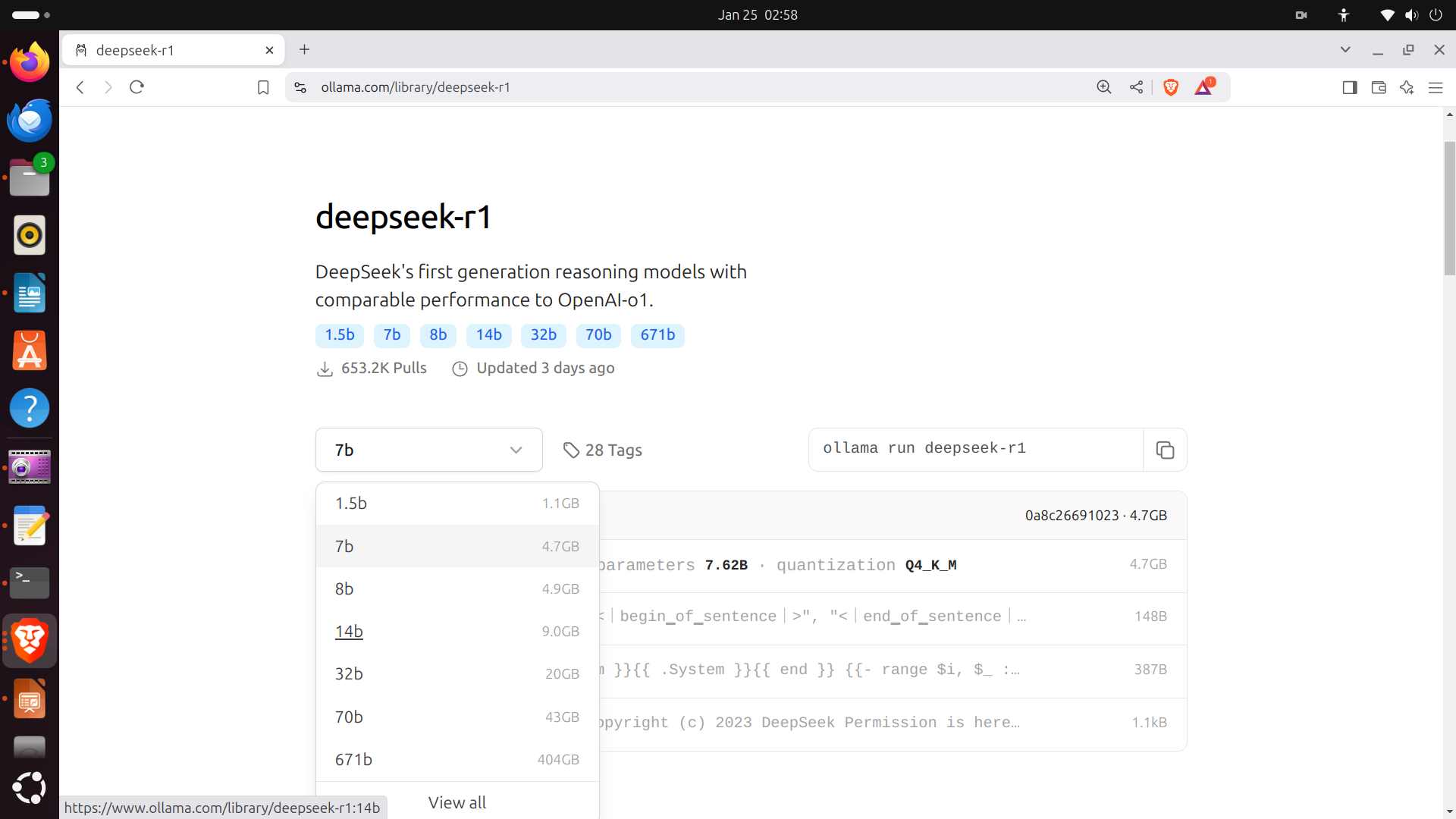
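The same check can also be scripted. The following is a minimal sketch, not part of the installation steps themselves, that looks for the ollama executable on the PATH and tests whether anything accepts TCP connections on port 11434. The helper names `find_ollama` and `port_is_open` are hypothetical, and the sketch assumes that the Ollama server listens on its default address 127.0.0.1:11434.

```python
import shutil
import socket
from typing import Optional


def find_ollama() -> Optional[str]:
    """Return the full path of the ollama executable, or None if it is not on PATH."""
    return shutil.which("ollama")


def port_is_open(host: str = "127.0.0.1", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    print("ollama executable:", find_ollama() or "not found on PATH")
    print("server listening on port 11434:", port_is_open())
```

An open port only shows that some process is listening there. It does not prove that the process is Ollama.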

The next step is to install the DeepSeek-R1 model. To do that, go to the Ollama website and search for DeepSeek-R1.

Click on the model, and then select the 14b version.

Then, copy the installation command and execute it in the terminal:

ollama run deepseek-r1:14b

This command will download and run the model. Test the model by asking a basic question. Next, exit the model by typing /bye.

The next step is to create a Python script for running the model. First, let us verify that Python is installed on the system. Open a terminal and type:

which python3

python3 --version

The output should show the path to the Python executable and the current Python version. In our case, the Python version is 3.12.3. Next, we need to create a workspace folder. To do that, type:

cd ~

mkdir testModel

cd testModel

Next, we need to install the package for creating Python virtual environments. Since our Python version is 3.12, the installation command is:

sudo apt install python3.12-venv

In the above command, replace 3.12 with your Python version (only the first two numbers, separated by the period).
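If you are unsure which two numbers to use, the package name can be derived from the running interpreter. The sketch below assumes the Ubuntu naming pattern used above (python followed by major.minor and -venv); the function name `venv_package_name` is hypothetical.

```python
import sys


def venv_package_name(version_info=sys.version_info) -> str:
    """Build the apt package name from the major and minor Python version numbers."""
    major, minor = version_info[0], version_info[1]
    return f"python{major}.{minor}-venv"


if __name__ == "__main__":
    # Prints the full installation command for the interpreter running this script
    print("sudo apt install", venv_package_name())
```

Run it with python3 so that it reports the same interpreter that will later create the virtual environment.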

Next, let us create and activate the Python virtual environment:

python3 -m venv env1

source env1/bin/activate

Next, we need to install the Ollama Python library. To do that, type:

pip install ollama

Finally, let us create a Python script. To do so, we use a simple Linux text editor called nano. Execute this:

nano test.py

The test.py file should look like this:

import ollama

desiredModel = 'deepseek-r1:14b'  # model name, as reported by "ollama list"

# Question that is sent to the model
questionToAsk = 'How to solve a quadratic equation x^2+5*x+6=0'

# Send the question as a single user message and wait for the complete reply
response = ollama.chat(model=desiredModel, messages=[
    {
        'role': 'user',
        'content': questionToAsk,
    },
])

# Extract the text of the reply
OllamaResponse = response['message']['content']

print(OllamaResponse)

# Save the reply to a text file
with open("OutputOllama.txt", "w", encoding="utf-8") as text_file:
    text_file.write(OllamaResponse)

This file will load the Ollama model called deepseek-r1:14b. The name of this model can be found by opening a new terminal and typing

ollama list

This command will list all the installed models and their names. Assign the name of your model to the variable desiredModel in the Python code given above. The Python script will call the model and forward the question stored in the string variable questionToAsk. The output will be printed on the screen and stored in the text file OutputOllama.txt. To run the script, save the file and, with the virtual environment still active, execute python3 test.py.
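The model names can also be read from Python instead of the terminal. The following is a minimal sketch that assumes the Ollama server is reachable at its default address http://localhost:11434 and that its /api/tags endpoint returns a JSON object with a "models" list whose entries carry a "name" field. The helper names `model_names` and `list_local_models` are hypothetical.

```python
import json
import urllib.request


def model_names(payload: dict) -> list:
    """Extract the model names from a decoded /api/tags response."""
    return [entry["name"] for entry in payload.get("models", [])]


def list_local_models(base_url: str = "http://localhost:11434", timeout: float = 5.0):
    """Return the names of the installed models, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as reply:
            return model_names(json.load(reply))
    except OSError:
        # urllib.error.URLError is a subclass of OSError, so this also covers
        # refused connections and timeouts
        return None


if __name__ == "__main__":
    names = list_local_models()
    if names is None:
        print("Could not reach the Ollama server.")
    else:
        for name in names:
            print(name)
```

Any name printed this way can be assigned to desiredModel in test.py. The sketch uses only the Python standard library, so it also works outside the virtual environment.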