In this tutorial, we explain how to run smolagents, a powerful and easy-to-use AI-agent library developed by Hugging Face. We will not spend much time explaining the power of AI agents. Instead, we explain how to install and use the smolagents library “locally” by using Ollama and Llama 3.2 3B. Once you learn how to install smolagents and run the test examples, you can proceed further and learn how to develop your own AI agents. You can also use the ideas presented in this tutorial to run any other Large Language Model (LLM) that Ollama can execute. The YouTube tutorial is given below.

Why should you use smolagents with Ollama and Llama? First, you do not need a remote API or an API key to access an LLM. You simply download and install Ollama and the Llama model, and you run everything on your own computer. Because your prompts and data do not leave your machine, this gives you a degree of privacy and protection. Second, Ollama and Llama are free to download and use, so you do not need to pay for access to an LLM. Third, you use your own computer's resources: Ollama runs a local server on your computer that executes the model, which gives you more control over how the AI algorithm is run.

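In this tutorial, we use Llama 3.2 3B, a lightweight 3-billion-parameter model from Meta. The version that Ollama downloads by default is quantized, which greatly reduces its memory footprint at the cost of some accuracy, so it runs on an ordinary computer.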
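To see why quantization matters on a laptop, here is a rough back-of-the-envelope estimate of the memory needed just to store the weights of a 3-billion-parameter model at different precisions. This is a simplified sketch: it assumes every weight uses the same number of bits and ignores the KV cache, activations, and runtime overhead, so real memory use is higher. The function name `weight_memory_gb` is our own, not part of Ollama or smolagents.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed to store model weights."""
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    params = 3e9  # roughly 3 billion parameters
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(params, bits):.1f} GB")
```

Going from 16-bit to 4-bit weights cuts the estimate from about 6 GB to about 1.5 GB, which is why a quantized 3B model fits comfortably in the memory of a typical laptop.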

Let us start with the installation. The first step is to install Ollama. Go to the official Ollama website, then download and install Ollama.

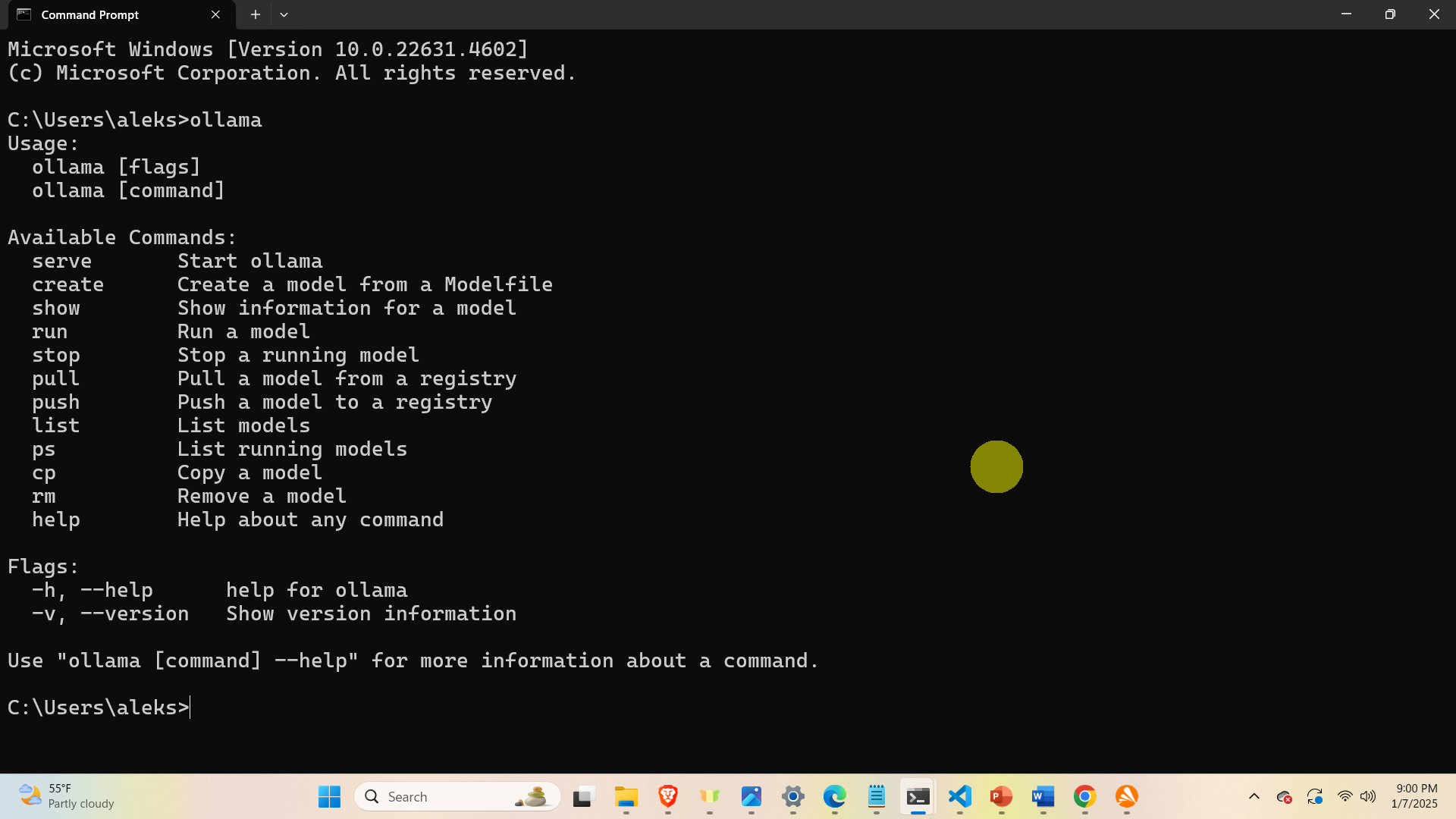

After installing Ollama, make sure that it is working. Open a Windows command prompt and type

```
ollama
```

The output should look like this:

If you get such an output, this means that you have installed Ollama properly.
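Besides the command-line check, you can confirm from Python that the Ollama background server is reachable. This sketch assumes Ollama is listening on its default address, `http://localhost:11434`, and queries its `GET /api/version` endpoint using only the standard library; the helper name `ollama_version` is hypothetical.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption: not changed)


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> Optional[str]:
    """Return the Ollama server version, or None if the server cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # OSError covers URLError, refused connections, and timeouts;
        # ValueError covers a response that is not valid JSON
        return None


if __name__ == "__main__":
    version = ollama_version()
    if version is None:
        print("Ollama is not reachable; start it, for example with `ollama serve`.")
    else:
        print(f"Ollama is running, version {version}")
```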

The next step is to download the Llama 3.2 3B model. Open a command prompt and type

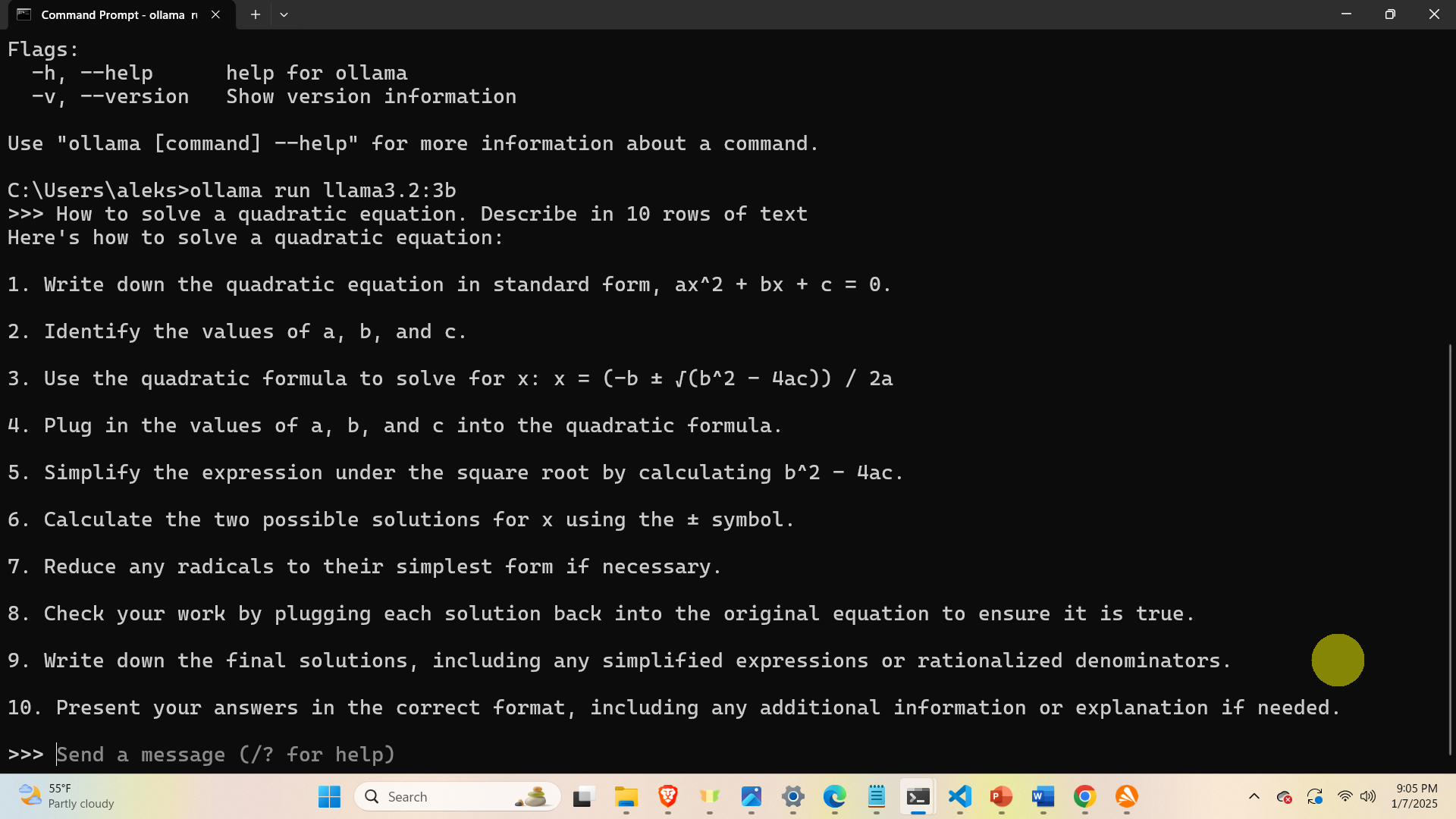

```
ollama pull llama3.2:3b
```

This command downloads Llama 3.2 3B. To test whether the model is working, type the following in the command prompt:

```
ollama run llama3.2:3b
```

This command loads the model and opens an interactive prompt where you can ask it questions.

Next, press Ctrl+D (or type `/bye`) to exit the Ollama prompt and return to the command prompt.
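The interactive `ollama run` session talks to the same local server that smolagents will use later. As a sketch, the code below sends one question to the model through Ollama's `POST /api/chat` endpoint with streaming turned off, so the reply arrives as a single JSON object. It assumes the default address and the `llama3.2:3b` tag pulled above; `build_chat_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # default address (assumption)


def build_chat_request(model: str, question: str) -> dict:
    """Build a non-streaming /api/chat payload with a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,  # one JSON response instead of a stream of chunks
    }


def ask(model: str, question: str, timeout: float = 120.0) -> str:
    """Send one question to the local model and return the assistant's reply text."""
    data = json.dumps(build_chat_request(model, question)).encode("utf-8")
    req = urllib.request.Request(  # passing data makes this a POST request
        OLLAMA_CHAT_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    try:
        print(ask("llama3.2:3b", "In one sentence, what is a quadratic equation?"))
    except OSError as exc:  # URLError is a subclass of OSError
        print(f"Could not reach Ollama: {exc}")
```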

The next step is to create a Python virtual environment and install smolagents. First, open a command prompt and type

```
cd\
mkdir testFolder
cd testFolder
python -m venv env1
env1\Scripts\activate.bat
```

These commands create a folder called testFolder in the root of the current drive, create a Python virtual environment named env1 inside it, and activate that environment. The next step is to install the Python packages:
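If you are unsure whether `env1` is active, Python itself can tell you: inside a `venv`, `sys.prefix` points at the environment folder, while `sys.base_prefix` still points at the base Python installation. This small check is our own addition, not part of smolagents; `in_virtualenv` is a hypothetical helper name.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv (sys.prefix differs from the base install)."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"Virtual environment active: {sys.prefix}")
    else:
        print("No virtual environment active; run env1\\Scripts\\activate.bat first.")
```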

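```
pip install smolagents
pip install accelerate
```

Next, start VS Code and write the following Python code to test the installation of smolagents. Depending on your smolagents version, `LiteLLMModel` may need the optional LiteLLM dependency; if Python reports that `litellm` is missing, install it with `pip install "smolagents[litellm]"`.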
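Before writing the agent, you can confirm that the packages landed in the active environment. This sketch uses the standard-library `importlib.metadata.version`; `installed_versions` is a hypothetical helper, and a value of `None` simply means the package is not installed in the current environment.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions(["smolagents", "accelerate", "litellm"]).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```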

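```python
from smolagents import CodeAgent, LiteLLMModel
from smolagents import tool  # not used below; used for defining custom tools
from smolagents.agents import ToolCallingAgent  # not used below
from smolagents import DuckDuckGoSearchTool  # not used below

# Connect to the local Ollama server through LiteLLM
model = LiteLLMModel(
    model_id="ollama_chat/llama3.2:3b",  # "ollama_chat/" prefix + the tag pulled with Ollama
    api_base="http://localhost:11434",  # default address of the local Ollama server
    api_key="ollama",  # placeholder; a local Ollama server does not check it
    num_ctx=8192,  # larger context window; Ollama's default is small for agent prompts
)

# Code-writing agent with smolagents' built-in base tools
agent = CodeAgent(
    tools=[],
    model=model,
    add_base_tools=True,
    # modules that the Python code generated by the agent is allowed to import
    additional_authorized_imports=["numpy", "sys", "wikipedia", "scipy", "requests", "bs4"],
)

result = agent.run("Solve the quadratic equation 2*x + 3*x**2 = 0")
print(result)
```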
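A small local model can make arithmetic mistakes, so it is worth checking the agent's answer independently. Rewriting 2*x + 3x^2 = 0 as 3x^2 + 2x + 0 = 0 gives a = 3, b = 2, c = 0, and factoring x(3x + 2) = 0 shows the roots are x = 0 and x = -2/3. The sketch below applies the quadratic formula; `real_roots` is our own helper, not part of smolagents, and it only reports real roots (an empty list means no real solution).

```python
import math
from typing import List


def real_roots(a: float, b: float, c: float) -> List[float]:
    """Real roots of a*x**2 + b*x + c = 0 (a != 0), sorted ascending."""
    if a == 0:
        raise ValueError("not a quadratic equation: a must be non-zero")
    disc = b * b - 4 * a * c  # discriminant
    if disc < 0:
        return []  # no real solutions
    sqrt_disc = math.sqrt(disc)
    roots = {(-b - sqrt_disc) / (2 * a), (-b + sqrt_disc) / (2 * a)}
    return sorted(roots)  # the set collapses a repeated root into one value


if __name__ == "__main__":
    # 2*x + 3*x**2 = 0 rewritten as 3*x**2 + 2*x + 0 = 0
    print(real_roots(3, 2, 0))
```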