In this tutorial, we explain how to install and run the Llama 3.3 70B LLM on a local computer. The Llama 3.3 70B model offers performance similar to that of the older Llama 3.1 405B model. However, it is much smaller, so it can run on computers with lower-end hardware. The YouTube tutorial is given below.

How to Install Llama 3.3

Our local computer has an NVIDIA RTX 3090 GPU with 24 GB of VRAM, 48 GB of system RAM, and an Intel i9-10850K CPU. Llama 3.3 works on this computer; however, it is relatively slow, as you can see in the YouTube tutorial. We can speed up inference by changing model parameters, which we will cover in future tutorials. You will need around 40 GB of disk space to download the Llama 3.3 70B model.
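As a rough sanity check on the 40 GB figure, the sketch below estimates the size of the model weights and checks whether the current drive has enough free space. It assumes the downloaded build stores weights at roughly 4.5 bits per parameter (a 4-bit quantization plus some overhead). That value and the function names are illustrative assumptions, not figures taken from the Ollama documentation.

```python
import shutil


def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9 parameters) * bits / 8 bits-per-byte / 1e9 bytes-per-GB
    return params_billion * bits_per_weight / 8


def free_disk_gb(path: str = ".") -> float:
    """Free space, in GB, on the drive that contains `path`."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(required_gb: float, path: str = ".") -> bool:
    """True if the drive containing `path` has at least `required_gb` free."""
    return free_disk_gb(path) >= required_gb


if __name__ == "__main__":
    # 4.5 bits per weight is an assumed value, used only for this estimate.
    size_gb = estimate_weights_gb(70, 4.5)
    print(f"Estimated weights: {size_gb:.1f} GB")
    print(f"Free disk space:   {free_disk_gb():.1f} GB")
    print(f"Enough space:      {has_enough_space(size_gb)}")
```

Under these assumptions the weights come to about 39 GB, which is more than the 24 GB of memory on the GPU. Part of the model then has to be held in system RAM, which is one likely reason inference is slow on this machine.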

The installation procedure is:

- Install Ollama on a local computer. Ollama is a framework for running LLMs locally. With Ollama, you can start a model from the command line and ask it questions.

- Once Ollama is installed, we will manually download and run the Llama 3.3 70B model.

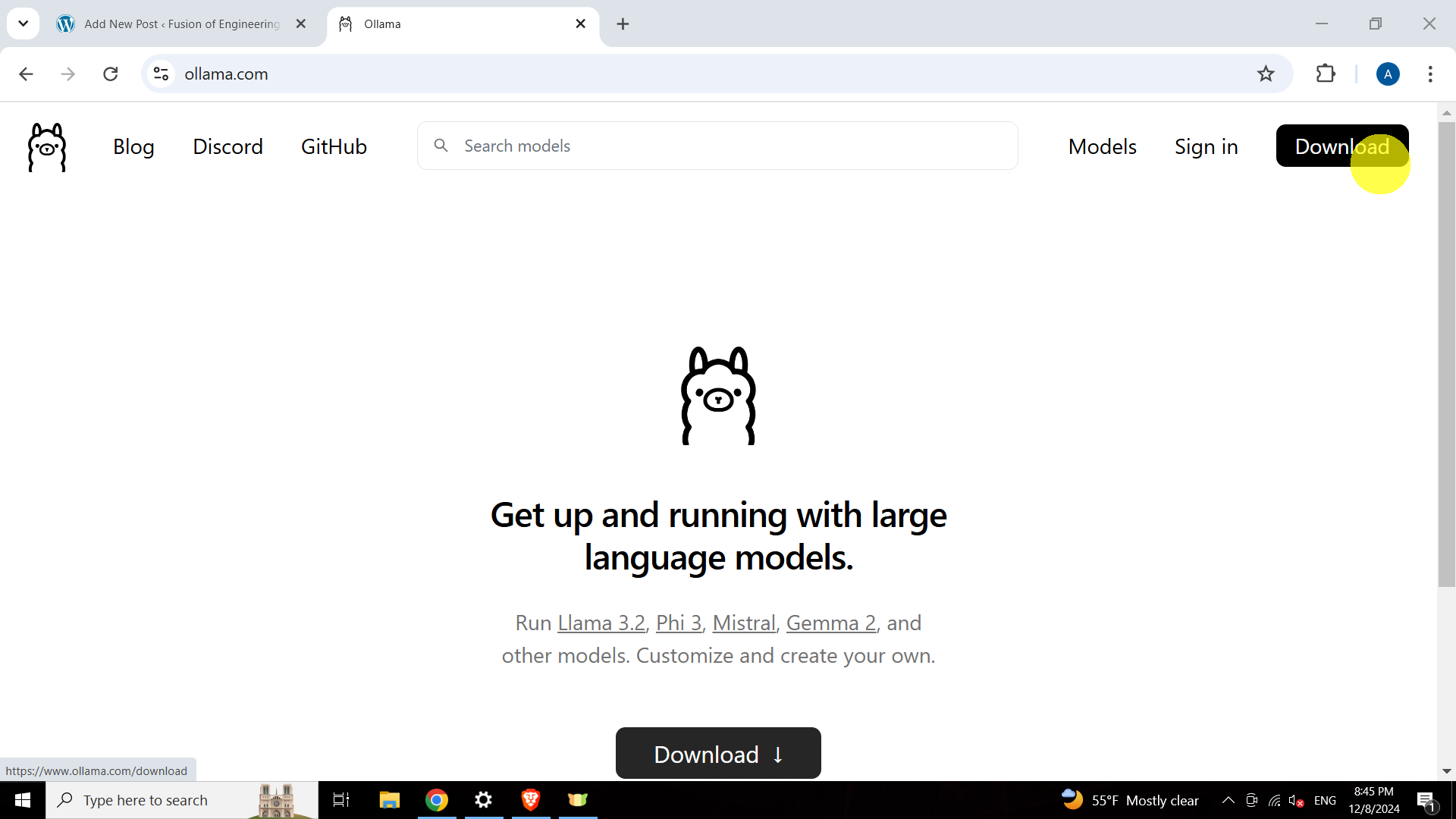

To install Ollama, go to the Ollama website and click on Download to download the installation file.

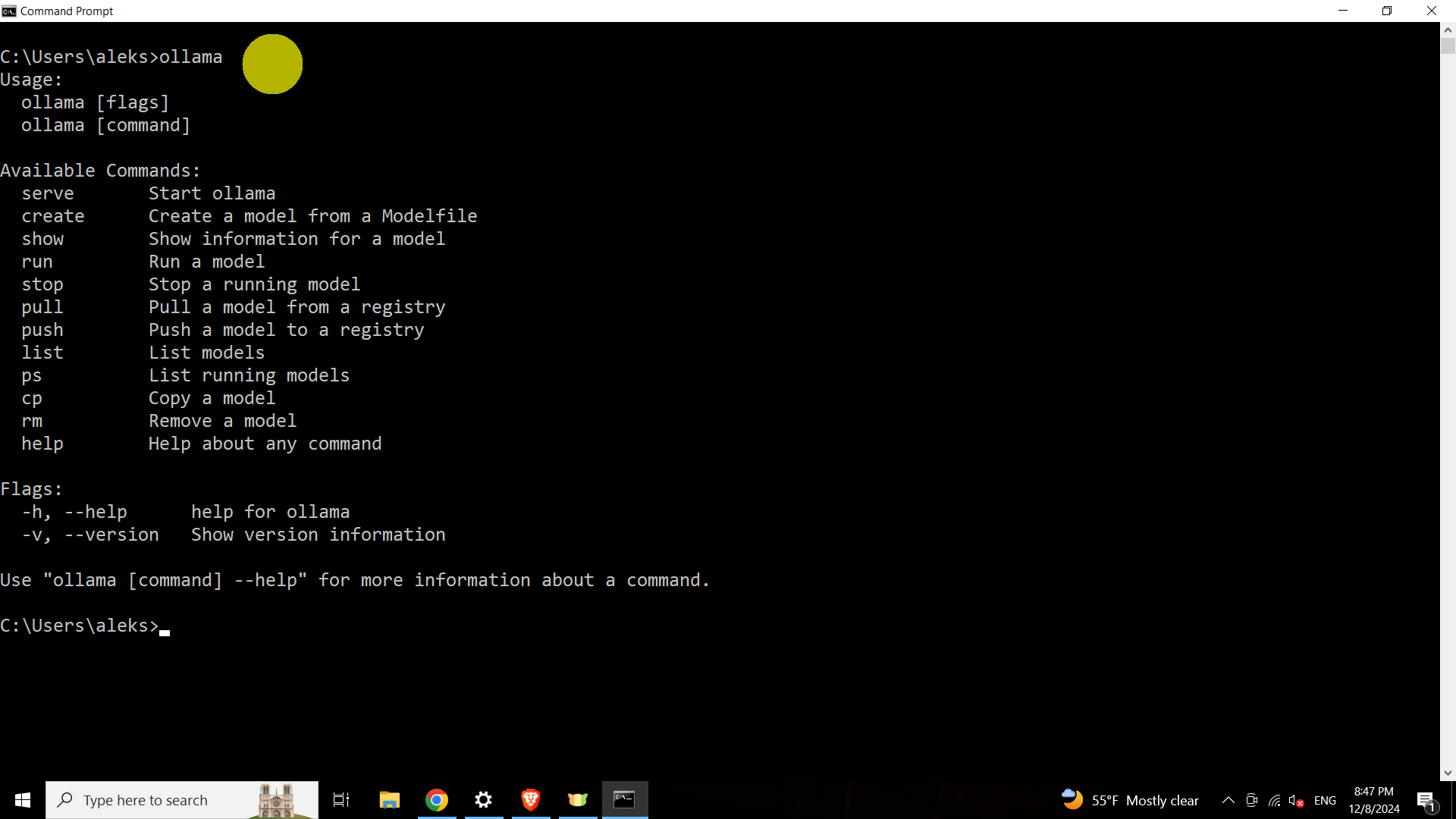

Next, run the installation file to install Ollama. The next step is to verify that Ollama is installed. Open a Windows Command Prompt and type

ollama

If Ollama is installed, the output should look like the screen shown below.

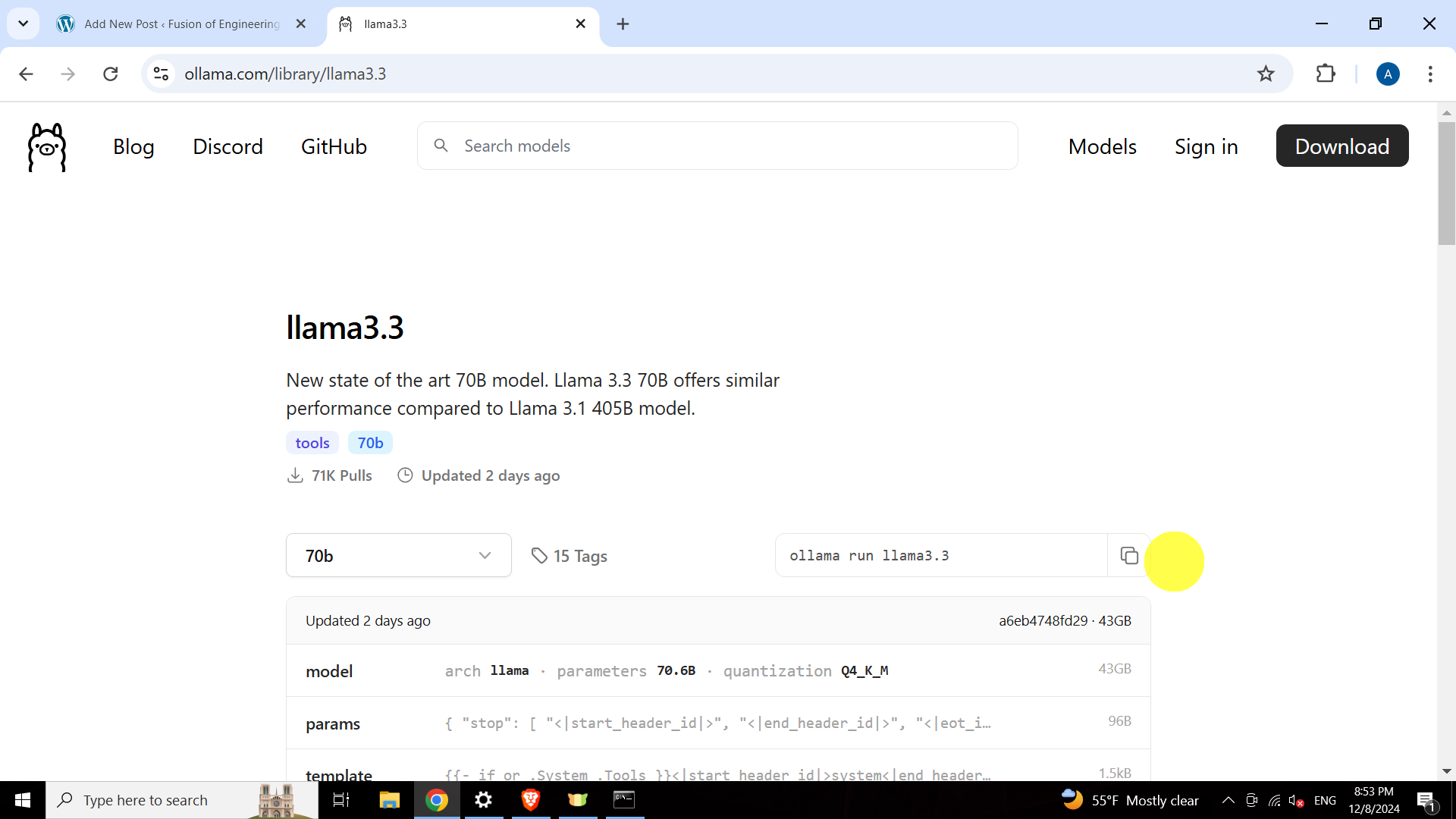
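The same check can be scripted. The sketch below is a minimal example, assuming Python is installed and that the `ollama` executable is on the system `PATH`. It looks up the executable and runs `ollama --version`. The function name `ollama_version` is a hypothetical helper introduced here for illustration.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version(executable: str = "ollama") -> Optional[str]:
    """Return the text printed by `<executable> --version`, or None if not found."""
    path = shutil.which(executable)  # resolves ollama.exe on Windows as well
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"],
        capture_output=True,
        text=True,
        timeout=30,
    )
    # Take stdout if it has content, otherwise fall back to stderr.
    output = (result.stdout or result.stderr).strip()
    return output or None


if __name__ == "__main__":
    version = ollama_version()
    if version is None:
        print("Ollama was not found on PATH.")
    else:
        print(version)
```

If the function returns `None`, the installer may not have finished, or the Command Prompt may need to be reopened so that it picks up the updated `PATH`.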

Once Ollama is installed, go to this website

https://www.ollama.com/library/llama3.3

to find the command for installing Llama 3.3. Copy the installation command given on the website

ollama run llama3.3

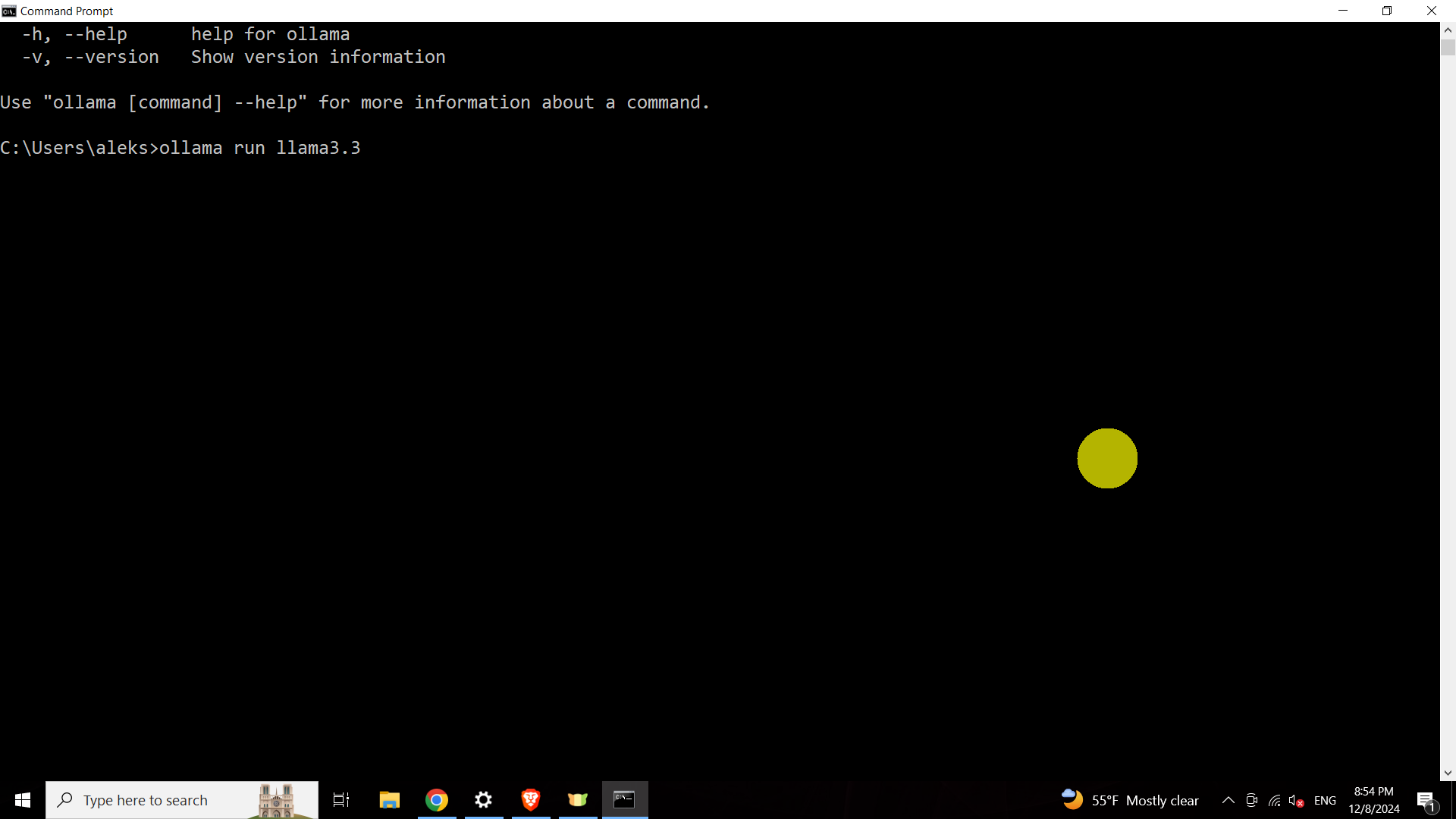

Finally, open a Windows Command Prompt, and type the command

ollama run llama3.3

This command should download and run the Llama 3.3 70B model.
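Besides the interactive prompt, Ollama also serves a local HTTP API, so the model can be queried from a script. The sketch below uses only the Python standard library. It assumes that Ollama is running on its default address (`http://localhost:11434`) and that the `llama3.3` model has already been downloaded. The helper names `build_request` and `ask` are hypothetical and introduced here for illustration.

```python
import json
import urllib.request

# Assumed default address of the local Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.3") -> urllib.request.Request:
    """Build a POST request that asks for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3.3", timeout: float = 600.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

For example, `print(ask("Why is the sky blue?"))` should print the model's answer. The timeout is deliberately generous because a 70B model can take a long time to respond on hardware like ours.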