In this Large Language Model (LLM) and machine learning tutorial, we explain how to run the Llama 3.2 1B and 3B LLMs on a Raspberry Pi running Linux Ubuntu. In this tutorial, we use a Raspberry Pi 5. We also created a tutorial on how to run Llama 3.2 models on a Raspberry Pi 4. The Raspberry Pi 5 is much faster than the Raspberry Pi 4, so we recommend using a Raspberry Pi 5. The YouTube tutorial is given below.

At the time of writing, Llama 3.2 1B and 3B are the newest models in the Llama family of large language models. They are highly compressed and optimized models whose purpose is to run on edge devices with limited memory and computational resources. The Raspberry Pi 5 can itself be seen as an edge device (in some sense), which makes it a natural target for these models.

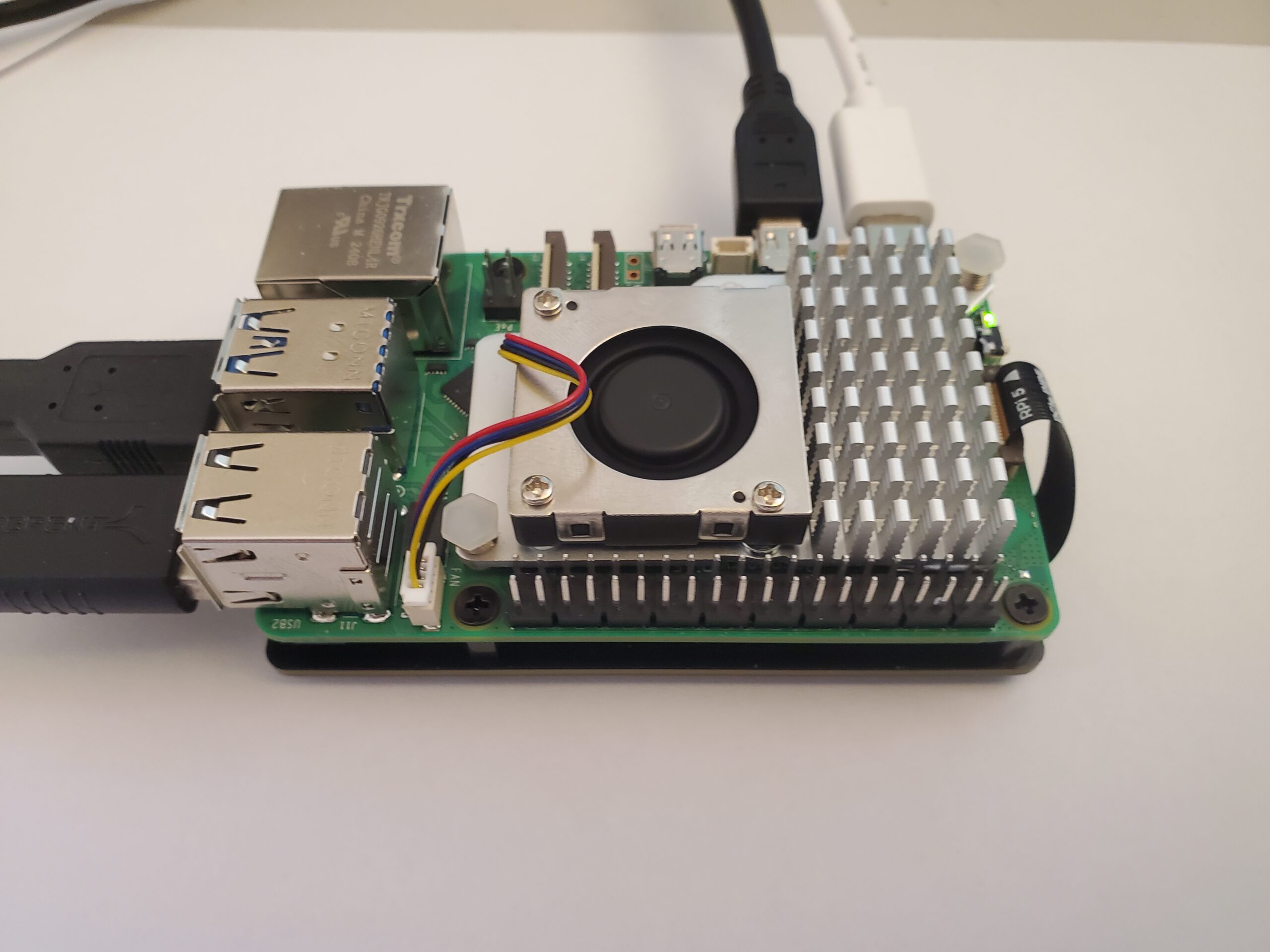

Our Raspberry Pi 5 computational platform is shown below.

We have a Raspberry Pi 5 with 8GB of RAM and an NVMe SSD with a capacity of around 500GB. The NVMe SSD significantly increases the read and write speeds of our Raspberry Pi 5. Besides an NVMe SSD, you can also use an external USB SSD. We suggest avoiding micro-SD cards since they are much slower. We have created a separate video tutorial on how to install an NVMe SSD in a Raspberry Pi.

Before we start with explanations, we need to emphasize the following:

In this tutorial, we use Linux Ubuntu 24.04. However, you can also use any other supported version of Linux Ubuntu. We created a separate video tutorial explaining how to install Linux Ubuntu 24.04 on a Raspberry Pi.

Install Ollama and Llama 3.2 1B and 3B models on Raspberry Pi 5

First, make sure that your system is up to date. For that purpose, open a terminal and type

sudo apt update && sudo apt upgrade

Next, open a second terminal alongside the first one and start a program called top in it. In that terminal, type

top

This program is used to monitor the computational resources.
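If you prefer a single number over the interactive display of top, the available memory can also be read directly. The following is a minimal sketch, assuming a Linux system that exposes `/proc/meminfo`; the function name `mem_available_mib` is hypothetical and chosen only for illustration.

```shell
# Print the MemAvailable value, converted to MiB, from a meminfo-style file.
# Defaults to /proc/meminfo, which the Linux kernel provides.
mem_available_mib() {
    awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Only report when the file is actually readable on this system.
if [ -r /proc/meminfo ]; then
    echo "Available memory: $(mem_available_mib) MiB"
fi
```

Running this before and after starting a model gives a rough idea of how much memory the model occupies.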

We will run Llama 3.2 through Ollama. Ollama is a framework and an API for running different large language models. It provides an easy-to-use interface for running models, and it also has a Python library that is very simple to use. First, we will install Ollama, and then we will download the Llama 3.2 models.

Ollama listens on port 11434. If the ufw firewall is active on your system, make sure that it allows inbound connections on this port. To do that, open a terminal and type

sudo ufw allow 11434/tcp

To install Ollama in Linux Ubuntu, open a terminal and type

curl -fsSL https://ollama.com/install.sh | sh

It will take some time to download and install Ollama. To verify the installation, first open a web browser and enter this address:

127.0.0.1:11434

You should see a message saying that Ollama is running. If you do not see this message, you need to start Ollama manually. You can do so by opening a terminal and typing

ollama serve

This should start Ollama if it was not already running. Now open a new terminal and type:

ollama

and

ollama list

If both commands print a response, Ollama can be executed from a terminal window. The next step is to download the Llama 3.2 models.
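The verification steps above can also be scripted. The following is a minimal sketch, assuming curl is installed and Ollama uses its default local address; the function name `ollama_is_up` is hypothetical. It looks for the same "Ollama is running" message that the browser shows and then checks that the ollama command is on the PATH.

```shell
# Return success if an Ollama server answers at the given base URL
# (default: the local address used in this tutorial).
ollama_is_up() {
    curl -fsS --max-time 5 "${1:-http://127.0.0.1:11434}" 2>/dev/null \
        | grep -q "Ollama is running"
}

if ollama_is_up; then
    echo "Ollama server is reachable."
else
    echo "Ollama server is not reachable; try starting it with: ollama serve"
fi

# Check that the ollama command itself can be found.
if command -v ollama >/dev/null 2>&1; then
    ollama --version
else
    echo "The ollama command was not found."
fi
```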

To download the 1B and 3B models, type this in the terminal:

ollama pull llama3.2:1b

ollama pull llama3.2:3b
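If you rerun the setup, it can be convenient to pull only the models that are not yet present. The following is a minimal sketch, assuming that `ollama list` prints a header line followed by one model per line with the model name in the first column; the helper name `has_model` is hypothetical.

```shell
# Read `ollama list` output on stdin and succeed if the given model name
# appears in the first column (the header line is skipped).
has_model() {
    awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}

# Only run when the ollama command is available.
if command -v ollama >/dev/null 2>&1; then
    for model in llama3.2:1b llama3.2:3b; do
        if ollama list | has_model "$model"; then
            echo "$model is already downloaded."
        else
            ollama pull "$model"
        fi
    done
fi
```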

Downloading the models takes roughly 5-10 minutes, depending on the speed of your internet connection. After the models are downloaded, we can run them by typing this (to run the 1B model)

ollama run llama3.2:1b

or this (to run the 3B model)

ollama run llama3.2:3b

After that, you will see a model prompt and you can start asking questions. To exit a model, type this

/bye

To list all the models on your computer, type this

ollama list
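Since Ollama also acts as an API on port 11434, a model can be queried without the interactive prompt. The following is a minimal sketch, assuming that the server is running locally and that the 1B model has been downloaded; it uses Ollama's `/api/generate` endpoint with streaming turned off. The helper names `generate_payload` and `ask_once` and the example prompt are hypothetical and chosen only for illustration.

```shell
# Build the JSON body for the /api/generate endpoint.
# Assumption: the prompt contains no double quotes, backslashes, or newlines,
# because this sketch performs no JSON escaping.
generate_payload() {
    printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Send one prompt to the local Ollama server and print the raw JSON reply.
ask_once() {
    curl -sS --max-time 300 http://127.0.0.1:11434/api/generate \
        -d "$(generate_payload "$1" "$2")"
}

# Only send the request if a server answers on the default port.
if curl -fsS --max-time 5 http://127.0.0.1:11434 >/dev/null 2>&1; then
    ask_once llama3.2:1b "Why is the sky blue?"
fi
```

The reply is a JSON object, so it is easy to process further in scripts. On a Raspberry Pi, the 1B model is the quicker of the two to try this with.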